TikTok CEO Shou Chew on Its Future — and What Makes Its Algorithm Different | Live at TED2023

Summary

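TLDR: In this video, Chris Anderson talks with TikTok CEO Shou Chew. Shou Chew describes how he came to TikTok and explains the algorithm and recommendation mechanism behind the platform's success. He lays out TikTok's mission of inspiring creativity and bringing joy, and discusses how the company keeps the platform safe, particularly for teenagers. He also covers TikTok's work on US data security, including Project Texas, which stores American users' data in the United States. Throughout, the conversation focuses on TikTok's impact on users and content creators and on the measures it takes to address privacy and data-security challenges.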

Takeaways

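- 🎉 Chris Anderson jokingly congratulates Shou Chew on producing a bipartisan consensus at his US congressional hearing (the consensus being that TikTok should be banned).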

- 📱 Shou Chew shares how he came to join TikTok and describes the background of its founding.

- 🔍 TikTok's recommendation algorithm recommends content based on users' interest signals rather than their social connections.

- 🌍 TikTok gives ordinary people a platform to showcase their talent and a chance to be discovered and succeed.

- 👨‍🏫 Teachers and educational content are also widely popular on TikTok, especially STEM content.

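- 📉 TikTok takes several measures to discourage excessive use, including time limits and reminder videos that prompt users to take a break.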

- 🛡️ TikTok has clear community guidelines and uses a combination of machines and human moderators to review and remove harmful content.

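- 🗣️ TikTok's transparency measures include allowing third parties to review its source code, to safeguard free expression on the platform and prevent government interference.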

- 🔒 Shou Chew introduces Project Texas, which aims to strengthen data security by storing American users' data within the United States.

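- 🌟 Shou Chew outlines his vision for TikTok over the next five years, including more opportunities to discover content, create, and connect communities.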

Q & A

Question 1: How did Shou Chew come to join TikTok?

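- Shou Chew explains that he is from Singapore. Roughly ten years ago he met two engineers who were building a product whose idea was to recommend content based on what people liked rather than whom they knew. About five years ago, with the rise of 4G and short video, TikTok was born. A couple of years ago Shou Chew had the opportunity to run the company, and he says it still excites him every day.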

Question 2: What are the key factors behind TikTok's success?

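- Shou Chew attributes much of TikTok's success to its content recommendation algorithm, which recommends content based on users' interest signals. TikTok's short-video format and its full-screen design optimized for smartphones were also important factors.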

Question 3: How does TikTok's recommendation algorithm work?

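- Shou Chew explains that the algorithm is essentially pattern recognition on users' interest signals. For example, if several users have liked the same videos, the algorithm combines those signals and shows each of them the other videos that their overlapping peers liked.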

Question 4: How does TikTok ensure user safety, especially for teenagers?

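- Shou Chew says TikTok has clear community guidelines that prohibit violence, pornography, and other harmful content. Minors get a more restricted experience: by default, videos from users under 16 are not eligible to go viral, users under 16 cannot use in-app instant messaging, users under 18 cannot use livestreaming, and parents can use the Family Pairing feature to control their children's usage time.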

Question 5: How does TikTok handle harmful content on the platform?

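- Shou Chew says TikTok has a team of tens of thousands of people, working alongside machines, dedicated to identifying and removing harmful content from the platform. The company also works with creators to produce educational videos that warn users about the risks of dangerous behavior.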

Question 6: What measures has TikTok taken on data privacy and protection?

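- Shou Chew mentions that TikTok launched "Project Texas," which stores American users' data within the United States, managed and overseen by the US company Oracle. The company also lets third-party auditors review its source code to ensure the platform's transparency and data security.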

Question 7: How does TikTok support content creators?

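- Shou Chew says TikTok gives every user a platform to showcase their talent, whether or not they already have followers. As a result, many talented but unknown people can find an audience and succeed on TikTok.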

Question 8: How does TikTok help small businesses?

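- Shou Chew cites Samuel, who runs a taco restaurant in Phoenix, Arizona, and attracted large numbers of customers by posting content on TikTok; last year the restaurant made roughly one million dollars in revenue via TikTok. He says TikTok has opened new business opportunities for many small businesses.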

Question 9: How does TikTok address teenage users' dependence on screen time?

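- Shou Chew says that when users spend too long on the app, TikTok proactively sends them videos suggesting they take a break, sooner if it is late at night. For users under 18, the default is a 60-minute daily time limit, and parents can further control usage time through Family Pairing.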

Question 10: What is TikTok's vision for the next five years?

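- Shou Chew says TikTok's vision is to keep providing a window to discover, a canvas to create, and bridges to connect. He hopes to use new technologies and AI to help users create more content, to foster more connections between users, and to help more businesses succeed through TikTok.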

Outlines

😀 Welcome and Background

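Chris Anderson welcomes Shou Chew and jokingly congratulates him on what he achieved at the congressional hearing: a bipartisan consensus in US politics, namely that TikTok should be banned. Chris then asks about Shou's background and how he came to TikTok. Shou Chew describes his background, explains how he met two engineers who were building the product that preceded TikTok, and describes TikTok's early development and how he came to run the company.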

🤔 The Secret of TikTok's Success

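Chris Anderson digs into why TikTok is so successful and so addictive. Shou Chew explains the company's mission, to inspire creativity and bring joy, and describes how the recommendation algorithm serves content based on user interests. He emphasizes how TikTok's distinctive discovery engine and machine-learning algorithms help users find interesting content, and gives the example of creators like Khaby who have succeeded on TikTok.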

📊 How the Recommendation Algorithm Works

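Chris Anderson continues to probe TikTok's recommendation algorithm. Shou Chew gives a simplified explanation of how it works, describing how users' interaction data is used for collaborative filtering to recommend relevant content. They discuss the importance of optimizing for the smartphone format and short video, and how TikTok's recommendations connect users and help them discover communities. Shou Chew also shares several creator success stories, emphasizing that TikTok gives ordinary people a platform to showcase their talent.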

🛡️ Community Guidelines and Content Moderation

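The discussion turns to how TikTok handles bad actors and harmful content on the platform. Shou Chew explains TikTok's community guidelines and how it combines human and machine review to remove content that violates them. He stresses the importance of giving users of different ages different experiences and describes the protections for underage users, such as time limits and parental controls.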

⏳ Managing Usage Time and a Healthy Relationship

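Chris Anderson raises the question of addiction, especially among young users. Shou Chew explains how TikTok manages screen time by prompting users to take breaks and by setting time limits. He stresses that TikTok's goal is not to maximize time spent but to foster a healthy relationship between users and the platform, and explains that the 60-minute limit was recommended by experts at the Digital Wellness Lab at Boston Children's Hospital.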

👮 Data Privacy and Concerns About Government Interference

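The conversation turns to the US Congress's concerns about TikTok's data privacy and potential government interference. Shou Chew introduces Project Texas, under which American users' data is stored in the United States and managed by the US company Oracle to ensure data security and transparency. He emphasizes TikTok's efforts to protect data and explains how third-party audits and transparency reports are meant to prevent any form of government manipulation.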

🔍 Transparency and Algorithm Oversight

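Shou Chew details TikTok's transparency measures, including allowing third parties to review its source code and providing research tools, to ensure the fairness and transparency of the platform's content and algorithms. He reiterates his commitment to preventing government manipulation and emphasizes the importance of transparency and third-party oversight in reducing that risk.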

🌐 Future Vision and Platform Impact

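In the final part, Chris Anderson asks Shou Chew about his vision for TikTok's future. Shou Chew describes TikTok's long-term goals of helping people discover new things, inspiring creativity, and connecting communities. He mentions the broad impact of science, book, and cooking content on the platform, and emphasizes that TikTok gives ordinary people a platform to showcase their talent and build business opportunities. The conversation ends with the two making a TikTok video, highlighting Shou Chew's charisma and influence as CEO.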

Keywords

💡TikTok

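💡Recommendation algorithm

💡User-generated content

💡Addictiveness

💡Data privacy

💡Creators

💡Community guidelines

💡Project Texas

💡Artificial intelligence

💡Transparency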

Highlights

Chris Anderson praises Shou Chew for achieving a bipartisan consensus in US politics, even though that consensus centered on "We must ban TikTok."

Shou Chew tells how he came to TikTok, recalling meeting two engineers ten years ago and TikTok's birth five years ago in the 4G era.

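Shou Chew describes TikTok's mission as inspiring creativity and bringing joy, and shares the platform's vision: a window to discover, a canvas to create, and bridges to connect.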

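Shou Chew explains how TikTok's recommendation algorithm uses machine learning to quickly learn users' interest signals and show them relevant content.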

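Shou Chew says TikTok's recommendation algorithm is based on math, using pattern recognition to show content users are likely to enjoy rather than relying on whom they already know.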

Shou Chew shares how TikTok helps ordinary people showcase their talent, citing Khaby, a former factory worker who became a TikTok star with 160 million followers.

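Shou Chew explains how TikTok gives small and medium-sized businesses and individual creators a platform to be discovered, citing a restaurant that succeeded through TikTok.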

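Shou Chew stresses that TikTok is not simply chasing time spent: the platform proactively reminds users to take breaks and sets a default 60-minute time limit for teenagers.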

Shou Chew discusses TikTok's efforts to protect teenage users, including parental control tools and restricted features for underage users.

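Shou Chew discusses Project Texas, TikTok's plan to protect US user data, and explains the security measures for storing that data on Oracle's cloud infrastructure in the United States.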

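Shou Chew says that to prevent government manipulation, TikTok offers unprecedented transparency, including letting third parties review its source code.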

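Shou Chew explains how TikTok relies on transparency and third-party monitoring to ensure the platform cannot be manipulated by governments, and stresses that this is part of the company's mission.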

Shou Chew shares TikTok's success in promoting STEM content, noting that STEM content has accumulated 116 billion views globally.

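Shou Chew reiterates that TikTok's mission is to inspire creativity and bring joy through discovery, creation, and connection, and shares the platform's vision for the future.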

At the end of the TED conversation, Shou Chew films a TikTok video with the audience and expresses confidence in the platform's positive impact.

Transcripts

Chris Anderson: It's very nice to have you here.

Let's see.

First of all, congratulations.

You really pulled off something remarkable on that grilling,

you achieved something that very few people do,

which was, you pulled off a kind of, a bipartisan consensus in US politics.

It was great.

(Laughter)

The bad news was that that consensus largely seemed to be:

"We must ban TikTok."

So we're going to come to that in a bit.

And I'm curious, but before we go there, we need to know about you.

You seem to me like a remarkable person.

I want to know a bit of your story

and how you came to TikTok in the first place.

Shou Chew: Thank you, Chris.

Before we do that, can I just check, need to know my audience,

how many of you here use TikTok?

Oh, thank you.

For those who don’t, the Wi-Fi is free.

(Laughter)

CA: There’s another question, which is,

how many of you here have had your lives touched through TikTok,

through your kids and other people in your lives?

SC: Oh, that's great to see.

CA: It's basically, if you're alive,

you have had some kind of contact with TikTok at this point.

So tell us about you.

SC: So my name is Shou, and I’m from Singapore.

Roughly 10 years ago,

I met with two engineers who were building a product.

And the idea behind this was to build a product

that recommended content to people not based on who they knew,

which was, if you think about it, 10 years ago,

the social graph was all in the rage.

And the idea was, you know,

your content and the feed that you saw should be based on people that you knew.

But 10 years ago,

these two engineers thought about something different,

which is, instead of showing you --

instead of showing you people you knew,

why don't we show you content that you liked?

And that's sort of the genesis and the birth

of the early iterations of TikTok.

And about five years ago,

with the advent of 4G, short video, mobile phone penetration,

TikTok was born.

And a couple of years ago,

I had the opportunity to run this company,

and it still excites me every single day.

CA: So I want to dig in a little more into this,

about what was it that made this take-off so explosive?

Because the language I hear from people who spent time on it,

it's sort of like I mean,

it is a different level of addiction to other media out there.

And I don't necessarily mean this in a good way, we'll be coming on to it.

There’s good and bad things about this type of addiction.

But it’s the feeling

that within a couple of days of experience of TikTok,

it knows you and it surprises you

with things that you didn't know you were going to be interested in,

but you are.

How?

Is it really just, instead of the social graph --

What are these algorithms doing?

SC: I think to describe this, to begin to answer your question,

we have to talk about the mission of the company.

Now the mission is to inspire creativity and to bring joy.

And I think missions for companies like ours [are] really important.

Because you have product managers working on the product every single day,

and they need to have a North Star, you know,

something to sort of, work towards together.

Now, based on this mission,

our vision is to provide three things to our users.

We want to provide a window to discover,

and I’ll talk about discovery, you talked about this, in a second.

We want to give them a canvas to create,

which is going to be really exciting with new technologies in AI

that are going to help people create new things.

And the final thing is bridges for people to connect.

So that's sort of the vision of what we're trying to build.

Now what really makes TikTok very unique and very different

is the whole discovery engine behind it.

So there are earlier apps that I have a lot of respect for,

but they were built for a different purpose.

For example, in the era of search, you know,

there was an app that was built for people who wanted to search things

so that is more easily found.

And then in the era of social graphs,

it was about connecting people and their followers.

Now what we have done is that ... based on our machine-learning algorithms,

we're showing people what they liked.

And what this means is that we have given the everyday person

a platform to be discovered.

If you have talent, it is very, very easy to get discovered on TikTok.

And I'll just give you one example of this.

The biggest creator on TikTok is a guy called Khaby.

Khaby was from Senegal,

he lives in Italy, he was a factory worker.

He, for the longest time, didn't even speak in any of his videos.

But what he did was he had talent.

He was funny, he had a good expression,

he had creativity, so he kept posting.

And today he has 160 million followers on our platform.

So every single day we hear stories like that,

businesses, people with talent.

And I think it's very freeing to have a platform

where, as long as you have talent, you're going to be heard

and you have the chance to succeed.

And that's what we're providing to our users.

CA: So this is the amazing thing to me.

Like, most of us have grown up with, say, network television,

where, for decades you've had thousands of brilliant, creative people

toiling in the trenches,

trying to imagine stuff that will be amazing for an audience.

And none of them ever remotely came up with anything

that looked like many of your creators.

So these algorithms,

just by observing people's behavior and what they look like,

have discovered things that thousands of brilliant humans never discovered.

Tell me some of the things that it is looking at.

So obvious things, like if someone presses like

or stays on a video for a long time,

that gives you a clue, "more like that."

But is it subject matter?

What are the array of things

that you have noticed that you can actually track

that provide useful clues?

SC: I'm going to simplify this a lot,

but the machine learning, the recommendation algorithm

is really just math.

So, for example, if you liked videos one, two, three and four,

and I like videos one, two, three and five,

maybe he liked videos one, two, three and six.

Now what's going to happen is,

because we like one, two, three at the same time,

he's going to be shown four, five, six, and so are we.

And you can think about this repeated at scale in real time

across more than a billion people.

That's basically what it is, it's math.

And of course, you know,

AI and machine learning has allowed this to be done

at a very, very big scale.

And what we have seen, the result of this,

is that it learns the interest signals

that people exhibit very quickly

and shows you content that's really relevant for you

in a very quick way.
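Shou Chew's "videos one, two, three" example describes user-based collaborative filtering, the term Chris Anderson uses for it in his follow-up. The Python sketch below reproduces that three-user example. It assumes Jaccard similarity as the overlap measure and a hypothetical 0.5 similarity cutoff; the names `likes`, `jaccard`, and `recommend` are illustrative, and TikTok's actual production system is not described in the talk.

```python
from collections import defaultdict

# Hypothetical "likes" data mirroring the talk's example:
# three people who all liked videos 1, 2 and 3, plus one different video each.
likes = {
    "you": {1, 2, 3, 4},
    "me": {1, 2, 3, 5},
    "him": {1, 2, 3, 6},
}


def jaccard(a: set, b: set) -> float:
    """Overlap between two users' liked sets, from 0.0 (none) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user: str, likes: dict, min_similarity: float = 0.5) -> list:
    """User-based collaborative filtering: score each unseen video by the
    summed similarity of the other users who liked it."""
    scores = defaultdict(float)
    mine = likes[user]
    for other, theirs in likes.items():
        if other == user:
            continue
        sim = jaccard(mine, theirs)
        if sim < min_similarity:
            continue  # ignore users whose tastes barely overlap (assumed cutoff)
        for video in theirs - mine:
            scores[video] += sim
    # Highest score first; ties broken by video id so the output is deterministic.
    return sorted(scores, key=lambda v: (-scores[v], v))


if __name__ == "__main__":
    print(recommend("you", likes))  # [5, 6]
```

Because the three users share videos 1 to 3, each is shown the videos the other two liked and they have not yet seen, which is the "four, five, six" outcome Shou Chew describes. At TikTok's scale this would be computed very differently (for example with learned embeddings), so the sketch only illustrates the idea.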

CA: So it's a form of collaborative filtering, from what you're saying.

The theory behind it is that these humans are weird people,

we don't really know what they're interested in,

but if we see that one human is interested,

with an overlap of someone else, chances are, you know,

you could make use of the other pieces

that are in that overlapped human's repertoire to feed them,

and they'll be surprised.

But the reason they like it is because their pal also liked it.

SC: It's pattern recognition based on your interest signals.

And I think the other thing here

is that we don't actually ask you 20 questions

on whether you like a piece of content, you know, what are your interests,

we don't do that.

We built that experience organically into the app experience.

So you are voting with your thumbs by watching a video,

by swiping it, by liking it, by sharing it,

you are basically exhibiting interest signals.

And what it does mathematically is to take those signals,

put it in a formula and then matches it through pattern recognition.

That's basically the idea behind it.
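The talk does not spell out the "formula" that turns these signals into recommendations. As a rough illustration only, the Python sketch below converts the implicit signals Shou Chew lists (watching, swiping away, liking, sharing) into a per-topic interest score with a weighted sum. The weights, the `Interaction` type, and the topic labels are all hypothetical assumptions, not TikTok's method.

```python
from dataclasses import dataclass

# Hypothetical weights: the talk says signals are "put in a formula"
# but does not say what the formula is. These numbers are illustrative only.
SIGNAL_WEIGHTS = {
    "watch_fraction": 1.0,  # share of the video actually watched (0.0 to 1.0)
    "liked": 2.0,
    "shared": 3.0,
    "swiped_away": -1.5,    # skipped quickly: a negative signal
}


@dataclass
class Interaction:
    topic: str
    watch_fraction: float
    liked: bool = False
    shared: bool = False
    swiped_away: bool = False


def interest_profile(history: list) -> dict:
    """Sum weighted implicit signals per topic; no questionnaire is needed."""
    profile = {}
    for event in history:
        # bools multiply as 0 or 1, so absent signals contribute nothing
        score = (
            SIGNAL_WEIGHTS["watch_fraction"] * event.watch_fraction
            + SIGNAL_WEIGHTS["liked"] * event.liked
            + SIGNAL_WEIGHTS["shared"] * event.shared
            + SIGNAL_WEIGHTS["swiped_away"] * event.swiped_away
        )
        profile[event.topic] = profile.get(event.topic, 0.0) + score
    return profile


if __name__ == "__main__":
    history = [
        Interaction("cooking", 1.0, liked=True),
        Interaction("cooking", 0.8, shared=True),
        Interaction("golf", 0.1, swiped_away=True),
    ]
    profile = interest_profile(history)
    print(max(profile, key=profile.get))  # cooking
```

A hand-weighted sum keeps the example readable; a real recommender would more plausibly learn how much each signal matters from data rather than hard-coding it.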

CA: I mean, lots of start-ups have tried to use these types of techniques.

I'm wondering what else played a role early on?

I mean, how big a deal was it,

that from the get-go you were optimizing for smartphones

so that videos were shot in portrait format

and they were short.

Was that an early distinguishing thing that mattered?

SC: I think we were the first to really try this at scale.

You know, the recommendation algorithm is a very important reason

as to why the platform is so popular among so many people.

But beyond that, you know, you mentioned the format itself.

So we talked about the vision of the company,

which is to have a window to discover.

And if you just open the app for the first time,

you'll see that it takes up your whole screen.

So that's the window that we want.

You can imagine a lot of people using that window

to discover new things in their lives.

Then, you know, through this recommendation algorithm,

we have found that it connects people together.

People find communities,

and I've heard so many stories of people who have found their communities

because of the content that they're posting.

Now, I'll give you an example.

I was in DC recently, and I met with a bunch of creators.

CA: I heard.

(Laughter)

SC: One of them was sitting next to me at a dinner,

his name is Samuel.

He runs a restaurant in Phoenix, Arizona, and it's a taco restaurant.

He told me he has never done this before, first venture.

He started posting all this content on TikTok,

and I saw his content,

I was hungry after looking at it, it's great content.

And he's generated so much interest in his business,

that last year he made something like a million dollars in revenue

just via TikTok.

One restaurant.

And again and again, I hear these stories,

you know, by connecting people together,

by giving people the window to discover,

we have given many small businesses and many people, your common person,

a voice that they will never otherwise have.

And I think that's the power of the platform.

CA: So you definitely have identified early

just how we're social creatures, we need affirmation.

I've heard a story,

and you can tell me whether true or not,

that one of the keys to your early liftoff

was that you wanted to persuade creators who were trying out TikTok

that this was a platform where they would get response,

early on, when you're trying to grow something,

the numbers aren't there for response.

So you had the brilliant idea of goosing those numbers a bit,

basically finding ways to give people, you know,

a bigger sense of like, more likes,

more engagement than was actually the case,

by using AI agents somehow in the process.

Is that a brilliant idea, or is that just a myth?

SC: I would describe it in a different way.

So there are other platforms that exist before TikTok.

And if you think about those platforms,

you sort of have to be famous already in order to get followers.

Because the way it’s built is that people come and follow people.

And if you aren't already famous,

the chances that you get discovered are very, very low.

Now, what we have done, again,

because of the difference in the way we're recommending content,

is that we have given anyone,

any single person with enough talent a stage to be able to be discovered.

And I think that actually is the single, probably the most important thing

contributing to the growth of the platform.

And again and again, you will hear stories from people who use the platform,

who post regularly on it,

that if they have something they want to say,

the platform gives them the chance and the stage

to connect with their audience

in a way that I think no other product in the past has ever offered them.

CA: So I'm just trying to play back what you said there.

You said you were describing a different way what I said.

Is it then the case that like, to give someone a decent chance,

someone who's brilliant but doesn't come with any followers initially,

that you've got some technique to identify talent

and that you will almost encourage them,

you will give them some kind of, you know,

artificially increase the number of followers or likes

or whatever that they have,

so that others are encouraged to go,

"Wow, there's something there."

Like it's this idea of critical mass that kind of, every entrepreneur,

every party planner kind of knows about of

"No, no, this is the hot place in town, everyone come,"

and that that is how you actually gain critical mass?

SC: We want to make sure that every person who posts a video

is given an equal chance to be able to have some audience to begin with.

But this idea that you are maybe alluding to,

that we can get people to like something,

it doesn't really work like that.

CA: Could you get AI agents to like something?

Could you seed the network with extra AI agents that could kind of, you know,

give someone early encouragement?

SC: Ultimately, what the machine does is it recognizes people's interests.

So if you post something that's not interesting to a lot of people,

even if you gave it a lot of exposure,

you're not going to get the virality that you want.

So it's a lot of ...

There is no push here.

It's not like you can go and push something,

because I like Chris, I'm going to push your content,

it doesn't work like that.

You've got to have a message that resonates with people,

and if it does,

then it will automatically just have the virality itself.

That's the beauty of user-generated content.

It's not something that can be engineered or over-thought.

It really is something that has to resonate with the audience.

And if it does, then it goes viral.

CA: Speaking privately with an investor who knows your company quite well,

who said that actually the level of sophistication

of the algorithms you have going

is just another order of magnitude

to what competitors like, you know, Facebook or YouTube have going.

Is that just hype or do you really believe you --

like, how complex are these algorithms?

SC: Well, I think in terms of complexity,

there are many companies who have a lot of resources

and a lot of talent.

They will figure out even the most complex algorithms.

I think what is very different is your mission of your company,

how you started the company.

Like I said, you know, we started with this idea

that this was the main use case.

The most important use case is you come and you get to see recommended content.

Now for some other apps out there,

they are very significant and have a lot of users,

they are built for a different original purpose.

And if you are built for something different,

then your users are used to that

because the community comes in and they expect that sort of experience.

So I think the pivot away from that

is not really just a matter of engineering and algorithms,

it’s a matter of what your company is built to begin with.

Which is why I started this by saying you need to have a vision,

you need to have a mission, and that's the North Star.

You can't just shift it halfway.

CA: Right.

And is it fair to say

that because your start point has been interest algorithms

rather than social graph algorithms,

you've been able to avoid some of the worst of the sort of,

the filter bubbles that have happened in other social media

where you have tribes kind of declaring war on each other effectively.

And so much of the noise and energy is around that.

Do you believe that you've largely avoided that on TikTok?

SC: The diversity of content that our users see is very key.

You know, in order for the discovery -- the mission is to discover --

sorry, the vision is to discover.

So in order to facilitate that,

it is very important to us

that what the users see is a diversity of content.

Now, generally speaking, you know,

there are certain issues that you mentioned

that the industry faces, you know.

There are some bad actors who come on the internet,

they post bad content.

Now our approach is that we have very clear community guidelines.

We're very transparent about what is allowed

and what is not allowed on our platform.

No executives make any ad hoc decisions.

And based on that,

we have built a team that is tens of thousands of people plus machines

in order to identify content that is bad

and actively and proactively remove it from the platform.

CA: Talk about what some of those key guidelines are.

SC: We have it published on our website.

In March, we just iterated a new version to make it more readable.

So there are many things like, for example, no pornography,

clearly no child sexual abuse material and other bad things,

no violence, for example.

We also make it clear that it's a differentiated experience

if you're below 18 years old.

So if you're below 18 years old, for example,

your entire app experience is actually more restricted.

We don't allow, as an example,

users below 16, by default, to go viral.

We don't allow that.

If you're below 16,

we don’t allow you to use the instant messaging feature in app.

If you’re below 18, we don’t allow you to use the livestreaming features.

And of course, we give parents a whole set of tools

to control their teenagers’ experience as well.
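The age-based defaults described above amount to a small policy table. The Python sketch below encodes only the thresholds stated in this part of the talk: under 16, videos are not eligible to go viral by default and in-app messaging is off; under 18, livestreaming is off. The `FeatureAccess` type and `features_for_age` function are hypothetical, and the sketch does not model parental controls, the separate US under-13 experience, or regional differences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureAccess:
    eligible_to_go_viral_by_default: bool
    can_use_direct_messages: bool
    can_livestream: bool


def features_for_age(age: int) -> FeatureAccess:
    """Map a user's stated age to default feature access (illustrative only)."""
    return FeatureAccess(
        eligible_to_go_viral_by_default=age >= 16,  # "users below 16, by default" cannot go viral
        can_use_direct_messages=age >= 16,          # no in-app instant messaging below 16
        can_livestream=age >= 18,                   # no livestreaming below 18
    )


if __name__ == "__main__":
    print(features_for_age(17))
```

Keeping the rules in one pure function of age makes them easy to audit, though in practice the input is only as reliable as the age check discussed next.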

CA: How do you know the age of your users?

SC: In our industry, we rely mainly on something called age gating,

which is when you sign up for the app for the first time

and we ask you for the age.

Now, beyond that,

we also have built tools to go through your public profile for example,

when you post a video,

we try to match the age that you said with the video that you just posted.

Now, there are questions of can we do more?

And the question always has, for every company, by the way,

in our industry, has to be balanced with privacy.

Now, if, for example, we scan the faces of every single user,

then we will significantly increase the ability to tell their age.

But we will also significantly increase the amount of data

that we collect on you.

Now, we don't want to collect data.

We don't want to scan data on your face to collect that.

So that balance has to be maintained,

and it's a challenge that we are working through

together with industry, together with the regulators as well.

CA: So look, one thing that is unquestionable

is that you have created a platform for literally millions of people

who never thought they were going to be a content creator.

You've given them an audience.

I'd actually like to hear from you one other favorite example

of someone who TikTok has given an audience to

that never had that before.

SC: So when again,

when I travel around the world,

I meet with a whole bunch of creators on our platform.

I was in South Korea just yesterday, and before that I met with -- yes,

before that I met with a bunch of --

People don't expect, for example, teachers.

There is an English teacher from Arkansas.

Her name is Claudine, and I met her in person.

She uses our platform to reach out to students.

There is another teacher called Chemical Kim.

And Chemical Kim teaches chemistry.

What she does is she uses our platform

to reach out to a much broader student base

than she has in her classroom.

And they're both very, very popular.

You know, in fact,

what we have realized is that STEM content

has over 116 billion views on our platform globally.

And it's so significant --

CA: In a year?

SC: Cumulatively.

CA: [116] billion.

SC: It's so significant, that in the US we have started testing,

creating a feed just for STEM content.

Just for STEM content.

I’ve been using it for a while, and I learned something new.

You want to know what it is?

Apparently if you flip an egg on your tray,

the egg will last longer.

It's science,

there’s a whole video on this, I learned this on TikTok.

You can search for this.

CA: You want to know something else about an egg?

If you put it in just one hand and squeeze it as hard as you can,

it will never break.

SC: Yes, I think I read about that, too.

CA: It's not true.

(Laughter)

SC: We can search for it.

CA: But look, here's the flip side to all this amazingness.

And honestly, this is the key thing,

that I want to have an honest, heart-to-heart conversation with you

because it's such an important issue,

this question of human addiction.

You know, we are ...

animals with a prefrontal cortex.

That's how I think of us.

We have these addictive instincts that go back millions of years,

and we often are in the mode of trying to modulate our own behavior.

It turns out that the internet is incredibly good

at activating our animal cells

and getting them so damn excited.

And your company, the company you've built,

is better at it than any other company on the planet, I think.

So what are the risks of this?

I mean, how ...

From a company point of view, for example,

it's in your interest to have people on there as long as possible.

So some would say, as a first pass,

you want people to be addicted as long as possible.

That's how advertising money will flow and so forth,

and that's how your creators will be delighted.

What is too much?

SC: I don't actually agree with that.

You know, as a company,

our goal is not to optimize and maximize time spent.

It is not.

In fact, in order to address people spending too much time on our platform,

we have done a number of things.

I was just speaking with some of your colleagues backstage.

One of them told me she has encountered this as well.

If you spend too much time on our platform,

we will proactively send you videos to tell you to get off the platform.

We will.

And depending on the time of the day,

if it's late at night, it will come sooner.

We have also built in tools to limit,

if you're below 18 years old, by default,

we set a 60-minute default time limit.

CA: How many?

SC: Sixty minutes.

And we've given parents tools and yourself tools,

if you go to settings, you can set your own time limit.

We've given parents tools so that you can pair,

for the parents who don't know this, go to settings, family pairing,

you can pair your phone with your teenager's phone

and set the time limit.

And we really encourage parents to have these conversations with their teenagers

on what is the right amount of screen time.

I think there’s a healthy relationship that you should have with your screen,

and as a business, we believe that that balance needs to be met.

So it's not true that we just want to maximize time spent.
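The screen-time controls described here combine two mechanisms: break-reminder videos that arrive sooner late at night, and a daily limit that defaults to 60 minutes for users under 18 and can be changed in settings or through Family Pairing. The Python sketch below models both. Only the 60-minute teen default comes from the talk; the 90- and 30-minute reminder thresholds, the 22:00 to 06:00 late-night window, and the rule that a parent's limit overrides the teen's own setting are hypothetical assumptions.

```python
from datetime import time
from typing import Optional

# From the talk: default daily limit for users under 18.
DEFAULT_TEEN_LIMIT_MIN = 60

# Hypothetical values: the talk only says reminders come "sooner" late at night.
DAYTIME_REMINDER_MIN = 90
LATE_NIGHT_REMINDER_MIN = 30
LATE_NIGHT_START = time(22, 0)
LATE_NIGHT_END = time(6, 0)


def is_late_night(now: time) -> bool:
    """True inside the (hypothetical) late-night window, which wraps past midnight."""
    return now >= LATE_NIGHT_START or now < LATE_NIGHT_END


def should_show_break_reminder(minutes_in_session: int, now: time) -> bool:
    """Show a 'take a break' video once the session passes this time of day's threshold."""
    threshold = LATE_NIGHT_REMINDER_MIN if is_late_night(now) else DAYTIME_REMINDER_MIN
    return minutes_in_session >= threshold


def daily_limit_minutes(
    age: int,
    user_limit: Optional[int] = None,
    parent_limit: Optional[int] = None,
) -> Optional[int]:
    """Return the daily limit in minutes, or None if no limit applies.

    Precedence (an assumption): Family Pairing limit, then the user's
    own setting, then the under-18 default.
    """
    if parent_limit is not None:
        return parent_limit
    if user_limit is not None:
        return user_limit
    if age < 18:
        return DEFAULT_TEEN_LIMIT_MIN
    return None
```

For example, `daily_limit_minutes(15)` falls back to the 60-minute default, while an adult with no settings has no limit at all.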

CA: If you were advising parents here

what time they should actually recommend to their teenagers,

what do you think is the right setting?

SC: Well, 60 minutes,

we did not come up with it ourselves.

So I went to the Digital Wellness Lab at the Boston Children's Hospital,

and we had this conversation with them.

And 60 minutes was the recommendation that they gave to us,

which is why we built this into the app.

So 60 minutes, take it for what it is,

it’s something that we’ve had some discussions with experts on.

But I think for all parents here,

it is very important to have these conversations with your teenage children

and help them develop a healthy relationship with screens.

I think we live in an age where it's completely inevitable

that we're going to interact with screens and digital content,

but I think we should develop healthy habits early on in life,

and that's something I would encourage.

CA: Curious to ask the audience,

which of you who have ever had that video on TikTok appear

saying, “Come off.”

OK, I mean ...

So maybe a third of the audience seem to be active TikTok users,

and about 20 people maybe put their hands up there.

Are you sure that --

like, it feels to me like this is a great thing to have,

but are you ...

isn't there always going to be a temptation

in any given quarter or whatever,

to just push it a bit at the boundary

and just dial back a bit on that

so that you can hit revenue goals, etc?

Are you saying that this is used scrupulously?

SC: I think, you know, in terms ...

Even if you think about it from a commercial point of view,

it is always best when your customers have a very healthy relationship

with your product.

It's always best when it's healthy.

So if you think about very short-term retention, maybe,

but that's not the way we think about it.

If you think about it from a longer-term perspective,

what you really want to have is a healthy relationship, you know.

You don’t want people to develop very unhealthy habits,

and then at some point they're going to drop it.

So I think everything in moderation.

CA: There's a claim out there that in China,

there are much more rigorous standards imposed on the amount of time

that children, especially, can spend on the TikTok equivalent of that.

SC: That is unfortunately a misconception.

So that experience that is being mentioned for Douyin,

which is a different app,

is for an under 14-year-old experience.

Now, if you compare that in the United States,

we have an under-13 experience in the US.

It's only available in the US, it's not available here in Canada,

in Canada, we just don't allow it.

If you look at the under-13 experience in the US,

it's much more restricted than the under-14 experience in China.

It's so restrictive,

that every single piece of content is vetted

by our third-party child safety expert.

And we don't allow any under-13s in the US to publish,

we don’t allow them to post,

and we don't allow them to use a lot of features.

So I think that that report, I've seen that report too,

it's not doing a fair comparison.

CA: What do you make of this issue?

You know, you've got these millions of content creators

and all of them, in a sense, are in a race for attention,

and that race can pull them in certain directions.

So, for example, teenage girls on TikTok,

sometimes people worry that, to win attention,

they've discovered that by being more sexual

that they can gain extra viewers.

Is this a concern?

Is there anything you can do about this?

SC: We address this in our community guidelines as well.

You know, if you look at sort of the sexualized content on our guidelines,

if you’re below a certain age,

you know, for certain themes that are mature,

we actually remove that from your experience.

Again, I come back to this,

you know, we want to have a safe platform.

In fact, at my congressional hearing,

I made four commitments to our users and to the politicians in the US.

And the first one is that we take safety, especially for teenagers,

extremely seriously,

and we will continue to prioritize that.

You know, I believe that we need to give our teenage users,

and our users in general,

a very safe experience,

because if we don't do that,

then we cannot fulfill --

the mission is to inspire creativity and to bring joy.

If they don't feel safe, I cannot fulfill my mission.

So it's all very organic to me as a business

to make sure I do that.

CA: But in the strange interacting world of human psychology and so forth,

weird memes can take off.

I mean, you had this outbreak a couple years back

with these devious licks where kids were competing with each other

to do vandalism in schools and, you know,

get lots of followers from it.

How on Earth do you battle something like that?

SC: So dangerous challenges are not allowed on our platform.

If you look at our guidelines, it's violative.

We proactively invest resources to identify them

and remove them from our platform.

In fact, if you search for dangerous challenges on our platform today,

we will redirect you to a safety resource page.

And we actually worked with some creators as well to come up with campaigns.

This is another campaign.

It's the "Stop, Think, Decide Before You Act" campaign

where we work with the creators to produce videos,

to explain to people that some things are dangerous,

please don't do it.

And we post these videos actively on our platform as well.

CA: That's cool.

And you've got lots of employees.

I mean, how many employees do you have

who are specifically looking at these content moderation things,

or is that the wrong question?

Are they mostly identified by AI initially

and then you have a group who are overseeing

and making the final decision?

SC: The group is based in Ireland and it's a lot of people,

it's tens of thousands of people.

CA: Tens of thousands?

SC: It's one of the most important cost items on my P&L,

and I think it's completely worth it.

Now, most of the moderation has to be done by machines.

The machines are good, they're quite good,

but they're not as good as, you know,

they're not perfect at this point.

So you have to complement them with a lot of human beings today.

And I think, by the way, a lot of the progress in AI in general

is making those kinds of content moderation capabilities a lot better.

So we're going to get more precise.

You know, we’re going to get more specific.

And it’s going to be able to handle larger scale.

And that's something I think that I'm personally looking forward to.

CA: What about this perceived huge downside

of use of, certainly Instagram, I think TikTok as well.

What people worry is that you are amplifying insecurities,

especially of teenagers

and perhaps especially of teenage girls.

They see these amazing people on there doing amazing things,

they feel inadequate,

there's all these reported cases of depression, insecurity,

suicide and so forth.

SC: I take this extremely seriously.

So in our guidelines,

for certain themes that we think are mature and not suitable for teenagers,

we actually proactively remove it from their experience.

At the same time, if you search certain terms,

we will make sure that you get redirected to a resource safety page.

Now we are always working with experts to understand some of these new trends

that could emerge

and proactively try to manage them, if that makes sense.

Now, this is a problem that predates us,

that predates TikTok.

It actually predates the internet.

But it's our responsibility to make sure

that we invest enough to understand and to address the concerns,

to keep the experience as safe as possible

for as many people as possible.

CA: Now, in Congress,

the main concern seemed to be not so much what we've talked about,

but data, the data of users,

the fact that you're owned by ByteDance, a Chinese company,

and the concern that at any moment

the Chinese government might require or ask for data.

And in fact, there have been instances

where, I think you've confirmed,

that some data of journalists on the platform

was made available to ByteDance's engineers

and from there, who knows what.

Now, your response to this was to have this Project Texas,

where you're moving data to be controlled by Oracle here in the US.

Can you talk about that project and why, if you believe it so,

why we should not worry so much about this issue?

SC: I will say a couple of things about this, if you don't mind.

The first thing I would say is that the internet is built

on global interoperability,

and we are not the only company that relies on the global talent pool

to make our products as good as possible.

Technology is a very collaborative effort.

I think many people here would say the same thing.

So we are not the first company to have engineers in all countries,

including in China.

We're not the first one.

Now, I understand some of these concerns.

You know, the data access by employees is not data accessed by government.

This is very different, and there’s a clear difference in this.

But we hear the concerns that are raised in the United States.

We did not try to avoid discussing them.

We did not try to argue our way out of it.

What we did was we built an unprecedented project

where we localize American data to be stored on American soil

by an American company overseen by American personnel.

So this kind of protection for American data

is beyond what any other company in our industry has ever done.

Well, money is not the only issue here,

but it's very expensive to build something like that.

And more importantly, you know,

we are basically localizing data in a way that no other company has done.

So we need to be very careful that whilst we are pursuing

what we call digital sovereignty in the US

and we are also doing a version of this in Europe,

that we don't balkanize the internet.

Now we are the first to do it.

And I expect that, you know,

other companies are probably looking at this

and trying to figure out how you balance protecting data,

you know, to make sure that everybody feels secure about it

while at the same time allowing for interoperability

to continue to happen,

because that's what makes technology and the internet so great.

So that's something that we are doing.

CA: How far are you along that journey with Project Texas?

SC: We are very, very far along today.

CA: When will there be a clear, you know,

here it is, it’s done, it’s firewalled, this data is protected?

SC: Today, by default, all new US data

is already stored in the Oracle cloud infrastructure.

So it's in this protected US environment that we talked about in the United States.

We still have some legacy data to delete in our own servers in Virginia

and in Singapore.

Our data has never been stored in China, by the way.

That deletion is a very big engineering effort.

So as we said, as I said at the hearing,

it's going to take us a while to delete them,

but I expect it to be done this year.

CA: How much power do you have

over your own ability to control certain things?

So, for example, suppose that, for whatever reason,

the Chinese government was to look at an upcoming US election and say,

"You know what, we would like this party to win," let's say,

or "We would like civil war to break out" or whatever.

How ...

"And we could do this

by amplifying the content of certain troublemaking, disturbing people,

causing uncertainty, spreading misinformation," etc.

If you were required via ByteDance to do this,

like, first of all, is there a pathway where theoretically that is possible?

What's your personal line in the sand on this?

SC: So during the congressional hearing,

I made four commitments,

we talked about the first one, which is safety.

The third one is to keep TikTok a place of freedom of expression.

By the way, if you go on TikTok today,

you can search for anything you want,

as long as it doesn't violate our community guidelines.

And to keep it free from any government manipulation.

And the fourth one is transparency and third-party monitoring.

So the way we are trying to address this concern

is an unprecedented amount of transparency.

What do I mean by this?

We're actually allowing third-party reviewers

to come in and review our source code.

I don't know any other company that does this, by the way.

Because everything, as you know, is driven by code.

So to allow someone else to review the source code

is to give this a significant amount of transparency

to ensure that the scenarios that you described

that are highly hypothetical, cannot happen on our platform.

Now, at the same time,

we are releasing more research tools for researchers

so that they can study the output.

So the source code is the input.

We are also allowing researchers to study the output,

which is the content on our platform.

I think the easiest way to sort of fend this off is transparency.

You know, we give people access to monitor us,

and we just make it very, very transparent.

And that's our approach to the problem.

CA: So you will say directly to this group

that the scenario I talked about,

of theoretical Chinese government interference in an American election,

you can say that will not happen?

SC: I can say that we are building all the tools

to prevent any of these actions from happening.

And I'm very confident that with an unprecedented amount of transparency

that we're giving on the platform,

we can reduce this risk to as low as zero as possible.

CA: To as low as zero as possible.

SC: To as close to zero as possible.

CA: As close to zero as possible.

That's fairly reassuring.

Fairly.

(Laughter)

I mean, how would the world know?

If you discovered this or you thought you had to do it,

is this a line in the sand for you?

Like, are you in a situation you would not let the company that you know now

and that you are running do this?

SC: Absolutely.

That's the reason why we're letting third parties monitor,

because if they find out, you know, they will disclose this.

We also have transparency reports, by the way,

where we talk about a whole bunch of things,

the content that we remove, you know, that violates our guidelines,

government requests.

You know, it's all published online.

All you have to do is search for it.

CA: So you're super compelling

and likable as a CEO, I have to say.

And I would like to, as we wrap this up,

I'd like to give you a chance just to paint, like, what's the vision?

As you look at what TikTok could be,

let's move the clock out, say, five years from now.

How should we think about your contribution to our collective future?

SC: I think it's still down to the vision that we have.

So in terms of the window of discovery,

I think there's a huge benefit to the world

when people can discover new things.

You know, people think that TikTok is all about dancing and singing,

and there’s nothing wrong with that, because it’s super fun.

There's still a lot of that,

but we're seeing science content, STEM content,

have you heard about BookTok?

It's a viral trend that talks about books

and encourages people to read.

That BookTok has 120 billion views globally,

120 billion.

CA: Billion, with a B.

SC: People are learning how to cook,

people are learning about science,

people are learning how to golf --

well, people are watching videos on golfing, I guess.

(Laughter)

I haven't gotten better by looking at the videos.

I think there's a huge, huge opportunity here on discovery

and giving the everyday person a voice.

If you talk to our creators, you know,

a lot of people will tell you this again and again, that before TikTok,

they would never have been discovered.

And we have given them the platform to do that.

And it's important to maintain that.

Then we talk about creation.

You know, there’s all this new technology coming in with AI-generated content

that will help people create even more creative content.

I think there's going to be a collaboration between,

and I think there's a speaker who is going to talk about this,

between people and AI

where they can unleash their creativity in a different way.

You know, like for example, I'm terrible at drawing personally,

but if I had some AI to help me,

then maybe I can express myself even better.

Then we talk about bridges to connect

and connecting people and the communities together.

This could be products, this could be commerce,

five million businesses in the US benefit from TikTok today.

I think we can get that number to a much higher number.

And of course, if you look around the world, including in Canada,

that number is going to be massive.

So I think these are the biggest opportunities that we have,

and it's really very exciting.

CA: So courtesy of your experience in Congress,

you actually became a bit of a TikTok star yourself, I think.

Some of your videos have gone viral.

You've got your phone with you.

Do you want to make a little TikTok video right now?

Let's do this.

SC: If you don't mind ...

CA: What do you think, should we do this?

SC: We're just going to do a selfie together, how's that?

So why don't we just say "Hi."

Hi!

Audience: Hi!

CA: Hello from TED.

SC: All right, thank you, I hope it goes viral.

(Laughter)

CA: If that one goes viral, I think I've given up on your algorithm, actually.

(Laughter)

Shou Chew, you're one of the most influential

and powerful people in the world, whether you know it or not.

And I really appreciate you coming and sharing your vision.

I really, really hope the upside of what you're talking about comes about.

Thank you so much for coming today.

SC: Thank you, Chris.

CA: It's really interesting.

(Applause)

5.0 / 5 (0 votes)

美国为什么要不断立法,坚持封杀TikTok?|字节跳动|TikTok|周受资|bytedance|中美|川普|拜登|王局拍案20240319

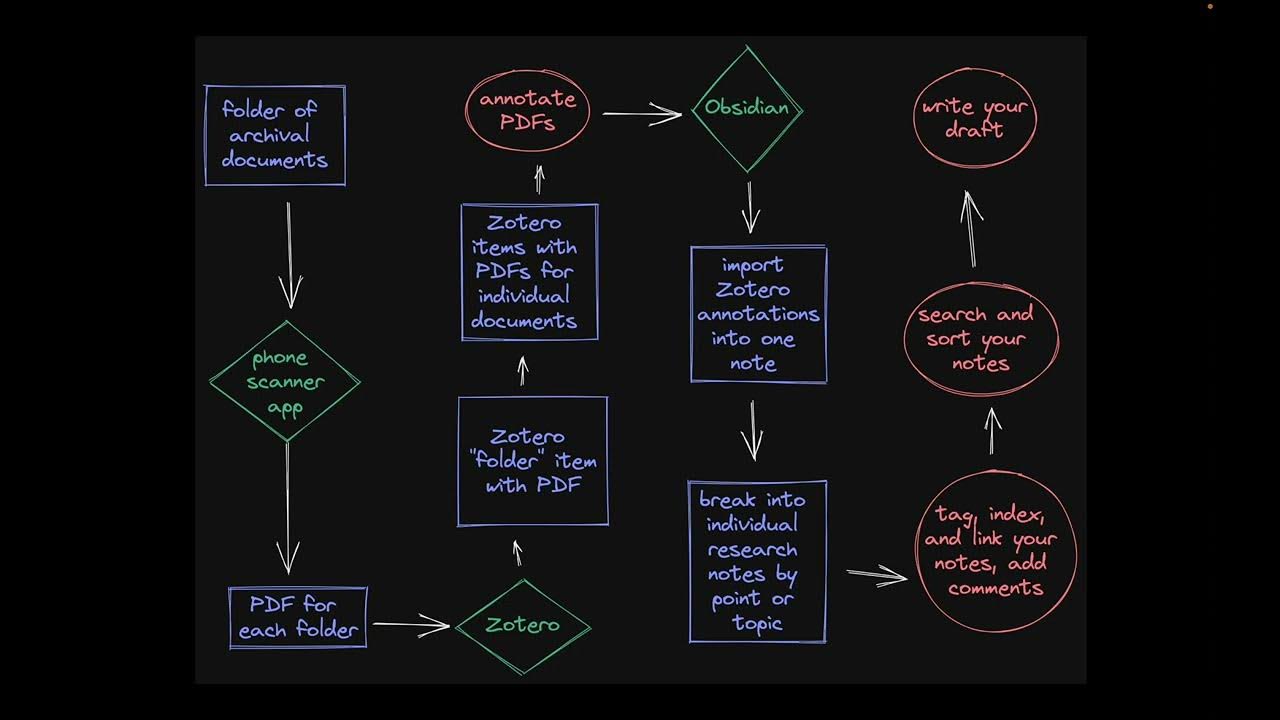

Doing History with Zotero and Obsidian: Archival Research

Website Design Process for Clients (Start to Finish)

Risk-Based Alerting (RBA) for Splunk Enterprise Security Explained—Bite-Size Webinar Series (Part 3)

Best FREE VPN for Windows 2024 💸 TOP 4 Free VPN for PC options! (TESTED)

37 Academic Research