🔴 WATCH LIVE: NVIDIA GTC 2024 Keynote - The Future Of AI!

Summary

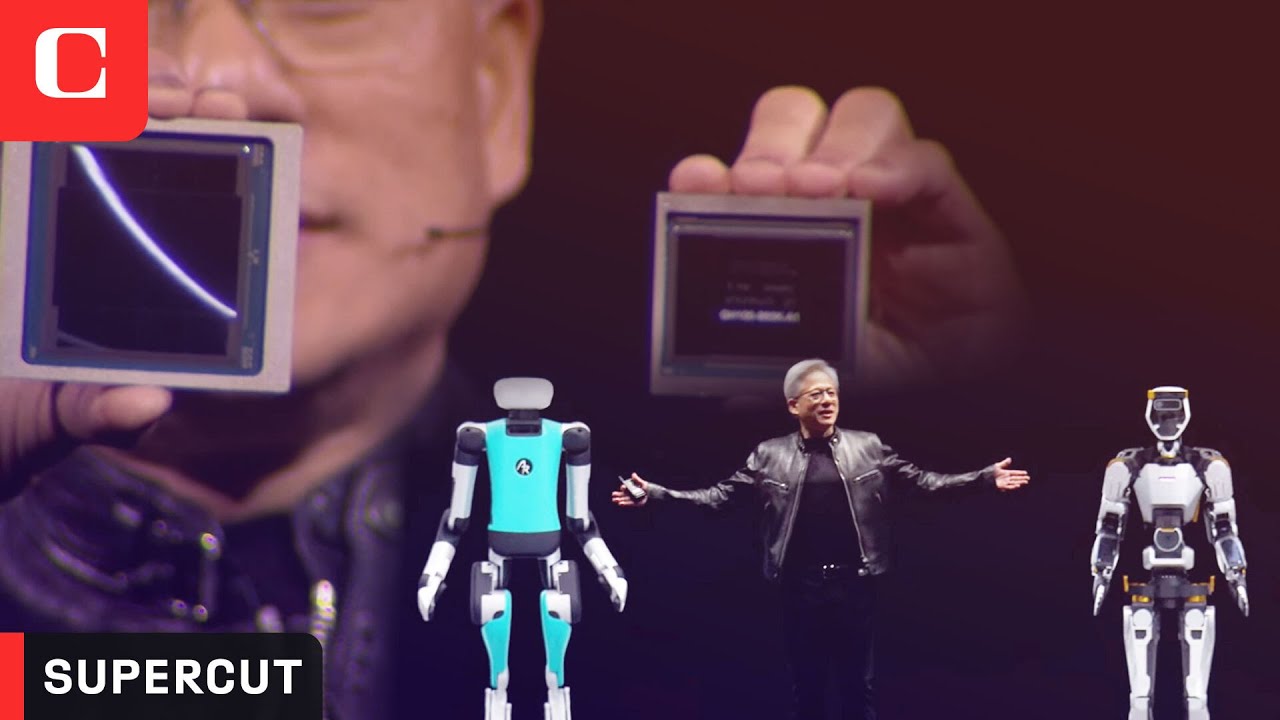

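TLDR: At GTC, Nvidia showcased its latest advances in artificial intelligence, high-performance computing, and robotics. It introduced the new Blackwell GPU platform, advanced networking and system designs, and explained how generative AI creates new value and new kinds of software. Nvidia also highlighted how its Omniverse digital-twin platform is used in robotics and how AI is driving a new industrial revolution.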

Takeaways

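- 🚀 We are on the verge of a new industrial revolution, driven by the modernization of data centers.

- 🌟 Dramatic increases in computing power gave rise to generative AI, which will create new categories of software and infrastructure.

- 🤖 In the future, everything that moves will be robotic, including self-driving cars, forklifts, and robotic arms.

- 💡 Nvidia is building a complete robotics technology stack, from AI training to real-world operation in the physical world.

- 🔌 Nvidia introduced Jetson Thor, a next-generation AI computer designed for robots.

- 🔄 Omniverse serves as the operating system of the robotics world, providing a digital-twin platform for simulating and training AI.

- 🧠 Nvidia's AI Foundry offers NIMs (Nvidia Inference Microservices), a new way of distributing software.

- 🔧 With Nvidia technologies and tools such as Isaac Sim and OSMO, robots can be trained and simulated in physically based environments.

- 🌐 Nvidia is working with industry leaders to integrate AI and Omniverse into their workflows, for example partnering with Siemens to build the industrial metaverse.

- 🛠️ Nvidia's Blackwell GPU platform and NVLink Switch showcase the company's latest achievements in accelerated computing and networking.

- 🎉 Nvidia is pushing the boundaries of AI and robotics, laying the foundation for future innovation and productivity gains.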

Q & A

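Who is Nvidia's founder and CEO?

-Nvidia's founder and CEO is Jensen Huang.

What were the key milestones in Nvidia's journey since 1993?

-Nvidia was founded in 1993. In 2006 it introduced CUDA, a revolutionary computing model; in 2012 AlexNet AI and CUDA made first contact; in 2016 it introduced the DGX-1, the world's first AI supercomputer; in 2017 the Transformer arrived; in 2022 ChatGPT captured the world's attention; and in 2023 generative AI emerged, marking the start of a new industry.

What is Nvidia's Blackwell platform?

-Blackwell is not a single chip but a platform: it includes multiple GPUs, the high-speed networking that connects them, and software and system architecture designed specifically for AI computing.

What are the features of Nvidia's NVLink Switch chip?

-The NVLink Switch chip has 50 billion transistors, almost the size of a Hopper chip. The switch has four NVLinks built in, each running at 1.8 TB per second, and it has computation embedded in the chip.

How does Nvidia drive AI adoption across industries?

-Nvidia provides powerful computing platforms and software tools, such as DGX supercomputers, the Jetson robotics computing platform, and the Omniverse digital-twin platform, and works with partners across industries to co-develop AI solutions for specific needs.

What is Nvidia's concept of an AI factory?

-The AI factory concept treats the data center as a production facility for AI whose goal is to generate intelligence rather than conventional electricity. AI factories focus on creating and running large AI models that generate content, analyze data, and support decision-making.

How does the Blackwell platform's performance compare with Hopper?

-Compared with Hopper, Blackwell delivers 2.5x the FP8 training performance per chip. It also introduces a new FP6 format: although the computation speed is the same, more parameters fit in memory, which effectively amplifies bandwidth. FP4 effectively doubles throughput, which is especially important for inference.

How does Nvidia help partners accelerate their ecosystems?

-Nvidia provides CUDA-accelerated software tools, the Omniverse digital-twin platform, and powerful computing platforms such as DGX and Jetson. It also co-develops industry-specific AI solutions with partners to drive the digital transformation of entire industries.

What are the applications of Nvidia's AI technology in healthcare?

-Applications include medical imaging, gene sequencing, and computational chemistry. Nvidia GPUs are the key computing power behind these fields, and Nvidia has also launched AI models and services built specifically for healthcare, supporting workloads such as protein structure prediction with AlphaFold (developed by Google DeepMind).

What kind of robots is Nvidia's Jetson Thor designed for?

-Jetson Thor is designed for the next generation of AI-driven robots, especially those that need to perceive, manipulate objects, and navigate autonomously in the physical world.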
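The FP6 and FP4 points in the Blackwell-versus-Hopper answer above are largely storage arithmetic: fewer bits per weight means more weights fit in the same memory and fewer bytes move per weight. The sketch below works that arithmetic for the 1.8-trillion-parameter model size quoted in the keynote. It counts only raw weight bits (real low-precision formats also store scale factors, and serving needs memory for activations and caches), so treat the numbers as illustrative lower bounds, not Blackwell specifications; the names `FORMAT_BITS` and `weight_bytes` are hypothetical.

```python
# Raw weight storage for a model at different numeric formats.
FORMAT_BITS = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_bytes(params: float, bits: int) -> float:
    """Bytes to hold `params` weights at `bits` bits each (no scale factors or other overhead)."""
    return params * bits / 8


params = 1.8e12  # the 1.8-trillion-parameter model size quoted in the keynote
for name, bits in FORMAT_BITS.items():
    tb = weight_bytes(params, bits) / 1e12
    rel = bits / FORMAT_BITS["FP8"]
    print(f"{name}: {tb:.2f} TB of weights, {rel:.2f}x the bytes moved per weight vs FP8")
```

Halving the bits per weight halves the bytes that must be read for each generated token, which is one plausible reading of "FP4 effectively doubles the throughput" for memory-bandwidth-bound inference; it is an interpretation, not a description of how Nvidia measured that figure.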

Outlines

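🎶 Prelude: music and laughter

The video opens with music and laughter in the background, setting a relaxed and cheerful mood.

🌌 Vision and revelation: witness to the galaxies

The second segment introduces the narrator (the AI voice of the opening video) as a visionary who, through music and the imagery of stars, describes its roles in understanding extreme weather events, guiding the blind, and acting as a helper.

🤖 Transformation and innovation: the bearer of power

In the third segment, the narrator becomes a transformer, using gravity to store renewable energy and leading us toward a future of unlimited clean energy. It is also a trainer, teaching robots to watch out for danger and help save lives.

🎭 Performance and creation: the birth of AI

The fourth segment describes how Nvidia brings AI to life through deep learning and brilliant minds. It ends with Nvidia founder and CEO Jensen Huang being welcomed to the stage, and he emphasizes the significance of the GTC conference.

🌐 Digital twins and industry transformation

The fifth segment emphasizes the broad use of digital-twin technology across industries, including life sciences, healthcare, transportation, and manufacturing. The speaker notes how these industries use accelerated computing to solve problems that conventional computers cannot.

🚀 A leap in computing: simulation and innovation

The sixth segment discusses the future of computing, including using simulation tools to create products and how digital twins can accelerate entire industries. The speaker announces partnerships with major companies to jointly accelerate their ecosystems.

🧠 The brain of AI: the evolution of the GPU

The seventh segment details the evolution of Nvidia's GPUs, from the early days of CUDA to the latest Blackwell platform. The speaker highlights Blackwell's innovations, including its inference capability for large AI models.

🔄 Networking and collaboration: the NVLink revolution

The eighth segment covers advances in NVLink and how it makes data transfer among multiple GPUs more efficient. The speaker describes its use in building large AI supercomputers.

🛠️ Reliability and security: safeguarding AI

The ninth segment emphasizes the Blackwell chip's reliability and security, including its self-test capability and encryption protection for AI. The speaker explains how these technologies keep AI secure and data confidential.

🚀 A leap in performance: Blackwell's excellence

In the tenth segment, the speaker details Blackwell's performance advantages, including significant gains in training and inference, and how new numerical formats and networking technologies improve efficiency.

🌟 Looking ahead: the rise of generative AI

The eleventh segment discusses the future of generative AI and how it changes the way we interact with computers. The speaker emphasizes generative AI's potential in content creation and personalized experiences.

🔥 Energy and efficiency: Blackwell's thermal efficiency

In the twelfth segment, the speaker discusses Blackwell's breakthroughs in energy efficiency, including lower energy consumption when training large AI models, and how Blackwell uses liquid cooling to manage heat.

🌐 Networking and connectivity: Blackwell's network architecture

The thirteenth segment highlights Blackwell's network architecture, including how NVLink and the NVLink Switch enable high-speed communication between GPUs, and why this architecture matters for computational efficiency.

🤖 Robotics and AI: the future of physical intelligence

In the fourteenth segment, the speaker looks ahead to applications of robotics and AI in the physical world, including autonomous vehicles and industrial automation, and explains how Nvidia's platforms support their development.

🌟 Collaboration and innovation: Nvidia's partners

The fifteenth segment discusses Nvidia's work with partners, including AWS, Google, Microsoft, and Oracle, to advance AI, and how these collaborations accelerate AI innovation and adoption.

🌍 Climate and forecasting: AI in climate science

In the sixteenth segment, the speaker describes how Nvidia uses AI to predict weather and climate change, and how this helps us better understand and respond to extreme weather events.

💊 Medicine and discovery: AI in drug development

The seventeenth segment discusses how Nvidia uses AI to accelerate drug discovery and medical diagnosis, including generative AI models for predicting protein structures and molecular docking.

🧠 Intelligent services: Nvidia's AI microservices

In the eighteenth segment, the speaker introduces Nvidia's AI microservices (NIMs): pre-trained models that are optimized and packaged to run on Nvidia hardware. He also explains how NIMs can be used to create and deploy AI applications.

🤖 The evolution of robots: Nvidia's robotics technology

The nineteenth segment discusses Nvidia's progress in robotics, including computing platforms for autonomous vehicles and Jetson chips for robots, and how AI improves robots' perception and manipulation.

🌐 Digital twins and collaboration: applications of Omniverse

In the twentieth segment, the speaker introduces Nvidia's Omniverse platform, a system for creating and simulating digital twins. He describes how Omniverse works with Siemens and its industrial engineering and operations platform, and how AI can raise industrial productivity.

🚀 The future of robots: Nvidia's robotics strategy

In the twenty-first segment, the speaker looks ahead to the future of robotics, including humanoid robots and automated systems, and how Nvidia's platforms support their development and deployment.

🎉 Summary and outlook: Nvidia's future direction

The final segment summarizes Nvidia's main achievements in AI, robotics, and digital twins and looks ahead to the company's future direction, emphasizing how its platforms drive the new industrial revolution and innovation.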


Keywords

💡Accelerated computing

💡Generative AI

💡Blackwell

💡Digital twins

💡Omniverse

💡Jetson

💡AI factory

💡Robotics

💡Nvidia AI Foundry

💡Isaac Sim

Highlights

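Nvidia's journey began in 1993 and passed several major milestones, including CUDA in 2006, the first contact between AlexNet AI and CUDA in 2012, and the DGX-1, the world's first AI supercomputer, in 2016.

The arrival of the Transformer in 2017 and the rise of ChatGPT in 2022 made people realize the importance and capabilities of artificial intelligence, heralding the birth of a new industry.

Omniverse, Nvidia's virtual-world simulation platform, combines computer graphics, physics, and artificial intelligence; Nvidia presents it as the soul of the company.

Nvidia announced partnerships with several major companies, including Ansys, Synopsys, and Cadence, to accelerate their ecosystems and connect them to Omniverse digital twins.

Nvidia introduced Blackwell, a new GPU platform designed to drive generative AI, with 208 billion transistors and a 10 TB/s link between its two dies.

Nvidia's Jetson AGX is a processor for autonomous systems that provides low-power, high-speed sensor processing and AI, suited to mobile applications such as autonomous vehicles.

Nvidia's OVX computers run Omniverse and are the foundation for building digital twins and simulation environments, supporting robot learning and physical feedback.

Nvidia's AI Foundry provides enterprises with AI models, tools, and infrastructure to help them create and deploy custom AI applications and services.

Nvidia is working with Siemens to integrate Siemens' industrial engineering and operations platform with Omniverse, improving the efficiency of industrial-scale design and manufacturing projects.

Nvidia's Isaac Perceptor SDK gives robots advanced visual odometry, 3D reconstruction, and depth perception, allowing them to adapt to their environment and navigate autonomously.

Nvidia's Thor is a next-generation computer for autonomous vehicles, designed for Transformer engines, and will be adopted by BYD.

Nvidia's Project GR00T is a general-purpose foundation model for humanoid robots that learns by watching videos, imitating human movements, and performing everyday tasks.

Nvidia's Jetson Thor robotics chip provides powerful computing for future AI-driven robots, supported by Isaac Lab, OSMO, and GR00T.

Nvidia's Omniverse Cloud APIs let developers easily connect their applications to digital-twin capabilities.

Nvidia's AI factory concept anticipates that future data centers will be viewed as AI factories whose goal is to generate intelligence rather than electricity.

Nvidia's NIMs (Nvidia Inference Microservices) are a new way of distributing software that lets users download and run pre-trained models packaged as a "digital box".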

Transcripts

[Music]

Our show is about to begin.

[Music]

I am a visionary, illuminating galaxies to witness the birth of stars and sharpening our understanding of extreme weather events.

I am a helper, guiding the blind through a crowded world ("I was thinking about running to the store") and giving voice to those who cannot speak ("Do not make me laugh, love").

I am a Transformer, harnessing gravity to store renewable power and paving the way towards unlimited clean energy for us all.

I am a trainer, teaching robots to assist, to watch out for danger, and help save lives.

I am a healer, providing a new generation of cures and new levels of patient care. "Doctor, I am allergic to penicillin. Is it still okay to take the medication?" "Definitely. These antibiotics don't contain penicillin, so it's perfectly safe for you to take them."

I am a navigator, generating virtual scenarios to let us safely explore the real world and understand every decision.

I even help write the script and breathe life into the words.

I am AI, brought to life by Nvidia, deep learning, and brilliant minds everywhere.

[Music]

Please welcome to the stage Nvidia founder and CEO Jensen Huang.

Welcome to GTC!

[Music] [Applause]

I hope you realize this is not a concert. You have arrived at a developers conference. There will be a lot of science described: algorithms, computer architecture, mathematics. I sensed a very heavy weight in the room all of a sudden, almost like you were in the wrong place.

No, no. In no conference in the world is there a greater assembly of researchers from such diverse fields of science: from climate tech to radio scientists trying to figure out how to use AI to robotically control MIMOs for next-generation 6G radios, robotic self-driving cars, even artificial intelligence. Even artificial intelligence. Everybody's first... I noticed a sense of relief there all of a sudden.

Also, this conference is represented by some amazing companies. This list: this is not the attendees, these are the presenters. And what's amazing is this. If you take away all of my friends, close friends (Michael Dell is sitting right there) in the IT industry, all of the friends I grew up with in the industry, if you take away that list, this is what's amazing: these are the presenters of the non-IT industries using accelerated computing to solve problems that normal computers can't. It's represented in life sciences, healthcare, genomics, transportation, of course retail, logistics, manufacturing, industrial. The gamut of industries represented is truly amazing, and you're not here only to attend; you're here to present, to talk about your research. $100 trillion of the world's industries is represented in this room today. This is absolutely amazing.

There is absolutely something happening. There is something going on. The industry is being transformed, not just ours, because the computer industry, the computer, is the single most important instrument of society today. Fundamental transformations in computing affect every industry.

But how did we start? How did we get here? I made a little cartoon for you. Literally, I drew this on one page. This is Nvidia's journey, started in 1993. This might be the rest of the talk.

1993: this is our journey. We were founded in 1993. There are several important events that happened along the way; I'll just highlight a few. In 2006, CUDA, which has turned out to have been a revolutionary computing model. We thought it was revolutionary then; it was going to be an overnight success, and almost 20 years later it happened. We saw it coming, two decades later.

In 2012, AlexNet AI and CUDA made first contact.

In 2016, recognizing the importance of this computing model, we invented a brand-new type of computer we call the DGX-1: 170 teraflops in this supercomputer, eight GPUs connected together for the very first time. I hand-delivered the very first DGX-1 to a startup located in San Francisco called OpenAI. DGX-1 was the world's first AI supercomputer. Remember: 170 teraflops.

2017, the Transformer arrived. 2022, ChatGPT captured the world's imagination and had people realize the importance and the capabilities of artificial intelligence. In 2023, generative AI emerged, and a new industry begins.

Why? Why is it a new industry? Because the software never existed before. We are now producing software, using computers to write software, producing software that never existed before. It is a brand-new category; it took share from nothing. It's a brand-new category, and the way you produce the software is unlike anything we've ever done before: in data centers, generating tokens, producing floating-point numbers at very large scale. It is as if in the beginning of the last Industrial Revolution, when people realized that you would set up factories, apply energy to them, and this invisible, valuable thing called electricity came out of AC generators. And 100 years later, 200 years later, we are now creating new types of electrons, tokens, using infrastructure we call factories, AI factories, to generate this new, incredibly valuable thing called artificial intelligence. A new industry has emerged.

Well, we're going to talk about many things about this new industry. We're going to talk about how we're going to do computing next. We're going to talk about the type of software that you build because of this new industry, the new software, and how you would think about this new software. What about applications in this new industry? And then maybe what's next, and how can we start preparing today for what is about to come next?

But before I start, I want to show you the soul of Nvidia, the soul of our company, at the intersection of computer graphics, physics, and artificial intelligence, all intersecting inside a computer in Omniverse, in a virtual-world simulation. Everything we're going to show you today, literally everything we're going to show you today, is a simulation, not animation. It's only beautiful because it's physics; the world is beautiful. It's only amazing because it's being animated with robotics, it's being animated with artificial intelligence.

What you're about to see all day is completely generated, completely simulated in Omniverse, and all of it, what you're about to enjoy, is the world's first concert where everything is homemade. Everything is homemade. You're about to watch some home videos, so sit back and enjoy yourself.

[Music]

[Applause]

[Music]

God, I love Nvidia!

Accelerated computing has reached the tipping point. General-purpose computing has run out of steam.

We need another way of doing computing so that we can continue to scale, so that we can continue to drive down the cost of computing, so that we can continue to consume more and more computing while being sustainable. Accelerated computing is a dramatic speed-up over general-purpose computing, and in every single industry we engage (and I'll show you many) the impact is dramatic. But in no industry is it more important than our own: the industry of using simulation tools to create products. In this industry, it is not about driving down the cost of computing; it's about driving up the scale of computing. We would like to be able to simulate the entire product that we do, completely in full fidelity, completely digitally: essentially what we call digital twins. We would like to design it, build it, simulate it, and operate it completely digitally. In order to do that, we need to accelerate an entire industry.

And today I would like to announce that we have some partners who are joining us in this journey to accelerate their entire ecosystem, so that we can bring the world into accelerated computing. But there's a bonus: when you become accelerated, your infrastructure is CUDA GPUs, and when that happens, it's exactly the same infrastructure for generative AI. And so I'm just delighted to announce several very important partnerships. These are some of the most important companies in the world.

Ansys does engineering simulation for what the world makes. We're partnering with them to CUDA-accelerate the Ansys ecosystem, to connect Ansys to the Omniverse digital twin. Incredible. The thing that's really great is that the installed base of Nvidia GPU-accelerated systems is all over the world, in every cloud, in every system, all over enterprises. And so the applications they accelerate will have a giant installed base to go serve. End users will have amazing applications, and of course system makers and CSPs will have great customer demand.

Synopsys. Synopsys is literally Nvidia's first software partner; they were there on the very first day of our company. Synopsys revolutionized the chip industry with high-level design. We are going to CUDA-accelerate Synopsys. We're accelerating computational lithography, one of the most important applications that nobody's ever known about. In order to make chips, we have to push lithography to the limit. Nvidia has created a library, a domain-specific library, that accelerates computational lithography incredibly. Once we can accelerate and software-define all of TSMC, who is announcing today that they're going to go into production with Nvidia cuLitho, once it is software-defined and accelerated, the next step is to apply generative AI to the future of semiconductor manufacturing, pushing geometry even further.

Cadence builds the world's essential EDA and SDA tools. We also use Cadence. Between these three companies, Ansys, Synopsys, and Cadence, we basically build Nvidia together. We are CUDA-accelerating Cadence. They're also building a supercomputer out of Nvidia GPUs so that their customers can do fluid dynamics simulation at 100, 1,000 times scale: basically a wind tunnel in real time.

Cadence Millennium: a supercomputer with Nvidia GPUs inside. A software company building supercomputers; I love seeing that. We're building Cadence copilots together. Imagine a day when Cadence, Synopsys, Ansys, the tool providers, would offer you AI copilots, so that we have thousands and thousands of copilot assistants helping us design chips and design systems. And we're also going to connect the Cadence digital twin platform to Omniverse. As you can see, the trend here is that we're accelerating the world's CAE, EDA, and SDA so that we can create our future in digital twins, and we're going to connect them all to Omniverse, the fundamental operating system for future digital twins.

One of the industries that benefited tremendously from scale, and you all know this one very well, is large language models. Basically, after the Transformer was invented, we were able to scale large language models at incredible rates, effectively doubling every six months.

Now, how is it possible that, by doubling every six months, we have grown the industry, we have grown the computational requirements, so far? The reason for that is quite simply this: if you double the size of the model, you double the size of your brain, and you need twice as much information to go fill it. And so every time you double your parameter count, you also have to appropriately increase your training token count. The combination of those two numbers becomes the computation scale you have to support.

The latest, state-of-the-art OpenAI model is approximately 1.8 trillion parameters. 1.8 trillion parameters required several trillion tokens to go train. So a few trillion parameters, on the order of a few trillion tokens: when you multiply the two of them together, approximately 30, 40, 50 billion quadrillion floating-point operations per second. Now we just have to do some CEO math right now; just hang with me. So you have 30 billion quadrillion. A quadrillion is like a peta. And so if you had a petaflop GPU, you would need 30 billion seconds to go compute, to go train that model. 30 billion seconds is approximately 1,000 years.

Well, 1,000 years: it's worth it. I'd like to do it sooner, but it's worth it. Which is usually my answer when most people tell me, "Hey, how long is it going to take to do something?" "So, 20 years?" "It's worth it. But can we do it next week?" And so, 1,000 years, 1,000 years.
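The back-of-the-envelope estimate above can be reproduced in a few lines of Python. This is a sketch under stated assumptions: it uses the common rule of thumb that training a dense transformer costs roughly 6 × parameters × tokens floating-point operations (a scaling-law approximation, not something stated in the keynote), and it picks 3 trillion tokens to stand in for "several trillion". The result is a total count of operations; dividing by a rate (one petaFLOP per second) is what turns it into time. The helper names are hypothetical.

```python
# Back-of-the-envelope training-compute estimate.
# Assumption: total training FLOPs ~= 6 * N * D (rule of thumb, not from the keynote).
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def training_flops(params: float, tokens: float) -> float:
    """Approximate total floating-point operations to train a dense transformer."""
    return 6.0 * params * tokens


def years_on_one_gpu(total_flops: float, flops_per_second: float) -> float:
    """Wall-clock years if one device sustained `flops_per_second` the whole time."""
    return total_flops / flops_per_second / SECONDS_PER_YEAR


params = 1.8e12   # 1.8 trillion parameters (figure quoted in the keynote)
tokens = 3e12     # "several trillion" tokens; 3e12 is an illustrative assumption
petaflop = 1e15   # a hypothetical GPU sustaining one petaFLOP per second

total = training_flops(params, tokens)
print(f"total FLOPs: {total:.2e}")                          # 3.24e+25, ~32 billion quadrillion
print(f"seconds: {total / petaflop:.2e}")                   # 3.24e+10, ~30 billion seconds
print(f"years: {years_on_one_gpu(total, petaflop):,.0f}")   # 1,027, i.e. roughly 1,000 years

# Doubling parameters and (proportionally) tokens quadruples the compute.
print(training_flops(2 * params, 2 * tokens) / total)       # 4.0
```

The last line shows why "doubling every six months" in model size is so demanding: if the token count grows along with the parameter count, each doubling of the model costs about four times the compute.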

What we need, what we need, are bigger GPUs. We need much, much bigger GPUs. We recognized this early on, and we realized that the answer is to put a whole bunch of GPUs together and, of course, innovate a whole bunch of things along the way, like inventing Tensor Cores and advancing NVLink so that we could create essentially virtually giant GPUs, and connecting them all together with amazing networks from a company called Mellanox, InfiniBand, so that we could create these giant systems. And so DGX-1 was our first version, but it wasn't the last. We built supercomputers all along the way. In 2021 we had Selene, 4,500 GPUs or so, and then in 2023 we built one of the largest AI supercomputers in the world. It's just come online: EOS.

And as we're building these things, we're trying to help the world build these things, and in order to help the world build these things, we've got to build them first. We build the chips, the systems, the networking, all of the software necessary to do this. You should see these systems. Imagine writing a piece of software that runs across the entire system, distributing the computation across thousands of GPUs; but inside are thousands of smaller GPUs. Millions of GPUs to distribute work across, and to balance the workload so that you can get the most energy efficiency, the best computation time, and keep your cost down. And so those fundamental innovations are what got us here.

And here we are. As we see the miracle of ChatGPT emerge in front of us, we also realize we have a long way to go. We need even larger models. We're going to train them with multimodal data, not just text on the internet; we're going to train them on text and images and graphs and charts. And just as we learn by watching TV, there's going to be a whole bunch of watching video, so that these models can be grounded in physics and understand that an arm doesn't go through a wall.

And so these models would have common sense by watching a lot of the world's video combined with a lot of the world's languages. They'll use things like synthetic data generation, just as you and I do when we try to learn: we might use our imagination to simulate how it's going to end up, just as I did when I was preparing for this keynote. I was simulating it all along the way. I hope it's going to turn out as well as I had it in my head.

As I was simulating how this keynote was going to turn out, somebody did say that another performer did her performance completely on a treadmill so that she could be in shape to deliver it with full energy. I didn't do that. If I get a little winded about 10 minutes into this, you know what happened.

And so, where were we? We're sitting here using synthetic data generation. We're going to use reinforcement learning. We're going to practice it in our mind. We're going to have AI working with AI, training each other, just like student-teacher debaters. All of that is going to increase the size of our model, it's going to increase the amount of data that we have, and we're going to have to build even bigger GPUs. Hopper is fantastic, but we need bigger GPUs.

And so, ladies and gentlemen, I would like to introduce you to a very, very big GPU.

[Applause]

Named after David Blackwell: mathematician, game theorist, probability. We thought it was a perfect, perfect name. Blackwell, ladies and gentlemen, enjoy this.

[Music]

Blackwell is not a chip. Blackwell is the name of a platform. People think we make GPUs, and we do, but GPUs don't look the way they used to. Here's, if you will, the heart of the Blackwell system, and inside the company it's not called Blackwell; it's just a number. This is Blackwell, sitting next to... oh, this is the most advanced GPU in the world in production today. This is Hopper. This is Hopper; Hopper changed the world. This is Blackwell.

It's okay, Hopper. You're very good. Good, good boy. Well, good girl.

208 billion transistors. And so you could see, I can see, that there's a small line between two dies. This is the first time two dies have abutted like this together in such a way that the two dies think it's one chip. There's 10 terabytes of data between it, 10 terabytes per second, so that these two sides of the Blackwell chip have no clue which side they're on. There are no memory locality issues, no cache issues; it's just one giant chip. And so, when we were told that Blackwell's ambitions were beyond the limits of physics, the engineers said, "So what?" And so this is what happened.

And so this is the Blackwell chip, and it goes into two types of systems. The first one is form, fit, function compatible with Hopper: you slide out a Hopper and you push in Blackwell. That's the reason why ramping is going to be so efficient. There are installations of Hoppers all over the world, and they could be the same infrastructure, same design; the power, the electricity, the thermals, the software, identical. Push it right back in. And so this is a Hopper version for the current HGX configuration, and this is what the second Hopper looks like. Now, this is a prototype board. Janine, could I just borrow...? Ladies and gentlemen, Janine Paul.

And so this is a fully functioning board, and I'll just be careful here. This right here is, I don't know, $10 billion. The second one's five. It gets cheaper after that, so any customers in the audience, it's okay.

All right, but this one's quite expensive. This is the bring-up board, and the way it's going to go to production is like this one here. Okay? And so you're going to take this. It has two Blackwell chips, four Blackwell dies, connected to a Grace CPU. The Grace CPU has a super-fast chip-to-chip link. What's amazing is that this computer is the first of its kind where this much computation, first of all, fits into this small of a place. Second, it's memory coherent: they feel like they're just one big happy family working on one application together, and so everything is coherent within it. You saw the numbers: there's a lot of terabytes this and terabytes that. But this is a miracle.

Let's see, what are some of the things on here? There's NVLink on top, PCI Express on the bottom, and on your... which one is mine and your left? One of them, it doesn't matter; one of them is a CPU chip-to-chip link. It's my left or yours, depending on which side. I was just trying to sort that out, and it just kind of doesn't matter. Hopefully it comes plugged in.

So, okay, so this is the Grace Blackwell system.

[Applause]

But there's more. So it turns out all of the specs are fantastic, but we need a whole lot of new features in order to push the limits beyond, if you will, the limits of physics. We would always like to get a lot more X-factors. And so one of the things that we did was invent another Transformer Engine, the second generation. It has the ability to dynamically and automatically rescale and recast numerical formats to a lower precision whenever it can. Remember, artificial intelligence is about probability, and so you kind of have, you know, approximately 1.7 times approximately 1.4 to be approximately something else. Does that make sense? And so the ability for the mathematics to retain the precision and the range necessary in that particular stage of the pipeline is super important.
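To make "rescale and recast to a lower precision" concrete, here is a minimal Python simulation of per-tensor scaling into the FP8 E4M3 format (4 exponent bits, 3 mantissa bits, largest finite magnitude 448). It is an illustrative sketch, not Nvidia's Transformer Engine: the function names are hypothetical, rounding is emulated with ordinary floats, and saturating at ±448 is a simplifying choice. It shows the point made above: a narrow format only works if a scale factor first moves the values into the range the format can represent.

```python
import math

E4M3_MAX = 448.0          # largest finite E4M3 magnitude
E4M3_MANTISSA_BITS = 3
E4M3_MIN_NORMAL_EXP = -6  # below 2**-6 values are subnormal (spacing 2**-9)


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value (emulated, saturating)."""
    if x == 0.0 or math.isnan(x):
        return x
    mag = min(abs(x), E4M3_MAX)                              # saturate instead of overflowing
    exp = max(math.floor(math.log2(mag)), E4M3_MIN_NORMAL_EXP)
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)                 # spacing of representable values
    q = round(mag / step) * step                             # round half to even, like IEEE RNE
    return math.copysign(min(q, E4M3_MAX), x)


def quantize_per_tensor(values):
    """Scale so the largest magnitude maps to E4M3_MAX, then round every element."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize(q, scale):
    return [v / scale for v in q]


acts = [1.7e-5, -3.2e-4, 2.5e-4, 9.0e-6]       # hypothetical small activations
naive = [round_to_e4m3(v) for v in acts]       # cast without scaling
q, s = quantize_per_tensor(acts)
restored = dequantize(q, s)
for a, r in zip(acts, restored):
    print(f"{a:+.3e} -> {r:+.3e}  rel. err {abs(r - a) / abs(a):.2%}")
```

Without the scale factor, all four hypothetical activations fall below E4M3's smallest subnormal (2^-9) and round to zero; with it, each survives with at most about 6% relative error. The real Transformer Engine is more elaborate; for example, its documented delayed-scaling recipe derives the scale from a history of recent amax values rather than from the current tensor alone.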

And so it's not just about the fact that we designed a smaller ALU. The world's not quite that simple. You've got to figure out when you can use that across a computation that spans thousands of GPUs and runs for weeks and weeks on end, and you want to make sure that the training job is going to converge. And so, this new Transformer Engine.

We have a fifth-generation NVLink. It's now twice as fast as Hopper, but very importantly, it has computation in the network. The reason for that is that when you have so many different GPUs working together, we have to share our information with each other. We have to synchronize and update each other, and every so often we have to reduce the partial products and then rebroadcast the sum of the partial products back to everybody else. And so there's a lot of what is called all-reduce and all-to-all and all-gather; it's all part of this area of synchronization and collectives, so that we can have GPUs working with each other. Having extraordinarily fast links, and being able to do mathematics right in the network, allows us to essentially amplify even further. So even though it's 1.8 terabytes per second, it's effectively higher than that, and so it's many times that of Hopper.
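The "reduce the partial products, then rebroadcast the sum" pattern described above is the all-reduce collective. A common way to implement it across GPUs is a ring all-reduce (a reduce-scatter followed by an all-gather); the Python sketch below simulates that textbook algorithm on plain lists so its data movement is visible. It illustrates the general algorithm only, not how NVLink's in-network computation is implemented, and `ring_all_reduce` is a hypothetical name.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce over len(buffers) workers arranged in a ring.

    Phase 1 (reduce-scatter): each vector is split into n chunks; in n - 1 steps
    every worker passes one chunk to its right-hand neighbour, which adds it to
    its own copy. Afterwards worker i holds the complete sum of chunk (i + 1) % n.
    Phase 2 (all-gather): the completed chunks circulate for n - 1 more steps,
    overwriting stale copies, until every worker holds every summed chunk.
    """
    n = len(buffers)
    length = len(buffers[0])
    bufs = [list(b) for b in buffers]                  # work on copies
    bounds = [c * length // n for c in range(n + 1)]   # chunk c = [bounds[c], bounds[c+1])

    def indices(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    def step(chunk_of, combine) -> None:
        # Snapshot all outgoing chunks first so every send in a step happens "at once".
        outgoing = []
        for i in range(n):
            c = chunk_of(i)
            outgoing.append((c, [bufs[i][k] for k in indices(c)]))
        for i, (c, data) in enumerate(outgoing):
            dst = (i + 1) % n
            for k, v in zip(indices(c), data):
                bufs[dst][k] = combine(bufs[dst][k], v)

    for s in range(n - 1):  # reduce-scatter: worker i sends chunk (i - s) % n
        step(lambda i: (i - s) % n, lambda old, new: old + new)
    for s in range(n - 1):  # all-gather: worker i forwards chunk (i + 1 - s) % n
        step(lambda i: (i + 1 - s) % n, lambda old, new: new)
    return bufs


# Three hypothetical workers, each holding a local gradient vector.
grads = [[1.0, 2.0, 3.0, 4.0],
         [10.0, 20.0, 30.0, 40.0],
         [100.0, 200.0, 300.0, 400.0]]
result = ring_all_reduce(grads)
print(result[0])  # [111.0, 222.0, 333.0, 444.0]
```

In the ring version every GPU performs the additions and each sends roughly 2(n-1)/n of its buffer over the links; the talk's point about computation in the network is that switch hardware can take over part of this reduction work, which is one way the effective bandwidth can exceed the raw link rate.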

The likelihood of a supercomputer running for weeks on end is approximately zero, and the reason for that is that there are so many components working at the same time. The statistical probability of them working continuously is very low. And so we need to make sure that whenever there is a... well, we checkpoint and restart as often as we can. But if we have the ability to detect a weak chip or a weak node early, we could retire it and maybe swap in another processor. That ability to keep the utilization of the supercomputer high, especially when you just spent $2 billion building it, is super, super important. And so we put in a RAS engine, a reliability engine that does 100% self-test, in-system test, of every single gate and every single bit of memory on the Blackwell chip and all the memory that's connected to it. It's almost as if we shipped with every single chip its own advanced tester that we test our chips with. This is the first time we're doing this; super excited about it.

Secure AI... Only at this conference do they clap for RAS.
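The claim that a weeks-long run on a very large machine almost never finishes without a fault follows from compounding many small failure probabilities. The sketch below assumes independent components with exponentially distributed lifetimes (a simplifying assumption; the component count and MTBF are made-up illustrative values, not Nvidia figures) and adds Young's classic first-order approximation for how often to checkpoint, √(2·C·M) for checkpoint cost C and system MTBF M. The function names are hypothetical.

```python
import math


def prob_no_failure(components: int, mtbf_hours: float, hours: float) -> float:
    """P(no component fails within `hours`), assuming independent components with
    exponentially distributed lifetimes and identical MTBF."""
    return math.exp(-components * hours / mtbf_hours)


def young_interval(checkpoint_cost_hours: float, system_mtbf_hours: float) -> float:
    """Young's first-order approximation of the optimal time between checkpoints."""
    return math.sqrt(2.0 * checkpoint_cost_hours * system_mtbf_hours)


# Hypothetical, illustrative numbers (not from the keynote).
components = 100_000     # GPUs, NICs, switches, memory, cables, ...
mtbf = 1_000_000.0       # per-component MTBF in hours (about 114 years)
run = 21 * 24.0          # a three-week training run, in hours

p = prob_no_failure(components, mtbf, run)
system_mtbf = mtbf / components               # expected hours between failures anywhere
interval = young_interval(0.1, system_mtbf)   # assume a checkpoint takes 6 minutes

print(f"P(three weeks without a single failure): {p:.1e}")
print(f"system MTBF: {system_mtbf:.1f} h, checkpoint every ~{interval:.1f} h")
```

Even with components that individually last for a century, the whole machine fails about every ten hours in this example. Retiring weak parts before they fail, as the RAS engine is described doing, can be thought of as raising M, which in this model lengthens the safe interval between checkpoints and cuts wasted work.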

Secure AI: obviously, you've just spent hundreds of millions of dollars creating a very important AI, and the code, the intelligence of that AI, is embodied in the parameters. You want to make sure that on the one hand you don't lose it, and on the other hand it doesn't get contaminated. And so we now have the ability to encrypt data, of course at rest, but also in transit and while it's being computed; it's all encrypted. And so we now have the ability to encrypt in transmission, and when we're computing it, it is in a trusted environment, a trusted engine environment.

And the last thing is decompression. Moving data in and out of these nodes when the compute is so fast becomes really essential, and so we've put in a high line-speed compression engine that effectively moves data 20 times faster in and out of these computers. These computers are so powerful, and they're such a large investment, that the last thing we want is to have them be idle.

And so all of these capabilities are intended to keep Blackwell fed and as busy as possible.

Overall, compared to Hopper, it is two and a half times the FP8 performance for training, per chip. It also has this new format called FP6, so that even though the computation speed is the same, the bandwidth is amplified because of the memory: the number of parameters you can store in the memory is now amplified. FP4 effectively doubles the throughput. This is vitally important for inference.

One of the things that is becoming very clear is that whenever you use a computer with AI on the other side, when you're chatting with the chatbot, when you're asking it to review or make an image, remember, in the back is a GPU generating tokens. Some people call it inference, but it's more appropriately generation. The way that computing was done in the past was retrieval. You would grab your phone, you would touch something, some signals go off, basically an email goes off to some storage somewhere. There's pre-recorded content: somebody wrote a story, or somebody made an image, or somebody recorded a video. That pre-recorded content is then streamed back to the phone and recomposed, based on a recommender system, to present the information to you.

You know that in the future the vast majority of that content will not be retrieved, and the reason for that is that it was pre-recorded by somebody who doesn't understand the context, which is the reason why we have to retrieve so much content. If you can be working with an AI that understands the context, who you are, for what reason you're fetching this information, and produces the information for you just the way you like it, the amount of energy we save, the amount of networking bandwidth we save, the amount of wasted time we save, will be tremendous. The future is generative, which is the reason why we call it generative AI, which is the reason why this is a brand-new industry. The way we compute is fundamentally different. We created a processor for the generative AI era, and one of the most important parts of it is content token generation, as we call it. This format is FP4. Well, that's a lot of computation.

5x the Gent token generation 5x the

inference capability of Hopper seems

like

enough but why stop

there the answer answer is is not enough

and I'm going to show you why I'm going

to show you why and so we would like to

have a bigger GPU even bigger than this

one and so

we decided to scale it and notice but

first let me just tell you how we've

scaled over the course of the last eight

years we've increased computation by

1,000 times eight years 1,000 times

Remember, back in the good old days of Moore's Law, it was 2x, well, 5x every... what? 10x every five years. That's the easiest math: 10x every five years, 100 times every 10 years. 100 times every 10 years, in the heyday of the PC revolution. In the last eight years, we've gone 1,000 times, and we have two more years to go. And so that puts it in perspective.
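
To put the two growth rates quoted above on the same footing, here is a minimal sketch that converts each into an equivalent constant yearly multiplier. It assumes smooth compounding, which real hardware generations do not follow; the figures (100x per 10 years, 1,000x per 8 years) are the ones from the talk.

```python
def annual_growth(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over the given years."""
    return total_factor ** (1.0 / years)


pc_era = annual_growth(100, 10)       # "100 times every 10 years"
last_eight = annual_growth(1_000, 8)  # "1,000 times" over "the last eight years"

print(f"PC-era pace:      {pc_era:.3f}x per year")
print(f"Last eight years: {last_eight:.3f}x per year")
# What the PC-era pace alone would have delivered over the same eight years
print(f"PC-era pace over 8 years: {pc_era ** 8:.1f}x")
```

At the PC-era rate, eight years would yield roughly 40x rather than 1,000x.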

The rate at which we're advancing computing is insane, and it's still not fast enough. So we built another chip. This chip is just an incredible chip; we call it the NVLink Switch.

It's 50 billion transistors, almost the size of Hopper all by itself. This switch chip has four NVLinks in it, each 1.8 terabytes per second, and, as I mentioned, it has computation in it. What is this chip for? If we were to build such a chip, we could have every single GPU talk to every other GPU at full speed at the same time. That's insane. It doesn't even make sense. But if you could do that, if you could find a way to do that and build a system to do that that's cost-effective, how incredible would it be that we could have all these GPUs connect over a coherent link so that they effectively are one giant GPU?
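
Why a switch rather than direct wiring? A simplified count helps: connecting every GPU directly to every other GPU needs a cable per pair, which grows quadratically, while a shared switch fabric needs only a fixed number of connections per GPU. The sketch below ignores real port counts and topology; `links_per_gpu` is a hypothetical parameter, not an NVLink specification.

```python
def full_mesh_links(num_gpus: int) -> int:
    """Point-to-point cables needed if every GPU is wired directly to every other GPU."""
    return num_gpus * (num_gpus - 1) // 2


def switched_links(num_gpus: int, links_per_gpu: int = 1) -> int:
    """Cables needed if every GPU instead connects only to a shared switch fabric."""
    return num_gpus * links_per_gpu


for n in (8, 16, 72):
    print(f"{n:>3} GPUs: full mesh {full_mesh_links(n):>5} links, switched {switched_links(n):>3} links")
```

At 72 GPUs, a full mesh would already need 2,556 pairwise links, which is one reason an all-to-all fabric is built around switch chips.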

Well, one of the great inventions needed to make it cost-effective is that this chip has to drive copper directly. The SerDes of this chip is just a phenomenal invention, so that we could do direct drive to copper, and as a result you can build a system that looks like this. Now, this system is kind of insane. This is one DGX. This is what a DGX looks like now. Remember, just six years ago, it was pretty heavy, but I was able to lift it. I delivered the first DGX-1 to OpenAI and the researchers there. The pictures are on the internet, and we all autographed it, and it came to my office. It's autographed there; it's really beautiful.

But you could lift it. That DGX, by the way, was 170 teraflops. If you're not familiar with the numbering system, that's 0.17 petaflops. So this is 720. The first one I delivered to OpenAI was 0.17; you could round it up to 0.2, it won't make any difference. And back then it was like, wow, you know, 30 more teraflops. And so this is now 720 petaflops, almost an exaflop for training, and the world's first one-exaflops machine in one rack. Just so you know, there are only a couple, two or three, exaflops machines on the planet as we speak. And so this is an exaflops AI system in one single rack.
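
The unit conversions above are easy to get wrong by a factor of 1,000, so here is a short sketch using the figures from the talk. One caveat, stated as an assumption: the 170-teraflop and 720-petaflop numbers may not be measured at the same numeric precision, so the ratio is indicative only.

```python
TERA, PETA, EXA = 1e12, 1e15, 1e18


def in_units(flops: float, unit: float) -> float:
    """Express a raw FLOP/s figure in the given unit (TERA, PETA or EXA)."""
    return flops / unit


dgx1 = 170 * TERA      # first DGX-1, as quoted
new_rack = 720 * PETA  # new rack, training figure as quoted

print(f"DGX-1:    {in_units(dgx1, PETA):.2f} petaflops")
print(f"New rack: {in_units(new_rack, EXA):.2f} exaflops")
print(f"Ratio:    {new_rack / dgx1:,.0f}x")
```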

Well, let's take a look at the back of it. So this is what makes it possible. That's the back: the DGX NVLink spine. 130 terabytes per second goes through the back of that chassis. That is more than the aggregate bandwidth of the internet.

So we could basically send everything to everybody within a second. And so we have 5,000 cables, 5,000 NVLink cables, two miles in total. Now, this is the amazing thing: if we had to use optics, we would have had to use transceivers and retimers, and those transceivers and retimers alone would have cost 20,000 watts, 20 kilowatts of just transceivers, just to drive the NVLink spine. As a result, we did it completely for free over the NVLink Switch, and we were able to save the 20 kilowatts for computation.

This entire rack is 120 kilowatts, so that 20 kilowatts makes a huge difference. It's liquid-cooled. What goes in is 25 degrees C, about room temperature; what comes out is 45 degrees C, about your jacuzzi. So room temperature goes in, jacuzzi comes out, 2 liters per second. We could sell a peripheral. 600,000 parts. Somebody used to say, you know, you guys make GPUs, and we do, but this is what a GPU looks like to me. When somebody says GPU, I see this.
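
The power and cooling figures above can be sanity-checked with simple arithmetic. The sketch below computes the share of the rack budget the optics would have consumed, and the heat the coolant can carry away using Q = flow × density × specific heat × ΔT. Treating the coolant as water (about 1 kg/L and 4.186 kJ/(kg·K)) is an assumption; the talk doesn't name the fluid.

```python
# Figures quoted in the talk
rack_power_kw = 120.0
optics_power_kw = 20.0
flow_l_per_s = 2.0
t_in_c, t_out_c = 25.0, 45.0

# Assumption: the coolant behaves like water.
density_kg_per_l = 1.0
specific_heat_kj_per_kg_k = 4.186

saved_fraction = optics_power_kw / rack_power_kw
# kg/s * kJ/(kg*K) * K = kJ/s = kW
heat_removal_kw = (
    flow_l_per_s * density_kg_per_l * specific_heat_kj_per_kg_k * (t_out_c - t_in_c)
)

print(f"Optics would have used {saved_fraction:.1%} of the rack budget")
print(f"Coolant at this flow and temperature rise carries ~{heat_removal_kw:.0f} kW")
```

The rough estimate comes out above the 120 kW rack figure, which suggests the quoted flow and temperatures are rounded or include headroom; treat it as an order-of-magnitude check, not a specification.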

Two years ago, when I saw a GPU, it was the HGX: 70 pounds, 35,000 parts. Our GPUs now are 600,000 parts and 3,000 pounds. 3,000 pounds: that's kind of like the weight of a carbon fiber Ferrari. I don't know if that's a useful metric.

But everybody's going, "I feel it, I feel it, I get it. Now that you mention that, I feel it." I don't know what 3,000 pounds is. Okay, so 3,000 pounds is a ton and a half, so it's not quite an elephant. So this is what a DGX looks like. Now let's see what it looks like in operation. Okay, let's imagine: how do we put this to work, and what does that mean?

Well, if you were to train a GPT model, a 1.8-trillion-parameter model, it apparently took about 3 to 5 months with 25,000 Amperes. If we were to do it with Hopper, it would probably take something like 8,000 GPUs, and it would consume 15 megawatts: 8,000 GPUs on 15 megawatts. It would take 90 days, about three months, and that would allow you to train this groundbreaking AI model. It's obviously not as expensive as anybody would think, but it's 8,000 GPUs; it's still a lot of money. And so: 8,000 GPUs, 15 megawatts. If you were to use Blackwell to do this, it would only take 2,000 GPUs. 2,000 GPUs, same 90 days, but this is the amazing part: only four megawatts of power. So from 15... yeah, that's right. And that's our goal. Our goal is to continuously drive down the cost and the energy associated with the computing (they're directly proportional to each other), so that we can continue to expand and scale up the computation that we have to do to train the next-generation models.

Well, this is training. Inference, or generation, is vitally important going forward. Probably some half of the time that NVIDIA GPUs are in the cloud these days, they're being used for token generation. They're either doing Copilot this or ChatGPT that, or all these different models that are being used when you're interacting with it, or generating images, generating videos, generating proteins, generating chemicals. There's a bunch of generation going on. All of that is in the category of computing we call inference. But inference is extremely hard for large language models, because these large language models have several properties. One, they're very large, and so they don't fit on one GPU. Imagine Excel doesn't fit on one GPU. Imagine some application you run on a daily basis doesn't fit on one computer, like a video game that doesn't fit on one computer. Most, in fact, do, and many times in the past, in hyperscale computing, many applications for many people fit on the same computer. And now, all of a sudden, this one inference application, where you're interacting with this chatbot, requires a supercomputer in the back to run it. And that's the future.
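
The training comparison above is easier to read as total energy. This sketch assumes each facility draws its quoted power continuously for the whole 90-day run (real draw varies) and spreads that power evenly over the GPUs, so "per GPU" includes everything else in the facility budget.

```python
HOURS_PER_DAY = 24


def training_energy_gwh(facility_mw: float, days: float) -> float:
    """Energy in GWh, assuming a constant facility_mw draw for the whole run."""
    return facility_mw * days * HOURS_PER_DAY / 1_000  # MWh -> GWh


def power_per_gpu_kw(facility_mw: float, num_gpus: int) -> float:
    """Facility power divided evenly across GPUs (includes non-GPU overhead)."""
    return facility_mw * 1_000 / num_gpus


# Figures quoted in the talk for the same ~90-day training run
hopper_gwh = training_energy_gwh(15, 90)    # 8,000 Hopper GPUs at 15 MW
blackwell_gwh = training_energy_gwh(4, 90)  # 2,000 Blackwell GPUs at 4 MW

print(f"Hopper:    {hopper_gwh:.2f} GWh, {power_per_gpu_kw(15, 8_000):.3f} kW per GPU")
print(f"Blackwell: {blackwell_gwh:.2f} GWh, {power_per_gpu_kw(4, 2_000):.3f} kW per GPU")
print(f"Energy ratio: {hopper_gwh / blackwell_gwh:.2f}x")
```

Per GPU, the quoted Blackwell figure is slightly higher, but a quarter as many GPUs brings the run's energy down by about 3.75x.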

The future is generative, with these chatbots, and these chatbots are trillions of tokens, trillions of parameters, and they have to generate tokens at interactive rates. Now, what does that mean? Oh well, three tokens is about a word. You know, "Space: the final frontier. These are the adventures..." That's like 80 tokens.

Okay, I don't know if that's useful to you. You know, the art of communication is selecting good analogies. Yeah, this is not going well. Everybody's going, "I don't know what he's talking about. Never seen Star Trek." And so here we are: we're trying to generate these tokens. When you're interacting with it, you're hoping that the tokens come back to you as quickly as possible, as quickly as you can read them. And so the ability to generate tokens is really important.

You have to parallelize the work of this model across many, many GPUs so that you can achieve several things. On the one hand, you would like throughput, because throughput reduces the overall cost per token of generation. So your throughput dictates the cost of delivering the service.
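
To make "throughput dictates the cost" concrete: for a fixed hourly cost of running the hardware, cost per token is inversely proportional to aggregate tokens per second. The hourly cost and throughput values below are hypothetical, chosen only to show the relationship.

```python
def cost_per_million_tokens(cost_per_hour: float, tokens_per_second: float) -> float:
    """Serving cost per million generated tokens at a given aggregate throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return cost_per_hour / tokens_per_hour * 1_000_000


# Hypothetical: the same $100/hour of hardware at two aggregate throughputs
for tps in (3_200.0, 6_400.0):
    print(f"{tps:>7.0f} tokens/s -> ${cost_per_million_tokens(100.0, tps):.2f} per million tokens")
```

Doubling throughput on the same hardware halves the cost per token.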

On the other hand, you have another rate, the interactive rate, which is also tokens per second but measured per user, and that has everything to do with quality of service. These two things compete against each other, and we have to find a way to distribute work across all of these different GPUs and parallelize it in a way that allows us to achieve both. And it turns out the search space is enormous. You know, I told you there was going to be math involved, and everybody's going, "Oh dear." I heard some gasps just now when I put up that slide.

So this right here: the y-axis is tokens per second of data center throughput; the x-axis is tokens per second of interactivity for the person. Notice the upper right is the best. You want interactivity to be very high, a large number of tokens per second per user, and you want the tokens per second per data center to be very high. The upper right is terrific; however, it's very hard to get there. And in order to search for the best answer, we have to look across every single one of those intersections, those XY coordinates. Okay, so you just look at every single XY coordinate.
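
Finding the "best" points on a chart like this comes down to a Pareto front: keep only the configurations that no other configuration beats on both axes at once. The sketch below assumes each candidate layout has already been measured; the labels A to E and their numbers are made up for illustration.

```python
from typing import List, Tuple

# (layout label, tokens/s per user, tokens/s for the whole data center)
Point = Tuple[str, float, float]


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the points no other point beats on both axes (higher is better on each)."""
    front = []
    for label, x, y in points:
        dominated = any(
            ox >= x and oy >= y and (ox > x or oy > y)
            for _, ox, oy in points
        )
        if not dominated:
            front.append((label, x, y))
    # Sort by interactivity so the front reads left to right, like the chart.
    return sorted(front, key=lambda p: p[1])


# Made-up measurements for five hypothetical layouts
measured = [
    ("A", 10.0, 900.0),
    ("B", 20.0, 700.0),
    ("C", 15.0, 600.0),
    ("D", 40.0, 300.0),
    ("E", 35.0, 250.0),
]
print([label for label, _, _ in pareto_front(measured)])  # ['A', 'B', 'D']
```

C is beaten by B on both axes and E by D, so only A, B and D form the upper-right envelope.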

All those blue dots came from some repartitioning of the software. Some optimizing solution has to go and figure out whether to use tensor parallel, expert parallel, pipeline parallel, or data parallel, and distribute this enormous model across all these different GPUs while sustaining the performance that you need. This exploration space would be impossible if not for the programmability of NVIDIA's GPUs. And so, because of CUDA, because we have such a rich ecosystem, we could explore this universe and find that green roofline. It turns out that along that green roofline, notice, you've got TP2, EP8, DP4: it means tensor parallel across two GPUs, expert parallel across eight, and data parallel across four. Notice on the other end, you've got tensor parallel across four and expert parallel across 16. The configuration, the distribution of that software, is a different runtime that would produce these different results, and you have to go discover that roofline. Well, that's just one model, and this is just one configuration of a computer. Imagine all of the models being created around the world and all the different configurations of systems that are going to be available.

So now that you understand the basics, let's take a look at inference on Blackwell compared to Hopper. And this is the extraordinary thing: in one generation, because we created a system that's designed for trillion-parameter generative AI, the inference capability of Blackwell is off the charts.
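
Part of why the search space is large is that every way of splitting a fixed GPU count across tensor (TP), pipeline (PP), expert (EP) and data (DP) parallelism is a candidate runtime. Both layouts named above happen to multiply to 64 GPUs (2 × 8 × 4 and 4 × 16) if pipeline parallelism is 1; that GPU count is an inference, not something the talk states. The sketch only enumerates factorizations and ignores the memory and topology constraints a real runtime would also apply.

```python
from itertools import product


def parallel_layouts(num_gpus: int):
    """All (tp, pp, ep, dp) degrees whose product uses exactly num_gpus GPUs."""
    divisors = [d for d in range(1, num_gpus + 1) if num_gpus % d == 0]
    layouts = []
    for tp, pp, ep in product(divisors, repeat=3):
        used = tp * pp * ep
        if num_gpus % used == 0:
            # Whatever factor is left over becomes the data-parallel degree.
            layouts.append((tp, pp, ep, num_gpus // used))
    return layouts


layouts = parallel_layouts(64)
print(len(layouts), "candidate layouts for 64 GPUs")
print((2, 1, 8, 4) in layouts, (4, 1, 16, 1) in layouts)
```

Even this toy count gives 84 layouts for one model on one machine size, and each would then have to be benchmarked to place it on the throughput-versus-interactivity chart.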

And in fact, it is some 30 times Hopper. Yeah. For large language models, large language models like ChatGPT and others like it. The blue line is Hopper. Imagine we didn't change the architecture of Hopper; we just made it a bigger chip. We just used the latest, greatest 10 terabytes per second, we connected the two chips together, and we got this giant 208-billion-transistor chip. How would we have performed if nothing else changed? And it turns out, quite wonderfully. Quite wonderfully, and that's the purple line, but not as great as it could be. And that's where the FP4 Tensor Core, the new Transformer Engine and, very importantly, the NVLink Switch come in. The reason is that all these GPUs have to share their results, the partial products, whenever they do all-to-all or all-gather, whenever they communicate with each other. That NVLink Switch communicates almost 10 times faster than what we could do in the past using the fastest networks.

Okay, so Blackwell is going to be just an amazing system for generative AI. In the future, data centers are going to be thought of, as I mentioned earlier, as AI factories. An AI factory's goal in life is to generate revenues, to generate, in this case, intelligence in this facility: not generating electricity, as with the AC generators of the last industrial revolution, but, in this industrial revolution, generating intelligence. And so this ability is super, super important.

The excitement around Blackwell is really off the charts. When we first... you know, this was a year and a half ago, two years ago, I guess two years ago, when we first started to go to market with Hopper, we had the benefit of two CSPs joining us at launch, and we were delighted. And so we had two customers. We have more now.

Unbelievable excitement for Blackwell. Unbelievable excitement. And there's a whole bunch of different configurations. Of course, I showed you the configurations that slide into the Hopper form factor, so that's easy to upgrade. I showed you examples that are liquid-cooled, the extreme versions of it: one entire rack connected by NVLink 72. Blackwell is going to be ramping to the world's AI companies, of which there are many now, doing amazing work in different modalities. The CSPs, every CSP, are geared up. All the OEMs and ODMs, regional clouds, sovereign AIs, and telcos all over the world are signing up to launch with Blackwell.

This, Blackwell, would be the most successful product launch in our history, and so I can't wait to see that. I want to thank some partners that are joining us in this. AWS is gearing up for Blackwell. They're going to build the first GPU with secure AI. They're building out a 222-exaflops system. Just now, when we animated the digital twin, if you saw all of those clusters coming down: by the way, that is not just art; that is a digital twin of what we're building. That's how big it's going to be. Besides infrastructure, we're doing a lot of things together with AWS. We're CUDA-accelerating SageMaker AI, we're CUDA-accelerating Bedrock AI, and Amazon Robotics is working with us using NVIDIA Omniverse and Isaac. They've leaned into accelerated computing.

Google is gearing up for Blackwell. GCP already has A100s, H100s, T4s, L4s, a whole fleet of NVIDIA CUDA GPUs, and they recently announced the Gemma model that runs across all of it. We're working to optimize and accelerate every aspect of GCP. We're accelerating Dataproc, the data processing engine, and JAX, XLA, Vertex AI, and MuJoCo for robotics. So we're working with Google and GCP across a whole bunch of initiatives. Oracle is gearing up for Blackwell. Oracle is a great partner of ours for NVIDIA DGX Cloud, and we're also working together to accelerate something that's really important to a lot of companies: Oracle Database. Microsoft is accelerating, and Microsoft is gearing up for Blackwell. Microsoft and NVIDIA have a wide-ranging partnership. We're CUDA-accelerating all kinds of services. When you chat, obviously, and with AI services in Microsoft Azure, it's very, very likely NVIDIA is in the back doing the inference and the token generation. They built the largest NVIDIA InfiniBand supercomputer, basically a digital twin of ours, or a physical twin of ours. We're bringing the NVIDIA ecosystem to Azure: NVIDIA DGX Cloud to Azure. NVIDIA Omniverse is now hosted in Azure, NVIDIA Healthcare is in Azure, and all of it is deeply integrated and deeply connected with Microsoft Fabric. The whole industry is gearing up for Blackwell.

This is what I'm about to show you. Most of the scenes of Blackwell that you've seen so far are the full-fidelity design of Blackwell. Everything in our company has a digital twin, and in fact this digital twin idea is really spreading; it helps companies build very complicated things perfectly the first time. And what could be more exciting than creating a digital twin to build a computer that was built in a digital twin? And so let me show you what Wistron is doing.

To meet the demand for NVIDIA accelerated computing, Wistron, one of our leading manufacturing partners, is building digital twins of NVIDIA DGX and HGX factories using custom software developed with Omniverse SDKs and APIs. For their newest factory, Wistron started with a digital twin to virtually integrate their multi-CAD and process simulation data into a unified view. Testing and optimizing layouts in this physically accurate digital environment increased worker efficiency by 51%. During construction, the Omniverse digital twin was used to verify that the physical build matched the digital plans. Identifying any discrepancies early has helped avoid costly change orders, and the results have been impressive: using a digital twin helped bring Wistron's factory online in half the time, just 2 and 1/2 months instead of five. In operation, the Omniverse digital twin helps Wistron rapidly test new layouts to accommodate new processes or improve operations in the existing space, and monitor real-time operations using live IoT data from every machine on the production line, which ultimately enabled Wistron to reduce end-to-end cycle times by 50% and defect rates by 40%. With NVIDIA AI and Omniverse, NVIDIA's global ecosystem of partners is building a new era of accelerated, AI-enabled digitalization. [Music]

That's the way it's going to be in the future: we're going to manufacture everything digitally first, and then we'll manufacture it physically. People ask me, how did it start? What got you guys so excited? What was it that you saw that caused you to go all in on this incredible idea? And it's this... hang on a second. Guys, that was going to be such a moment. That's what happens when you don't rehearse.

This, as you know, was first contact: 2012, AlexNet. You put a cat into this computer, and it comes out and says "cat." And we said, oh my God, this is going to change everything. You take one million numbers across three channels, RGB. These numbers make no sense to anybody. You put them into this software, and it compresses them, dimensionally reduces them. It reduces them from a million dimensions and turns them into three letters, one vector, one number. And it's generalized: you could have the cat be different cats, and you could have it be the front of the cat or the back of the cat. And you look at this thing and you say, unbelievable, you mean any cat? Yeah, any cat. And it was able to recognize all these cats, and we realized how it did it: systematically, structurally, it's scalable. How big can you make it? Well, how big do you want to make it? And so we imagined that this is a completely new way of writing software. And now today, as you know, you can type in the word C-A-T, and what comes out is a cat. It went the other way. Am I right?

Unbelievable. How is it possible? That's right: how is it possible? You took three letters and generated a million pixels from them, and it made sense. Well, that's the miracle. And here we are, literally just 10 years later, where we recognize text, we recognize images, we recognize videos and sounds. Not only do we recognize them, we understand their meaning. We understand the meaning of the text; that's the reason why it can chat with you and summarize for you. It doesn't just recognize the English, it understands the English; it doesn't just recognize the pixels, it understands the pixels. And you can even condition it across two modalities: you can have language condition an image and generate all kinds of interesting things.

Well, if you can understand these things, what else that you've digitized can you understand? The reason why we started with text and images is that we digitized those. But what else have we digitized? Well, it turns out we digitized a lot of things: proteins and genes and brain waves. Anything you can digitize, so long as there's structure, we can probably learn some patterns from. And if we can learn the patterns, we can understand its meaning. If we can understand its meaning, we might be able to generate it as well. And so, therefore, the generative AI revolution is here.

Well, what else can we generate? What else can we learn? Well, one of the things that we would love to learn is climate. We would love to learn extreme weather. We would love to learn how we can predict future weather at regional scales, at sufficiently high resolution, so that we can keep people out of harm's way before harm comes. Extreme weather costs the world $150 billion, surely more than that, and it's not evenly distributed: that $150 billion is concentrated in some parts of the world and, of course, on some people of the world. We need to adapt, and we need to know what's coming. And so we're creating Earth-2, a digital twin of the Earth for predicting weather, and we've made an extraordinary invention called CorrDiff: the ability to use generative AI to predict weather at extremely high resolution.

Let's take a look. As the earth's climate changes, AI-powered weather forecasting is allowing us to more accurately predict and track severe storms like Super Typhoon Chanthu, which caused widespread damage in Taiwan and the surrounding region in 2021. Current AI forecast models can accurately predict the track of storms, but they are limited to 25 km resolution, which can miss important details.

NVIDIA's CorrDiff is a revolutionary new generative AI model trained on high-resolution, radar-assimilated WRF weather forecasts and ERA5 reanalysis data. Using CorrDiff, extreme events like Chanthu can be super-resolved from 25 km to 2 km resolution with 1,000 times the speed and 3,000 times the energy efficiency of conventional weather models. By combining the speed and accuracy of NVIDIA's weather forecasting model FourCastNet with generative AI models like CorrDiff, we can explore hundreds or even thousands of kilometer-scale regional weather forecasts to provide a clear picture of the best, worst, and most likely impacts of a storm.
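
Why is going from 25 km to 2 km such a large jump? On a horizontal grid, the number of cells grows with the square of the resolution ratio, so (25 / 2)² ≈ 156 times as many cells cover the same area. The square 1,000 km region below is a hypothetical example, and the sketch ignores vertical levels and the shorter time steps that finer grids usually require.

```python
def grid_cells(domain_km: float, resolution_km: float) -> int:
    """Cells in a square domain of side domain_km at the given horizontal grid spacing."""
    per_side = round(domain_km / resolution_km)
    return per_side * per_side


domain = 1_000.0  # hypothetical 1,000 km x 1,000 km region
coarse = grid_cells(domain, 25.0)
fine = grid_cells(domain, 2.0)

print(f"25 km grid: {coarse:,} cells")
print(f" 2 km grid: {fine:,} cells")
print(f"Growth:     {fine / coarse:.2f}x")
```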

This wealth of information can help minimize loss of life and property damage. Today, CorrDiff is optimized for Taiwan, but soon generative super-sampling will be available as part of the NVIDIA Earth-2 inference service for many regions across the globe.

The Weather Company is the trusted source of global weather prediction. We are working together to accelerate their weather simulation, their first-principles-based simulation. They're also going to integrate Earth-2 CorrDiff so that they can help businesses and countries do regional high-resolution weather prediction. And so if you have some weather prediction you'd like to do, reach out to The Weather Company. Really exciting work.

NVIDIA Healthcare is something we started 15 years ago. We're super, super excited about this; this is an area we're very, very proud of. Whether it's medical imaging or gene sequencing or computational chemistry, it is very likely that NVIDIA is the computation behind it. We've done so much work in this area. Today we're announcing that we're going to do something really, really cool. Imagine all of these AI models that are being used to generate images and audio. But instead of images and audio: because it understood images and audio, all the digitization that we've done for genes and proteins and amino acids, that digitization capability, is now passed through machine learning so that we understand the language of life. The ability to understand the language of life: of course, we saw the first evidence of it with AlphaFold. This is really quite an extraordinary thing. After decades of painstaking work, using cryo-electron microscopy or X-ray crystallography, the world had only digitized and reconstructed 200,000 proteins. In just, what is it, less than a year or so, AlphaFold has reconstructed 200 million proteins, basically every protein of every living thing that's ever been sequenced.

This is completely revolutionary. Well, those models are incredibly hard to use and incredibly hard for people to build. And so what we're going to do is build them. We're going to build them for researchers around the world, and it won't be the only one; there'll be many other models that we create. And so let me show you what we're going to do with it.

Virtual screening for new medicines is a computationally intractable problem. Existing techniques can only scan billions of compounds and require days on thousands of standard compute nodes to identify new drug candidates. NVIDIA BioNeMo NIMs enable a new generative screening paradigm. Using NIMs for protein structure prediction with AlphaFold, molecule generation with MolMIM, and docking with DiffDock, we can now generate and screen candidate molecules in a matter of minutes. MolMIM can connect to custom applications to steer the generative process, iteratively optimizing for desired properties.

These applications can be defined with BioNeMo microservices or built from scratch. Here, a physics-based simulation optimizes for a molecule's ability to bind to a target protein while optimizing for other favorable molecular properties in parallel. MolMIM generates high-quality, drug-like molecules that bind to the target and are synthesizable, translating to a higher probability of developing successful medicines faster.
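
The "generate, score, keep the best, repeat" loop described in the video can be sketched generically. This is not the BioNeMo or MolMIM API: `mutate` and `score` are hypothetical stand-ins for a generative model and a docking or property oracle, and molecules are reduced to toy strings over three symbols.

```python
import random

ALPHABET = "CNO"  # toy "atoms"; real molecules are graphs, not strings


def mutate(candidate: str, rng: random.Random) -> str:
    """Hypothetical generator step: replace one random position with a random symbol."""
    i = rng.randrange(len(candidate))
    return candidate[:i] + rng.choice(ALPHABET) + candidate[i + 1:]


def score(candidate: str) -> float:
    """Hypothetical oracle standing in for docking or property prediction."""
    return candidate.count("N") - 0.5 * candidate.count("O")


def guided_search(seeds, rounds=5, keep=3, children_per_parent=4, rng_seed=0):
    """Iteratively generate variants and keep the top scorers; returns (pool, best score per round)."""
    rng = random.Random(rng_seed)
    pool = list(seeds)
    history = []
    for _ in range(rounds):
        children = [mutate(p, rng) for p in pool for _ in range(children_per_parent)]
        # Deduplicate while preserving order, then keep the top-scoring candidates.
        ranked = sorted(dict.fromkeys(pool + children), key=score, reverse=True)
        pool = ranked[:keep]
        history.append(score(pool[0]))
    return pool, history


best, history = guided_search(["CCCCCC"])
print(best[0], history)
```

Because parents stay in the pool alongside their children, the best score can never get worse from one round to the next; a real pipeline would also filter for properties such as synthesizability.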

BioNeMo is enabling a new paradigm in drug discovery, with NIMs providing on-demand microservices that can be combined to build powerful drug discovery workflows, like de novo protein design or guided molecule generation for virtual screening. BioNeMo NIMs are helping researchers and developers reinvent computational drug design. [Music]

NVIDIA MolMIM, CorrDiff: there's a whole bunch of other models, computer vision models, robotics models, and even, of course, some really, really terrific open-source language models. These models are groundbreaking; however, they're hard for companies to use. How would you use one? How would you bring it into your company and integrate it into your workflow? How would you package it up and run it?

Remember, earlier I just said that inference is an extraordinary computation problem. How would you do the optimization for each and every one of these models and put together the computing stack necessary to run that supercomputer, so that you can run these models in your company? And so we have a great idea: we're going to invent a new way for you to receive and operate software. This software comes basically in a digital box; we call it a container.

And we call it the NVIDIA Inference Microservice, a NIM. Let me explain to you what it is. A NIM is a pre-trained model, so it's pretty clever, and it is packaged and optimized to run across NVIDIA's installed base, which is very, very large. What's inside it is incredible. You have all these pre-trained, state-of-the-art models: they could be open source, they could be from one of our partners, or they could be created by us, like NVIDIA moment. It is packaged up with all of its dependencies: CUDA, the right version; cuDNN, the right version; TensorRT-LLM, distributing across multiple GPUs; Triton Inference Server; all completely packaged together. It's optimized depending on whether you have a single GPU, multiple GPUs, or multiple nodes of GPUs, and it's connected up with APIs that are simple to use. Now, think about what an AI API is. An AI API is an interface that you just talk to. And so this is a piece of software of the future that has a really simple API, and that API is called human.
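
In practice, "talking to" a packaged model still goes through an HTTP endpoint. The sketch below only builds a request; it assumes an OpenAI-style chat-completions endpoint at `http://localhost:8000/v1/chat/completions` and a placeholder model name `example/model`. Both are assumptions to check against your own deployment's documentation, and nothing is sent unless you uncomment the last lines.

```python
import json
import urllib.request

# Assumptions: a locally running service with an OpenAI-style chat endpoint.
# The URL and model name are placeholders, not confirmed values.
NIM_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "example/model") -> urllib.request.Request:
    """Build (but do not send) a JSON chat request carrying a plain-language prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Summarize today's open bugs.")
print(req.get_method(), req.full_url)
# To actually send it (requires a running service):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

The "API" the caller really cares about is the `content` string: plain language in, plain language out.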

And these packages, incredible bodies of software, will be optimized and packaged, and we'll put them on a website, and you can download them. You can take them with you; you can run them in any cloud, you can run them in your own data center, you can run them on workstations if they fit. And all you have to do is come to ai.nvidia.com. We call it NVIDIA Inference Microservice, but inside the company we all call them NIMs.

Okay, just imagine: someday there's going to be one of these chatbots, and these chatbots are going to just be in a NIM, and you'll assemble a whole bunch of bots. And that's the way software is going to be built someday. How will we build software in the future? It is unlikely that you'll write it from scratch or write a whole bunch of Python code or anything like that. It is very likely that you'll assemble a team of AIs. There's probably going to be a super AI that takes the mission you give it and breaks it down into an execution plan. Some of that execution plan could be handed off to another NIM. That NIM might understand SAP (the language of SAP is ABAP); it might understand ServiceNow, and it goes and retrieves some information from those platforms. It might then hand that result to another NIM that goes off and does some calculation on it. Maybe it's optimization software, a combinatorial optimization algorithm; maybe it's just some basic calculator; maybe it's pandas doing some numerical analysis on it. And then it comes back with its answer, and that gets combined with everybody else's, and because it's been shown what the right answer should look like, it knows what right answers to produce, and it presents the result to you. We could get a report every single day at the top of the hour that has something to do with a bill plan or some forecast or some customer alert or some bugs database or whatever it happens to be, and we could assemble it using all these NIMs. And because these NIMs have been packaged up and are ready to work on your systems, so long as you have NVIDIA GPUs in your data center or in the cloud, these NIMs will work together as a team and do amazing things.

And so we decided this is such a great idea, we're going to go do it. And so NVIDIA has NIMs running all over the company. We have chatbots being created all over the place, and one of the most important chatbots, of course, is a chip designer chatbot. You might not be surprised: we care a lot about building chips, and so we want to build chatbots, AI copilots that are co-designers with our engineers. And so this is the way we did it. We got ourselves a Llama, Llama 2. This is a 70B, packaged up in a NIM, and we asked it, "What is a CTL?" It turns out CTL is an internal program with an internal proprietary language, but the model thought CTL was combinatorial timing logic, so it described conventional knowledge of CTL, which is not very useful to us. And so we gave it a whole bunch of new examples. This is no different than onboarding an employee. We say, thanks for that answer, it's completely wrong, and then we present to them: this is what a CTL is. Okay? And so this is what a CTL is at NVIDIA.
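
The "team of AIs" idea above is essentially a planner handing steps to specialists and chaining their results. The sketch below is a deliberately simple, hypothetical version: each specialist is a plain Python function standing in for a separate packaged model, the plan is fixed rather than produced by a planner model, and the bug data is invented.

```python
from statistics import mean

# Hypothetical specialists; in the talk's vision each would be its own packaged model.


def retrieve_bugs(team: str) -> list[dict]:
    """Stand-in for retrieval from a system of record (fake in-memory data)."""
    data = [
        {"id": 1, "team": "gpu", "days_open": 3},
        {"id": 2, "team": "gpu", "days_open": 10},
        {"id": 3, "team": "net", "days_open": 5},
    ]
    return [row for row in data if row["team"] == team]


def analyze(rows: list[dict]) -> dict:
    """Stand-in for the numerical-analysis step."""
    days = [r["days_open"] for r in rows]
    return {"count": len(rows), "mean_days_open": mean(days) if days else 0.0}


def report(team: str, stats: dict) -> str:
    """Stand-in for the model that turns results into a readable answer."""
    return f"{team}: {stats['count']} open bug(s), average {stats['mean_days_open']:.1f} days open"


def plan(mission: str) -> list[str]:
    """A fixed execution plan; a real planner model would derive this from the mission."""
    return ["retrieve", "analyze", "report"]


def run(mission: str, team: str) -> str:
    """Execute each planned step, passing the previous step's output to the next."""
    result = None
    for step in plan(mission):
        if step == "retrieve":
            result = retrieve_bugs(team)
        elif step == "analyze":
            result = analyze(result)
        elif step == "report":
            result = report(team, result)
    return result


print(run("daily bug summary", "gpu"))  # gpu: 2 open bug(s), average 6.5 days open
```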

And as you can see, CTL stands for Compute Trace Library, which makes sense: we are tracing compute cycles all the time. And it wrote the program. Isn't that amazing? And so the productivity of our chip designers can go up.

This is what you can do with a NIM. The first thing you can do is customize it. We have a service called NeMo Microservice that helps you curate and prepare the data so that you can teach and onboard this AI. You fine-tune it, and then you guardrail it. You can even evaluate its answers, evaluating its performance against other examples. That's called the NeMo Microservice.

Now, what's emerging here is this: there are three elements, three pillars, to what we're doing. The first pillar is, of course, inventing the technology for AI models, running AI models, and packaging them up for you. The second is creating tools to help you modify them. And the third is infrastructure for you to fine-tune them and, if you like, deploy them. You could deploy on our infrastructure, called DGX Cloud, or you can deploy on-prem. You can deploy it anywhere you like.

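Because a NIM is packaged as a self-contained service, client code only needs to know where it is running, whether that is DGX Cloud or an on-prem server. A minimal sketch, assuming the NIM exposes an OpenAI-compatible `/v1/chat/completions` route (NVIDIA documents an OpenAI-compatible API for its LLM NIMs); the base URLs, the model name, and the `build_chat_request` helper are hypothetical, and the request is built but not sent.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a POST to an OpenAI-compatible
    chat endpoint. Only base_url (and possibly the key) changes per deployment."""
    url = base_url.rstrip("/") + "/v1/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )


# Hypothetical endpoints and model name: the same client code targets either one.
on_prem = build_chat_request("http://localhost:8000", "meta/llama2-70b", "What is a CTL?")
hosted = build_chat_request("https://nim.example.invalid", "meta/llama2-70b",
                            "What is a CTL?", api_key="YOUR_API_KEY")
print(on_prem.get_full_url())
# Sending is a separate step, e.g. urllib.request.urlopen(on_prem), once a NIM is running.
```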
Once you develop it, it's yours to take anywhere. And so we are effectively an AI foundry. We will do for you and the industry on AI what TSMC does for us building chips: we go to TSMC with our big ideas, they manufacture them, and we take them with us. Exactly the same thing here: AI Foundry, and the three pillars are the NIMs, NeMo Microservice, and DGX Cloud.

The other thing you could teach the NIM to do is to understand your proprietary information. Remember, inside our company the vast majority of our data is not in the cloud; it's inside our company. It's been sitting there, being used all the time, and, gosh, it's basically NVIDIA's intelligence. We would like to take that data and learn its meaning, like we learned the meaning of almost everything else we just talked about, and then re-index that knowledge into a new type of database called a vector database. So you essentially take structured or unstructured data, you learn its meaning, and you encode its meaning, so now this becomes an AI database. In the future, once you create that AI database, you can talk to it.

Let me give you an example of what you could do. Suppose you've got a whole bunch of multimodal data, and one good example of that is PDFs. You take all of your PDFs, all your favorite stuff that is proprietary to you and critical to your company, and you can encode it, just as we encoded the pixels of a cat and it became the word "cat." We can encode all of your PDFs, and they turn into vectors that are stored inside your vector database. It becomes the proprietary information of your company.

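The chunk, encode, store, and query flow described above can be sketched in a few lines. This is a minimal sketch, not NeMo Retriever: the hashed bag-of-words `embed` is a deliberately crude stand-in for a learned embedding model (it matches shared words, not meaning), and `chunk`, `VectorStore`, and the 256-bucket size are hypothetical names and values chosen for illustration.

```python
import math
import re
import zlib
from collections import Counter
from typing import List, Tuple

DIM = 256  # size of the toy embedding space (assumption)


def embed(text: str) -> List[float]:
    """Toy stand-in for a learned embedding model: hash each word into one of
    DIM buckets, count occurrences, and L2-normalize the result."""
    vec = [0.0] * DIM
    words = re.findall(r"[a-z0-9]+", text.lower())
    for word, count in Counter(words).items():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def chunk(text: str, size: int = 50) -> List[str]:
    """Split extracted document text (e.g. from a PDF) into ~size-word chunks."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class VectorStore:
    """In-memory list of (vector, chunk) pairs searched by cosine similarity."""

    def __init__(self) -> None:
        self.items: List[Tuple[List[float], str]] = []

    def add_document(self, text: str) -> None:
        for piece in chunk(text):
            self.items.append((embed(piece), piece))

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str]]:
        q = embed(query)
        # All vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, vec)), piece) for vec, piece in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


store = VectorStore()
store.add_document("Bug 1234: memory leak in the scheduler, fixed last night.")
store.add_document("Quarterly roadmap for the compiler team.")
for score, piece in store.search("Which bugs were fixed last night?", k=1):
    print(f"{score:.2f}  {piece}")
```

A production system swaps `embed` for a neural encoder and the list scan for an approximate nearest-neighbor index; the interface (add documents, search by query) stays the same.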
And once you have that proprietary information, you can chat with it. It's a smart database, so you just chat with data, and how much more enjoyable is that? Our software team just chats with the bugs database: "How many bugs were there last night? Are we making any progress?" And then, after you're done talking to this bugs database, you need therapy, and so we have another chatbot for you. You can do it.

Okay, so we call this NeMo Retriever, and the reason is that ultimately its job is to go retrieve information as quickly as possible. You just talk to it: "Hey, retrieve me this information." It goes and brings it back to you: "Do you mean this?" You go, "Yeah, perfect."

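After retrieval, the passages that come back are usually placed in the model's prompt so it answers from your data rather than from general knowledge; the pattern is commonly called retrieval-augmented generation. A minimal sketch of that assembly step, taking `(score, passage)` pairs such as a vector search would return; the relevance threshold, prompt wording, and helper name are assumptions, not NeMo Retriever's API.

```python
from typing import List, Tuple


def build_grounded_prompt(question: str, hits: List[Tuple[float, str]],
                          min_score: float = 0.2) -> str:
    """Hypothetical helper: keep retrieved (score, passage) pairs above a relevance
    threshold, best first, and ask the model to answer only from them."""
    kept = [text for score, text in sorted(hits, key=lambda h: h[0], reverse=True)
            if score >= min_score]
    if not kept:
        # Nothing relevant came back: ask for clarification instead of guessing.
        return f"No relevant passages were found. Ask the user to clarify: {question}"
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(kept, start=1))
    return ("Answer using only the numbered passages below, citing them by number.\n"
            f"{context}\n\nQuestion: {question}")


hits = [(0.12, "Roadmap for the compiler team."),
        (0.81, "Bug 1234: memory leak in the scheduler, fixed last night.")]
print(build_grounded_prompt("How many bugs were fixed last night?", hits))
```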
Okay, and so we call it the NeMo Retriever. The NeMo service helps you create all these things, and we have all these different NIMs. We even have NIMs of digital humans.

"I'm Rachel, your AI care manager."

Okay, so it's a really short clip, but there were so many videos to show you, I've got so many other demos to show you, and so I had to cut this one short. But this is Diana. She is a digital human NIM, and you just talk to her. In this case she's connected to Hippocratic AI's large language model for healthcare, and it's truly amazing. She is just super smart about healthcare things. And so after my Dwight, my VP of software engineering, talks to the chatbot for the bugs database, he can come over here and talk to Diana. Diana is completely animated with AI, and she's a digital human.

There are so many companies that would like to build these. They're sitting on gold mines. The enterprise IT industry is sitting on a gold mine. It's a gold mine because they have so much understanding of the way work is done. They have all these amazing tools that have been created over the years, and they're sitting on a lot of data. If they could take that gold mine and turn it into copilots, these copilots could help us do things. And so just about every IT franchise, every IT platform in the world that has valuable tools that people use, is sitting on a gold mine for copilots, and they would like to build their own copilots and their own chatbots.

And so we're announcing that NVIDIA AI Foundry is working with some of the world's great companies. SAP generates 87% of the world's global commerce. Basically, the world runs on SAP.
We run on SAP. NVIDIA and SAP are building SAP Joule copilots using NVIDIA NeMo and DGX Cloud. ServiceNow: 85% of the world's Fortune 500 companies run their people and customer service operations on ServiceNow, and they're using NVIDIA AI Foundry to build ServiceNow Assist virtual assistants. Cohesity backs up the world's data. They're sitting on a gold mine of data, hundreds of exabytes of data across over 10,000 companies. NVIDIA AI Foundry is working with them, helping them build their Gaia generative AI agent. Snowflake is a company that stores the world's