NVIDIA Is On a Different Planet

Summary

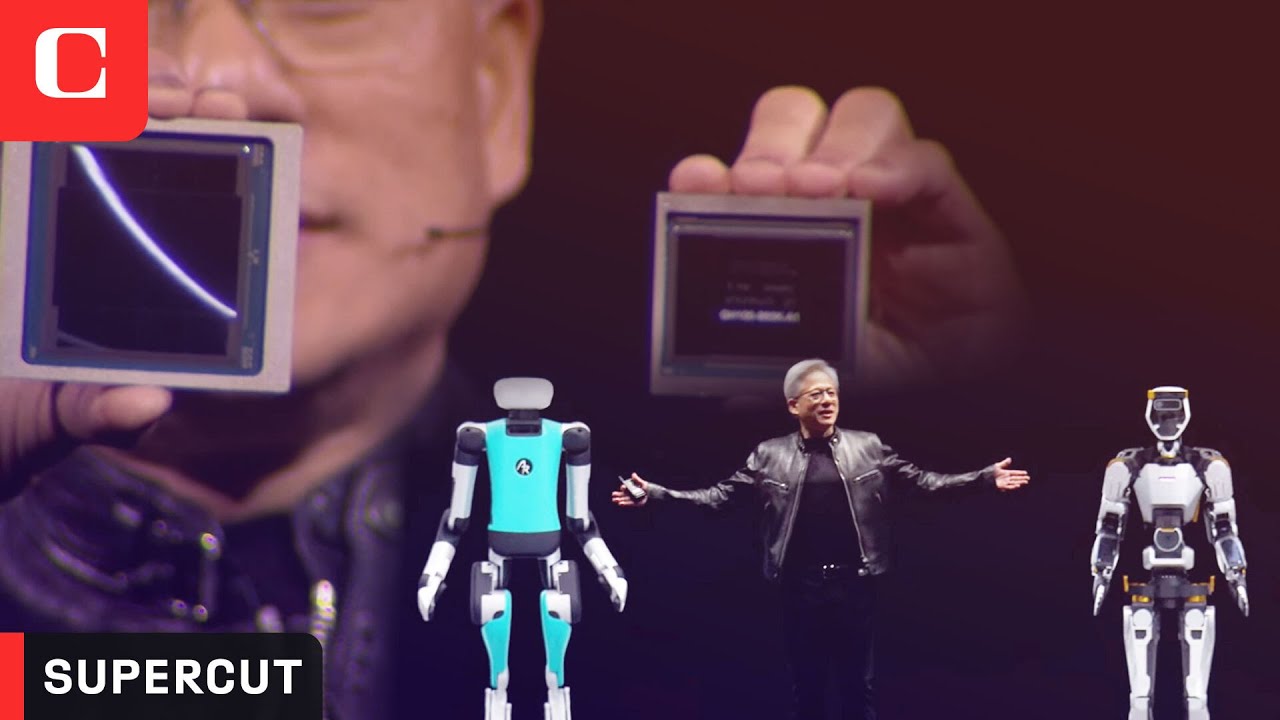

TLDR: Nvidia's GTC event unveiled the Blackwell GPU, emphasizing the company's shift from a gaming focus to AI and data center dominance. The Blackwell architecture, which combines two large dies that act as a single GPU, promises significant advances in chip-to-chip communication and multi-chip modules. Nvidia also introduced NVLink and NVLink Switch improvements for data center connectivity and a new AI foundation model for humanoid robots, highlighting its commitment to pushing the boundaries of AI technology.

Takeaways

- 🚀 Nvidia unveiled its Blackwell GPU at the GTC event, marking a significant advancement in AI and data center technology.

- 📈 Nvidia's growth in the AI market is impacting its consumer and gaming sectors, with the company now functioning as a major AI Foundry.

- 🔗 The Blackwell GPU combines two large dies into a single GPU solution, improving chip-to-chip communication and reducing latency.

- 🧠 Nvidia's focus on AI extends to humanoid robotics with Project Groot, showcasing a future where AI-powered robots could perform complex tasks.

- 🤖 The introduction of Nvidia's Thor and its multimodal AI models like Groot indicates a shift towards AI integration in various industries.

- 🌐 Nvidia's NVLink and NVLink Switch technologies aim to improve data center communication, with the new Blackwell GPUs offering increased bandwidth.

- 💡 Nvidia's RAS engine is designed for proactive hardware health monitoring and maintenance, potentially reducing downtime in data centers.

- 📊 Nvidia's NIMs (Nvidia Inference Microservices) are a suite of pre-trained AI models for businesses, emphasizing data utilization and IP ownership.

- 🔄 Multi-chip modules are highlighted as the future of high-end silicon, with Nvidia's Blackwell architecture being a notable example of this trend.

- 🎮 Despite the technical focus, gaming was not heavily discussed during the event, but the impact of Nvidia's AI advancements on gaming is expected to be significant.

- 🌐 Nvidia's market dominance is evident, with its AI and data center segments driving significant revenue and influencing the direction of the GPU market.

Q & A

What was the main focus of Nvidia's GTC event?

-The main focus of Nvidia's GTC event was the unveiling of its Blackwell GPU and discussing its advancements in AI technology, multi-chip modules, and communication hardware solutions like NVLink and NVLink Switch.

How has Nvidia's position in the market changed over the years?

-Nvidia has transitioned from being primarily a gaming company to a dominant player in the AI market, with its products now being used in some of the biggest ventures by companies like OpenAI, Google, and Amazon.

What is the significance of the Blackwell GPU for Nvidia?

-The Blackwell GPU represents a significant technological leap for Nvidia, especially in AI workloads. It combines two large dies into a single GPU solution, offering improved chip-to-chip communication and potentially setting the stage for future consumer products.

What are the implications of Nvidia's advancements in chip-to-chip communication?

-Improvements in chip-to-chip communication, such as those introduced with the Blackwell GPU, can lead to more efficient and high-performing multi-chip modules. This could result in better yields for fabrication, potentially lower costs, and the ability to handle larger data transfers crucial for AI and data center applications.
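The yield argument can be made concrete with the standard Poisson defect model, where the chance that a die has zero defects falls exponentially with its area. This is only a rough sketch: the defect density and die areas below are hypothetical values chosen for illustration, not figures from Nvidia or AMD, and the model ignores packaging cost and the yield of the die-to-die link itself.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Expected fraction of defect-free dies under a simple Poisson model."""
    return math.exp(-area_mm2 * defects_per_mm2)


# Hypothetical inputs, for illustration only.
DEFECT_DENSITY = 0.001            # defects per mm^2 (assumed)
BIG_DIE_AREA = 800.0              # one monolithic die, mm^2 (assumed)
SMALL_DIE_AREA = BIG_DIE_AREA / 2  # each of two chiplets, mm^2

monolithic_yield = poisson_yield(BIG_DIE_AREA, DEFECT_DENSITY)
chiplet_yield = poisson_yield(SMALL_DIE_AREA, DEFECT_DENSITY)

# Wafer area consumed per working product. For the chiplet case we assume
# dies are tested first and only known-good dies are paired in a package.
monolithic_area_per_good = BIG_DIE_AREA / monolithic_yield
chiplet_area_per_good = 2 * SMALL_DIE_AREA / chiplet_yield

print(f"monolithic yield: {monolithic_yield:.1%}")
print(f"per-chiplet yield: {chiplet_yield:.1%}")
print(f"silicon per good product: {monolithic_area_per_good:.0f} mm^2 "
      f"vs {chiplet_area_per_good:.0f} mm^2")
```

Under these assumptions the smaller dies waste less silicon per working product, which is the intuition behind "better yields, potentially lower costs". The saving only materializes if the link between the dies is fast enough not to cost performance.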

How does Nvidia's AI technology impact the gaming market?

-While Nvidia has emphasized its AI capabilities, its advancements also have implications for the gaming market. The company's influence in game development and feature inclusion is significant, and its GPUs are often designed to support the latest gaming technologies.

What is the role of NVLink and NVLink Switch in Nvidia's announcements?

-NVLink and NVLink Switch are communication solutions that Nvidia updated to improve the bandwidth and connectivity between GPUs. This is particularly important for data centers and multi-GPU deployments, where high-speed communication is essential for performance.
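The bandwidth figures quoted from Nvidia's technical brief in the transcript can be checked with a few lines of arithmetic. The NVLink numbers (18 links, 50 GB/s per link per direction) come from the transcript. The PCIe comparison is an assumption on our part: a x16 PCIe Gen 5 slot at 32 GT/s per lane, with encoding overhead ignored.

```python
# Fifth-generation NVLink figures as quoted in the transcript.
LINKS_PER_GPU = 18
GB_PER_S_PER_LINK_PER_DIRECTION = 50

nvlink_per_direction = LINKS_PER_GPU * GB_PER_S_PER_LINK_PER_DIRECTION
nvlink_total = 2 * nvlink_per_direction  # both directions combined

# Assumed comparison point: PCIe Gen 5 x16, 32 GT/s per lane,
# one bit per transfer, encoding overhead ignored.
PCIE5_GT_PER_S_PER_LANE = 32
PCIE5_LANES = 16
pcie5_per_direction = PCIE5_GT_PER_S_PER_LANE * PCIE5_LANES / 8  # GB/s
pcie5_total = 2 * pcie5_per_direction

ratio = nvlink_total / pcie5_total

print(f"NVLink: {nvlink_per_direction} GB/s per direction, "
      f"{nvlink_total} GB/s total")
print(f"PCIe Gen 5 x16 (assumed): {pcie5_total:.0f} GB/s total")
print(f"ratio: {ratio:.2f}x")
```

With those assumptions the ratio lands just above 14, which is consistent with the "over 14 times the bandwidth of PCIe Gen 5" claim.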

What is Nvidia's strategy with its new inference platform, NIM?

-NIMs are a selection of pre-trained AI models designed for businesses to perform various tasks such as data processing, training LLMs, and retrieval-augmented generation. They are CUDA-based, meaning they can run on any platform with Nvidia GPUs, and they allow businesses to retain full ownership and control over their intellectual property.

How do Nvidia's Project Groot and the Thor GS platform contribute to the development of humanoid robots?

-Project Groot is a general-purpose foundation model for humanoid robots, and the Thor GS platform is designed to run multimodal AI models like Groot. The Thor GS has a Blackwell-based GPU with 800 teraflops of FP8 capability and a built-in functional safety processor, making it suitable for AI-powered robotics applications.

What is the significance of the multi-chip module approach for the future of high-end silicon?

-The multi-chip module approach is considered the future of high-end silicon as it allows for higher yields and potentially lower costs. It also enables better performance by overcoming limitations in communication links between different pieces of silicon, which is crucial for complex AI and data center applications.

How does Nvidia's market position affect its competitors, Intel and AMD?

-Nvidia's dominant market position influences trends and game development, forcing competitors like Intel and AMD to keep up. Nvidia's substantial revenue from AI and data center segments gives it significant power in the GPU market, which can impact the pricing and development of consumer GPUs.

What is the potential impact of Nvidia reallocating assets from AI to gaming?

-If Nvidia reallocates resources from its successful AI segment to gaming, it could further widen the gap between itself and its competitors in terms of performance, features, and market share. This could lead to Nvidia driving more trends and developments in game technology.

Outlines

🚀 Nvidia's GTC Event and the Unveiling of Blackwell GPU

The Nvidia GTC event showcased the unveiling of the Blackwell GPU, marking a significant advancement in GPU technology. The presentation highlighted Nvidia's shift from being primarily a gaming company to a major player in the AI market. The event emphasized the importance of multi-chip modules and the challenges of chip-to-chip communication, showcasing Nvidia's innovations in this area. The discussion also touched on the impact of Nvidia's growth on the consumer and gaming markets, and the company's role in the AI sector.

🤖 Advancements in AI and Multi-Chip Technologies

This paragraph delves into the technical aspects of Nvidia's advancements, particularly in AI and multi-chip technologies. It discusses the potential of multi-chip modules to increase yields and reduce costs for consumers, as well as the focus on improving chip-to-chip communication. The summary also mentions the debut of the B1000, expected to be a multi-die product, and the significance of Nvidia's Blackwell architecture. The paragraph highlights the company's efforts in democratizing computing and the anticipation surrounding the impact of these technologies on both the enterprise and consumer markets.

🌐 Nvidia's Positioning in the AI and Data Center Markets

Nvidia's strategic positioning in the AI and data center markets is the focus of this paragraph. It discusses the company's branding as an AI foundry and the potential for its technology to influence consumer parts. The summary covers Nvidia's multi-chip module technology, the Blackwell GPU's impressive execution, and the implications for the future of high-end silicon. It also touches on the company's partnerships and the concept of digital twins, which are digital representations of real workspaces used for training robotic solutions.

🔍 Nvidia's Blackwell GPU and Its Impact on Software Development

The Blackwell GPU's impact on software development and its seamless integration as a single package solution is the central theme of this paragraph. The summary explains how Nvidia has worked to minimize the challenges of chip-to-chip communication, allowing the Blackwell GPU to behave like a monolithic silicon chip. It details the technical specifications of the Blackwell GPU, including its transistor count and memory bandwidth, and discusses the potential for the technology to be integrated into future consumer products.
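The headline specifications mentioned in the transcript are easy to sanity-check. The sketch below uses only the numbers quoted there (104 billion transistors per die, 80 billion for the H100, 192 GB of HBM3E and 8 TB/s per Blackwell GPU, two GPUs per GB200); the variable names are ours.

```python
# Transistor counts in billions, as quoted in the transcript.
TRANSISTORS_PER_DIE_B = 104
DIES_PER_GPU = 2
H100_TRANSISTORS_B = 80

blackwell_transistors_b = TRANSISTORS_PER_DIE_B * DIES_PER_GPU
transistor_ratio = blackwell_transistors_b / H100_TRANSISTORS_B

# Per-GPU memory figures, as quoted in the transcript.
HBM_PER_GPU_GB = 192
MEM_BANDWIDTH_PER_GPU_TB_S = 8
GPUS_PER_GB200 = 2  # two Blackwell GPUs plus one Grace CPU

gb200_hbm_gb = HBM_PER_GPU_GB * GPUS_PER_GB200
gb200_mem_bandwidth_tb_s = MEM_BANDWIDTH_PER_GPU_TB_S * GPUS_PER_GB200

print(f"Blackwell: {blackwell_transistors_b}B transistors "
      f"({transistor_ratio:.1f}x the H100)")
print(f"GB200: {gb200_hbm_gb} GB HBM3E, "
      f"{gb200_mem_bandwidth_tb_s} TB/s memory bandwidth")
```

The totals match the 208 billion transistors, 384 GB and 16 TB/s stated for the combined parts, so the superchip figures are straightforward doublings of the single-GPU numbers.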

🤖 AI and Robotics: Nvidia's Project Groot and NIMs

This paragraph focuses on Nvidia's venture into AI-powered robotics with Project Groot and the introduction of NIMs, a suite of pre-trained AI models for businesses. The summary covers the potential applications of humanoid robots in various industries and the cultural appeal of AI-driven robotics. It also discusses the capabilities of the Thor platform, which supports multimodal AI models and has a built-in safety processor. The paragraph highlights Nvidia's efforts to create a foundation model for humanoid robots that can understand human language and navigate the world.

💡 Market Dynamics and the Future of Consumer GPUs

The final paragraph discusses the market dynamics in the GPU industry and the potential future of consumer GPUs. The summary explores Nvidia's dominant position and its influence on game development and features. It also considers the roles of AMD and Intel in the market and their pursuit of AI technology. The discussion touches on the challenges of providing affordable entry points into the gaming market and the potential for multichip modules to become more prevalent in consumer GPUs.

Keywords

💡Nvidia

💡Blackwell GPU

💡AI

💡Multi-chip modules

💡Chiplets

💡PCI Express

💡NVLink and NVLink Switch

💡Digital Twin

💡AI Foundry

💡Project Groot

💡NIMs

Highlights

Nvidia's GTC event unveiled the Blackwell GPU, marking a significant advancement in GPU technology.

Nvidia's shift from being primarily a gaming company to focusing on AI and data center markets has been profound.

The Blackwell GPU is expected to be the first multi-die product with larger tech designs integrated into smaller chiplets.

Nvidia's growth to unfathomable heights in the AI market is impacting its consumer and gaming market behaviors.

The Blackwell architecture announcement was the main focus of Nvidia's GTC event, showcasing its capabilities and interconnects.

Nvidia's advancements in chip-to-chip communication, such as NVLink and NVLink Switch, are set to be crucial for future high-performance computing.

The Blackwell GPU combines two large dies into a single package, potentially offering a significant leap over the Hopper architecture for AI workloads.

Nvidia's Project Groot introduces a general-purpose foundation model for humanoid robots, aiming to navigate and interact with the world autonomously.

The Nvidia Thor system, with a Blackwell-based GPU and 800 teraflops of FP8 capability, is designed to run multimodal AI models.

Nvidia's NIM tool is a selection of pre-trained AI models for businesses, allowing them to retain full ownership and control over their intellectual property.

Nvidia's RAS engine is designed for monitoring hardware health and identifying potential downtime before it happens.

The NVLink and NVLink Switch technologies from Nvidia aim to improve communication between GPUs within data centers.

Nvidia's focus on AI has led to a democratization of computing, making advanced computing more accessible to the masses.

The Blackwell GPU supports up to 192 GB of HBM3E memory, offering a significant increase in memory bandwidth for data-intensive tasks.

Nvidia's GTC event also covered the importance of digital twins in software, where companies use digital representations of real workspaces for training robotic solutions.

Nvidia's branding shift positions it as an AI foundry, with the expectation that its technology will influence consumer parts in the future.

The discussion covers Nvidia's Blackwell GPU and its implications for the future of high-end silicon and consumer multi-chip modules.

The transcript highlights the commentary on the technology sphere and the absurdity of the current world situation, particularly in relation to technology advancements.

Transcripts

there's so many companies that would

like to build they're sitting on gold

mines gold mine gold mine it's a gold

mine gold mine gold mine and we've got

the

pickaxes nvidia's GTC event saw the

unveil of its Blackwell GPU and uh

generally speaking as far as Nvidia

presentations go this one was fairly

well put together there were still some

memeable quotes God I love

Nvidia if you take away all of my

friends it's okay

Hopper

you're you're very

good good good

boy well good girl PCI Express on the

bottom on on uh

your which one is M and your left one of

them it doesn't matter but M as a side

Jensen was absolutely right when he said

that Nvidia is not a gaming company

anymore and it's clear why companies

like OpenAI and Google and Amazon get a

little bit nervous when considering they

have functionally a sole GPU source for

some of their biggest ventures that they're

working on right now and Nvidia at this

point has grown to actually unfathomable

Heights it's insane to think that this

was basically a a largely gaming company

up until more recent years it always had

professional and workstation and data

center was growing but gaming was the

bulk of a lot of nvidia's revenue for a

long time and that's changed and it's

clear and how it performs in the AI

Market will impact how it behaves in the

consumer and the gaming markets but at

this point yeah we We Knew by the

numbers that Nvidia was gigantic it

didn't really sink in though until I

made myself sit through this from

mainstream news coverage Nvidia still is

a center of the universe uh huge

performance upgrade now and I had to

Google what a petaflop was but

please please stop he'll timly

democratized Computing give code that

for or Java or python or whatever else

the vast majority of us never learned

making us all Hostage to the autocratic

computer class he's busting up that club

what but I think that what they a lot of

people are wanting to hear about is the

debut of What's called the

b1000 that's not that's not the name

that's it's not even the technical part

but it's expected to be the first what's

called a multi-die product basically

larger Tech Designs put into really

small uh they're called chiplets sounds

really uh kind of cute in a way what you

said software they're also uh yeah

talking about Enterprise digital there

there was more than just the the

Blackwell

U that new technology that was uh

introduced wasn't there what else that's

right uh and actually guy's name is

David Harold Blackwell uh a

mathematician it wasn't uh Richard

Blackwell the fashionista but um just

just just shut up just please

shut like a host to a parasite gaming

has finally done something productive in

the eyes of the massive conglomerate of

non-technical media as they scramble to

tell everyone that bigger number better

and uh try to understand literally

anything about the stock they're pumping

they they don't understand what it is or

why it exists but they know that money

goes in and money comes out and so you

can speak to it in English and it would

directly generate USD you do have to

wonder though if the engineers watching

this who designed and developed all the

breakthroughs are pained by their work

being boiled down

into make investor more money now please

but the cause for all this as you would

expect was AI so we're be talking about

some of that today uh the technology is

really interesting that Nvidia discussed

some of the biggest takeaways for us

were advancements in uh chip to chip

communication multi-chip modules and

components like NVLink or NVLink

switch where uh the actual communication

link between the different pieces of

silicon starts to become the biggest

limiting factor it already was but uh

that's going to be one of the main areas

and additionally we're going to be

spending a good amount of time on just

commentary because it's we live in an

absurd world right now and at least in

the technology sphere and it deserves

some some discussion some commentary

about that too so we'll space that

throughout and in the conclusion we'll

get more into uh our thoughts on it okay

let's get started before that this video

is brought to you by Thermaltake and

The Tower 300 The Tower 300 is a full-on

showcase PC case built to present the

computer straight on with its angled

tempered glass windows or on a unique

mounting stand to show off the build in

new ways The Tower 300 has a layout that

positions the GPU fans against the mesh

panel with ventilation on the opposite

side for liquid coolers and CPUs there's

also two included 140 mm fans up top

the panels use a quick access toolless

system to be quickly popped in and out

for maintenance and you can learn more

at the link in the description below so

whether or not you're into all of the AI

discussion this is still an interesting

uh set of

technological breakthroughs or at least

just Technologies to talk about because

some of it will come into consumer

multi-chip modules are definitely the

future of large silicon uh making it

more higher yields for fabrication

hopefully lower cost that gets at least

partially passed to consumers but I have

some thoughts on that we'll talk about

later but generally speaking speak for

AI that's kind of what got all the buzz

and despite being a relatively technical

conference and relatively technically uh

dense keynote as far as Nvidia Keynotes

go they knew a lot of Financial and

investment firms and eyes were watching

this one and so there was some appeal to

some of the chaos that those

organizations like to observe making us

all Hostage to the autocratic computer

class moving on let's start with a

summary of the two hours of Nvidia

announcements there's a lot less fluff this

time than they've typically had there

was still some fluff okay so 3,000 lb

ton and a half so it's not quite an

elephant four

elephants one

GPU and for our European viewers that's

actually an imperial measurement it's

pretty common here we use the weight of

elephants to compare things for example

one of our mod mats weighs about

0.00

4165 of an African bush elephant adult

as fast as possible pertaining to

Blackwell Nvidia made these

announcements the Blackwell

architectural announcement took most of

the focus Nvidia discussed its two

largest possible dies acting as a

single GPU Nvidia showed the bring-up

board that Huang only half jokingly uh

noted as being $1 billion cost to build

for testing and relating to this it

spent some time on the various

interconnects and communication Hardware

required to facilitate chip-to-chip

transfer NVLink Switch is probably one

of the most important announcements of its

presentation its Quantum InfiniBand

switch was another of the communications

announcements a lot of time was also

spent on varying configurations of Blackwell

like multi-GPU deployments and

servers of varying sizes for data

centers DGX was another of those

discussed outside of these announcements

Nvidia showed some humanoid robotics

programs such as Project Groot giving us

some Tony Stark Vibes including

showcasing these awfully cute robots

that were definitely intended to assuage

all concerns about the future downfall

of society from

Terminators five things where you

going I sit right

here Don't Be Afraid come here green

hurry

up what are you

saying as always the quickest way to get

people to accept something they are

frightened of is by making it cute and

now after watching that my biggest

concern is actually if they'll allow

green to keep its job it was stage

fright it's new it's still learning

doesn't deserve to lose its job and its

livelihood over that one small flub on

stage and wait a

minute it's working and during all of

this robotics discussion they also spent

time speaking of various Partnerships

and digital twins software is a huge

aspect of this uh companies are using

digital representation of their real

workspaces to train robotic Solutions

and a modernized take on Automation and

the general theme was that Nvidia is

branding itself differently now and so

we are

effectively an AI Foundry but our

estimation is that this technology will

still work its way into consumer Parts

in some capacity or another multi-chip

solutions are clearly the future for

high-end silicon AMD has done well to

get there first in a big way but Nvidia

published its own multi-chip module

white paper many years ago and it's been

working on this for about as long

as AMD AMD went multi-chip with its

consumer GPU products the RX 7000 series

Nvidia has now done the same with

Blackwell but with a more impressive

execution of the chip-to-chip

communication which is maybe made easier

by the fact that companies spend

millions of dollars on these looking at

the Blackwell silicon held up by Huang

during the keynote despite obviously

limited sort of quality of footage at

this vantage point we think we can see

the split centrally located as described

with additional splits for the HBM you

can see those dividing lines in this

shot so now we're getting into recapping

the Blackwell Hardware side of things

Blackwell combines two of what Nvidia

calls the largest possible dies into

basically a single GPU solution or a

single package solution at least in

combination with on-die memory and uh Huang

described Blackwell as being unaware of

its connection between the two separate

dies and this is sort of the most

important aspect of this because

as described at least on stage this

would imply that the Silicon would

behave like normal monolithic silicon

where it doesn't need special

programming considerations made by the

software developers by uh those using

the chip to work around things like

chip-to-chip communication uh chip-to-chip

latency like you see just for example in

the consumer world on Ryzen CPUs where

uh Crossing from one CCX to another

imposes all kinds of new challenges to

deal with and there's not really been a

great way to deal with those if you're

heavily affected by it other than trying

to isolate the work onto a single piece

of silicon in that specific example but

Nvidia says that it has worked around

this so the total combined solution is a

208 billion transistor GPU or more

accurately a combination of two pieces

of silicon that are each 104 billion

transistors for context the H100 has 80

billion transistors that's the previous

one but they're still selling it they

still have orders backlogged to fulfill

Nvidia had various claims of how many

X's better than previous Solutions the

new Blackwell solution would be with

those multipliers ranging depending on

how many gpus are used what Precision it

is if it's related to the power uh or

whatever but at the end of the day

Blackwell appears to be a big jump over

Hopper for AI workloads there was no

real mention of gaming but it's likely

that gaming gets a derivative somewhere

it's likely in the 50 Series we'll see

Blackwell unless Nvidia pulls a Volta

and skips but that seems unlikely given

the current rumors Blackwell supports up

to 192 GB of HBM3E memory or depending

on which slide you reference HMB3E

memory the good news is that that error

means that the slides are still done by

a human at Nvidia the bad news is that

we don't know how much longer they're

going to be done by a human at Nvidia

Blackwell has 8 terabytes per second of

memory bandwidth as defined in this

image and as for derivative

configurations or Alternatives of this

the Grace Blackwell combination uses a

Blackwell GPU solution and Grace CPUs

which is an Nvidia Arm collaboration

previously launched these combinations

create a full CPU and GPU product with

varying counts of gpus and CPUs present

Nvidia noted that the two Blackwell

GPUs and one Grace CPU configuration

would host 384 GB of HBM3E 72 Arm

Neoverse V2 CPU cores and it has 900 GB

per second of NVLink bandwidth chip to

chip Nvidia says its GB200 so-called

superchip has 16 terabytes per second of

high bandwidth memory 3.6 terabytes per

second of NVLink bandwidth and 40

petaflops of AI performance depending on how

charitably you define that and turning

this into a Blackwell compute node

pushes that up to 1.7 terabytes of HBM3E

which is an obscene amount of memory

uh 32 TB per second of memory bandwidth

and liquid cooling most of the

discussion Beyond this point focused on

various Communications Hardware

solutions including both in-chip and

inter-node or data center solutions we

previously met with Sam Naffziger from

AMD who is an excellent engineer great

uh communicator and presenter uh

engineer is actually kind of

underserving his position at AMD he's

considered a fellow which is basically

the highest technical rank you can get

there uh but anyway we talked with him

previously about AMD moving to multichip

for RX 7000 although it's a different

product it was a different era a

different target market a lot of the key

challenges are the same for what Nvidia

faced and what AMD was facing and

ultimately those challenges largely uh

Center on the principle of if running

multiple pieces of silicon obviously

they can only be as fast as the literal

link connecting them the reason for

bringing this up though is probably

because a lot of you have either

forgotten that discussion or never saw

it uh and it's very relevant here so

this is going to be a short clip from

our prior interview with AMD talking

about some of the chip-to-chip

Communications and uh chiplet um

interconnect and fabric limitations so

that we all get some good context for

what Nvidia is facing as well the

bandwidth requirements are just so much

higher with GPU because we're

distributing all of this work the you

know terabytes of data we loved the

chiplet concept we knew that the wire

counts were just too high in graphics to

do to replay what we did on CPUs

and so we were scratching our head um

you know how can we get significant

benefit um and we were aware of those

scaling curves that I showed and and the

observation was you know there actually

is a pretty clean boundary between the

infinity cache um and out and we we

recognized that these things weren't

didn't need 5 nanometer and they were

just fine for the product and in 6 nm we

were hardly spending any power you know

and the and the the GDDR6 itself

doesn't benefit at all from technology

so that's where we came up with the idea

you know we already have these GDDR6

interfaces in 6 nm technology like I

talked about the cost of porting right

and all the engineers and we already had

that and we could just split it off into

its own little die and um I mean you can

see see the results right so we were

spending 520 millimeters squared here we

increased our um our compute unit count

by 20% we added a bunch of new

capability but we so this thing was

would be like over you know it be

pushing 600 550 millimeters squared or

something right um but we shrank it down

to 300 the rest of that discussion was

also great you should check it out if

you haven't seen it we'll link it below

it's in our engineering discussions

playlist where we've actually recently

had Nvidia on for latency discussion and

Intel on for talking about how drivers

work driver optimization all that stuff

but that's all linked below all right so

the key challenge is getting the amount

of tiny wires connecting the chips to

fit without losing performance using

that real estate for them cost making

sure that uh although there's benefit

from yields you're not causing new

problems and then just the speed itself

being the number one issue and for AMD

it was able to solve these issues well

enough where it segmented the components

of the GPU into MCDs and GCDs so it

didn't really split the compute it split

parts of the memory subsystem out and

that's a lot different from what Nvidia

is doing Nvidia is going the next step

with Blackwell which is a a much more

expensive part totally different use

case than we see with RX 7000 although

AMD has its own MI Instinct cards as

well that we've talked about with Wendell

in the past but uh either way this is

something where it's going a step

further for NVIDIA and uh it actually

appears to be just two literal Blackwell

complete dies next to each other that

uh that behave as one GPU if what Jensen

Huang is saying is accurate there

there's a small line between two dies

this is the first time two dies have abutted

like this together in such a way that

the two chip the two dies think it's

one chip there's 10 terabytes of data

between it 10 terabytes per second so

that these two these two sides of the

Blackwell Chip have no clue which side

they're on there's no memory locality

issues no cache issues it's just one

giant chip so that's the big difference

between amd's consumer design we

previously saw and what nvidia's doing

here uh from what Nvidia's Catanzaro

said on Twitter our understanding is

that the 10 terabytes per second link

theoretically makes all the Silicon

appear uniform to software and we're not

experts in this field or programming but

if this means that the need to write

special code for managing and scheduling

work beyond what would normally be done

anyway for a GPU is not needed here then

that's a critical step to encouraging in

socket functionally upgrades for data

centers faster adoption things like that

the next biggest challenge after this is

getting each individual GPU to speak

with the other gpus in the same Rack or

data center this is handled by a lot of

components including just the actual

literal physical wires that are

connecting things as you scale into true

data center size uh but to us again as

people who aren't part of the data

center world the most seemingly

important is the NVLink and NVLink

Switch uh improvements that Nvidia

announced as well Nvidia's NVLink

generation 5 solution supports up to 576

GPUs concurrently in a technical brief

Nvidia said this of its new NVLink

Switch quote while the new NVLink in

Blackwell GPUs also uses two high-speed

differential pairs in each direction to

form a single link as in the Hopper GPU

Nvidia Blackwell doubles the effective

bandwidth per link to 50 GB per second

in each Direction Blackwell gpus include

18 fifth generation NVLink links to

provide 1.8 terabytes per second total

bandwidth 900 GB per second in each

direction Nvidia's technical brief also

noted that this is over 14 times the

bandwidth of PCIe Gen 5 just quickly

without spending a ton of time here

Nvidia also highlighted a Diagnostics uh

component to all of this which I thought

was really cool actually um it's the

reliability availability and

serviceability engine they're calling it

RAS for monitoring hardware health and

identifying potential downtime before it

happens so this is one of the things we

talk about a lot internally which is we

produce so much data logging of all the

tests we're running uh but one of the

biggest challenges is that it is

difficult to to do something with that

data uh and we have systems in place for

the charts we make but there's a lot

more we could do with it it's just that

you need a system as in a computer to

basically start identifying those things

for you to really leverage it and so RAS

does that on a serviceability and a

maintenance and uptime standpoint but

they also talked about it with their

NIMs so Nvidia talking about its new

Nvidia inference NIM tool uh is a lot

less flashy than the humanoid robots and

bleeding edge GPUs but probably one of

the more actually immediately useful

from a business standpoint and this is

intended to uh be a selection of

pre-trained AI models for businesses

that they can use to do a number of

tasks including data processing training

LLMs um retrieval augmented generation

or

RAG great acronym but NIMs are CUDA

based so they can be run basically

anywhere Nvidia GPUs live cloud

on-premise servers local workstations

whatever businesses will also be able to

retain full ownership and control over

their intellectual property something

that's hugely important when considering

adding AI to any workflow a great

example of this is Nvidia's own

ChipNeMo an LLM that the company uses

internally to work with its own vast

documentation on chip design stuff that

can't get out and is incredibly useful

NIMs will be able to interface with

various platforms like ServiceNow and

CrowdStrike or custom internal systems

there are a lot of businesses that

generate oceans of data that could help

them identify issues optimizations or

trends in general but may not have had

a good idea what to do with the data or

how to draw patterns from it and Jensen

Huang said this during the GTC

presentation the enterprise IT

industry is sitting on a gold mine it's

a gold mine because they have so much

understanding of uh the way work is

done they have all these amazing tools

that have been created over the years

and they're sitting on a lot of data if

they could take that gold mine and turn

it into co-pilots these co-pilots could

help us do things and again we're back

to if data is the gold mine then Nvidia

in the Gold Rush is selling the pickaxes

maybe like the Bagger 288 excavator

or something in the case of the DGX

are you impressed with my excavation

knowledge Nvidia also announced its

Project Groot with zeros which is a

legally significant distinction this is

described as a general purpose

Foundation model for humanoid robots

their quote so that's right it's robots

coming soon not the vacuum kind but the

Will Smith kind and it's going to smack

Us in the face autonomous or AI powered

robotics extend to a lot of practical

applications in vehicles industrial

machines and warehouse jobs just to name

a few however the Sci-Fi appeal of

pseudo intelligent humanoid robots is a

part of our Collective culture that even

mainstream media has been picking up on

or it's been trying to he announced

at the end of the show uh what they call

Groot which is basically a new type of

AI architecture a foundation model uh

for

humanoid like robots so uh you can think

uh not exactly uh Terminator or Bender

from Futurama but is the classic study

of a false dichotomy your two options

are a killing machine or an

inappropriate alcoholic robot that has a

penchant for gambling yeah well I'm going

to go build my own theme park with

Blackjack and hook Groot as a project is

both software and hardware and stands

for Generalist Robot 00 Technology as

a sum of parts Nvidia wants Groot

machines to be able to understand human

language sense and navigate the world

around themselves uh and Carry Out

arbitrary tasks like getting gpus out of

the oven which is obviously an extremely

common occurrence over at Nvidia these

days the training part of the stack

starts with video so the model can sort

of intuitively understand physics then

accurately modeling the robots in

Virtual space Nvidia Omniverse digital

twin Tech in order to teach the model

how to move around and interact with

terrain objects or other robots that

will eventually rise up to rule us all

Jensen described this as a gym here the

model can learn Basics far quicker than

in the real world that one blue robot is

actually really good at knocking others

down the stairs we noticed not sure if

that's going into the training data but

if it does it's just the first step in

their arsenal of methods to

dispatch humans I'm just I just saw a

lot of there all the mainstream Outlets

were talking about like how the world's

going to end and I kind of wanted I felt

left out the hardware is Nvidia's Thor

SoC which has a Blackwell-based GPU with

800 teraflops of FP8 capability on

board uh Jetson Thor will run

multimodal AI models like Groot and has

a built-in functional safety processor

that will definitely never malfunction

so we mostly cover gaming hardware here back

to the commentary stuff some of the fun

just sort of discussion and thought

experiment and in this instance it does

seem like the major breakthroughs that

at least to me are the most

personally interesting are those in

multi-chip design which we have plenty

of evidence that uh when it works it

works phenomenally well for Value it's

it's kind of a question of is NVIDIA

going to be the company that will uh

feel it's in a position to need to

propose a good value like AMD was when

it was up against Intel getting

slaughtered by ancient Skylake silicon

rehashed year after year the answer is

probably no Nvidia is not in that

position uh and so it's it's it's a

different world for them but we're not

sure if this is going to happen for the

RTX 5000 series there's no actual news

yet there's some rumors out there uh but

there's no real hard news Nvidia is

clearly however moving in the direction

of multichip modules again they

published that white paper or something

this was a long time ago might might be

more than five years ago now uh so this

has been known it's just been a question

of if they can get the implementation

working in a competitive and performant

way uh where they feel it's worth

flipping the switch on getting away from

monolithic for Consumer now AMD has

shown at least success in a technical

capacity for its RX 7000 series uh they

are relatively competitive especially in

rasterization ray tracing sometimes a

different story it depends on the game

but uh they do get slaughtered in say

cyberpunk with really high RT workloads

but either way the point is that uh that

particular issue may have existed

monolithic or multi-chip and they've

shown that multichip can work for

Consumer so either way Nvidia right now

whether AMD gets its Zen moment for gpus

as well Nvidia remains a scary Beast it

is very powerful it's a ginormous

company and that quote from the Roundup

earlier uh from the one is actually

somewhat true where he said this is

nvidia's world and uh not totally wrong

so Intel and AMD are almost certainly

motivated to stay in gpus for AI

purposes just like Nvidia is that they

chase money that's the job of the

corporations that are publicly funded

and gigantic like these AI is money they

are going to chase it and the Fallout of

that is consumer gpus um so the the

difference maybe being that Nvidia has

less meaning to provide cheap entry

points into the gaming Market as we've

seen they haven't really done anything

lower than a 4060 in the modern uh

lineup that's affordable and they don't

have as much motive there's not really a

lot of reason to go chase the smaller

dollar amount when they can go get $1,600

to $2,000 for a

4090 uh at least retail assembled cost

and then tens and hundreds and more of

thousands of dollars in Ai and data

center Parts uh so that's where AMD and

Intel will kind of remain at least

immediately critical is keeping that

part of the market healthy and Alive

making it possible to build PCS for

reasonable price without it getting

ballooned out of control where

everyone's eventually sort of snowed and

gaslit into thinking this is just what GPUs

should cost now that gpus have gotten

such high average selling price and

they've sustained a little bit better

from the company's perspective than

previously uh it seems maybe unlikely

that they would just bring it down like

in other words if people are used to

spending $1,000 on a GPU and even if the

cost can come down in some respect uh

with advancements and things like we get

ryzen style chiplets in the future that

work on a GPU level in spite of those

costs maybe being more controllable for

the manufacturer and better yields they

might still try to find a way to sell

you a $1,000 GPU if you're buying them

if you're showing interest in them uh

that's that's how they're going to

respond unless there's significant

competition where they start

undercutting each other which obviously

is ideal for everyone here but that's

not the world we live in right now it's

the market share is largely Nvidia no

matter which Market you look at in the

GPU world and no matter how you define

gpus on the positive side our hope is

that seeing some of what they're doing

with Blackwell with multichip means that

there's a pathway to get multichip into

consumer in bigger ways than it is now

for gpus which again ryzen just sets

it's this uh enormous success story of

being able to make relatively affordable

Parts uh without actually losing money

on them and that's been the critical

component of it it's just that we're

missing the one other key aspect of

ryzen's success which was that AMD had

no other options when it launched ryzen

it it did not have the option to be

expensive whereas Nvidia does so that's

pretty different one thing's clear

though Nvidia is a behemoth it again

operates on a completely different

planet from most of the other companies

in this industry in general if it

reallocates any amount of the assets

it's gaining from AI into gaming that is

going to further widen the gulf between

them and their competitors in in all

aspects of uh gaming gpus and part of

this you see

materialize in just market share where

Nvidia is able to drive Trends and

actual game development and features

that are included in games because it

has captured so much of the market uh it

can convince these developers that hey

if you put this in statistically most of

the people who play your game will be

able to use it on our GPU and that

forces Intel and AMD into a position

where they're basically perpetually

trying to keep up uh and that's a tough

position to fight from when the one

they're trying to keep up with has

insane revenue from segments outside of

the battle that they're fighting in

gaming so anyway AMD and then Intel

aren't slouches so despite whatever both

uh companies have for issues with their

gpus we've seen that they are also

making progress uh they're clearly

staffed with capable engineers and also

pursuing AI so some of that technology

will work its way into consumer as well

and uh AMD again stands as a good

example of showing that even though a

company can have a near complete

Monopoly over a segment talking about

Intel here many years ago if they get

complacent uh or if they just have a

series of stumbles like Intel had with

10 nanometer not being able to ship it

for forever then

they can lose that position faster than

they gained it that's kind of scary too

for the companies it just seems like

Nvidia is maybe a little more aware of

that than intel was at the time so

that's it for this one hopefully you got

some value out of it even if it was just

entertainment Watching Me Be baffled

by Massive media conglomerates failing

to get even the name of the thing

they're reporting on right when they are

some of the uh the largest and most

established companies in the world with

the longest history of reporting

on news but that's

okay we'll keep making YouTube videos

thanks for watching subscribe for more

go to store.gamersnexus.net to help us

out directly if you want to help fund

our discussion of the absurdity of it

all and we'll see you all next time
