Microsoft's New PHI-3 AI Turns Your iPhone Into an AI Superpower! (Game Changer!)

Summary

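TLDR: Microsoft's Phi-3 Mini is a major innovation that shrinks advanced AI down to pocket size. It can even run on an iPhone 14 without compromising user privacy. The model has 3.8 billion parameters and was trained on 3.3 trillion tokens, with performance comparable to much larger models such as Mixtral 8x7B and GPT-3.5. Its training focused on improving the quality and usefulness of the data rather than simply increasing model size. It uses a Transformer decoder with a default context length of 4K tokens, letting it handle a broad range of information. The model was also designed with the open-source community in mind: it has a block structure similar to Llama 2 and uses the same tokenizer, with a vocabulary of 32,064 tokens. Quantized to 4 bits, Phi-3 Mini takes up about 1.8 GB on an iPhone 14 and generates more than 12 tokens per second without an internet connection, making advanced AI available offline. In safety testing, it showed a lower risk of producing harmful content in multi-turn conversations. Microsoft also developed Phi-3 Small and Phi-3 Medium, with 7 billion and 14 billion parameters respectively, trained on the same high-quality data. Phi-3 Mini's development emphasizes community engagement and support, and its flexible design includes long-text processing. This innovation shows a practical application of AI on personal devices and hints at how smarter, more adaptive, and more personalized technology will fit into daily life.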

Takeaways

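- 📱 Microsoft has released a small AI model called Phi-3 Mini that can run on an iPhone 14, delivering advanced AI capabilities while protecting user privacy.

- 🔍 Phi-3 Mini has 3.8 billion parameters and was trained on 3.3 trillion tokens, giving it performance comparable to much larger models such as Mixtral 8x7B and GPT-3.5.

- 📈 Microsoft achieved this by improving the quality and usefulness of the training data rather than simply increasing model size.

- 🌐 Phi-3 Mini is trained on carefully selected web data plus synthetic data generated by other language models, which improves its ability to understand and generate human-like text.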

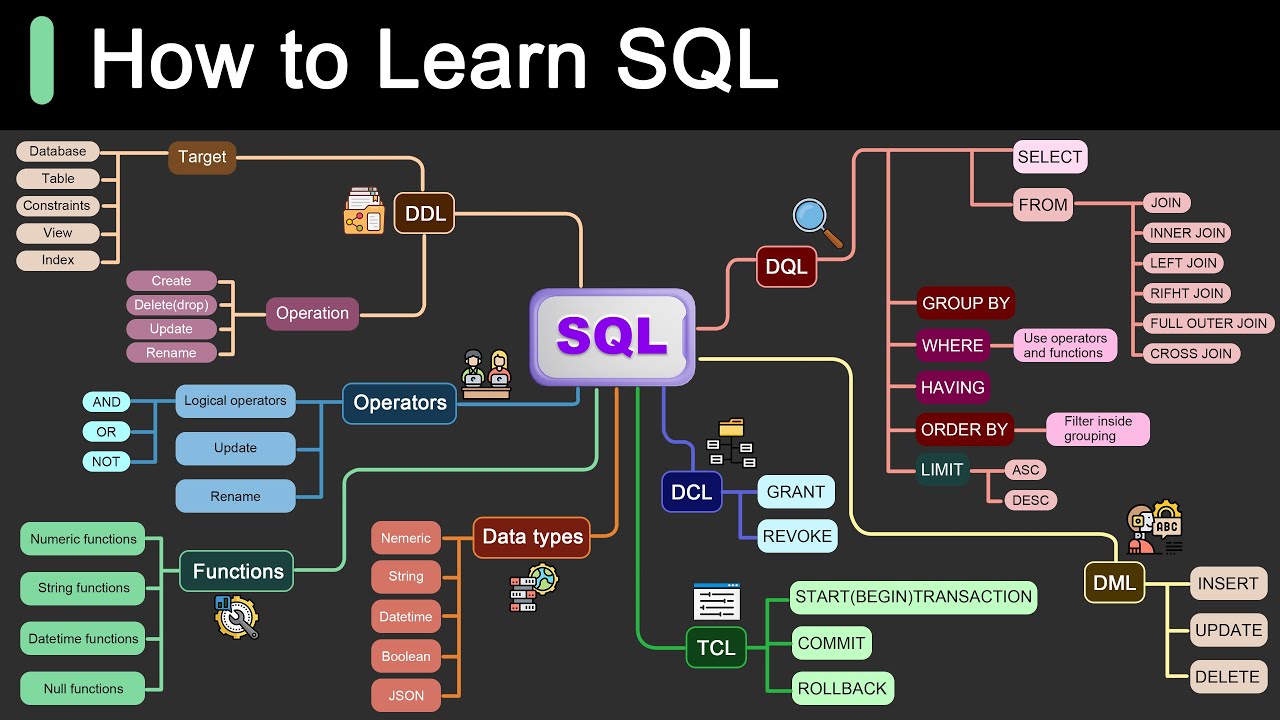

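- 🔩 The model is built on a Transformer decoder with a default context length of 4K tokens, so it can handle broad and deep information despite its small size.

- 🔗 To support the open-source community, Phi-3 Mini uses a block structure similar to Llama 2 and the same tokenizer, with a vocabulary of 32,064 tokens.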

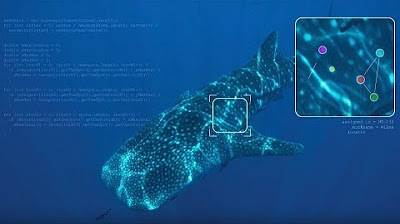

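- 📊 Phi-3 Mini runs directly on the iPhone 14's A16 Bionic chip, generating more than 12 tokens per second with no internet connection.

- 📈 Phi-3 Mini performed strongly in internal and external testing, scoring on par with larger models on well-known benchmarks such as MMLU and MT-Bench.

- 🔧 Microsoft also developed Phi-3 Small and Phi-3 Medium, with 7 billion and 14 billion parameters respectively, trained for longer on the same high-quality data.

- 🔬 Phi-3 Mini went through extensive testing during development, including safety checks and automated testing, to make sure it does not produce harmful content.

- 🌟 Phi-3 Mini's design emphasizes community engagement and support, and it is flexible, including a long-context variant that can process up to 128K tokens.

- ✅ Microsoft's Phi-3 Mini marks an important step toward bringing powerful AI tools into everyday life in a practical way.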
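The takeaways describe Phi-3 Mini as a Transformer decoder with a 4K default context length. The core of such a decoder is causal self-attention: each position may attend only to itself and earlier positions, and the input is capped at the context window. The sketch below is a single-head, pure-Python illustration of that idea, not Microsoft's implementation; the `CONTEXT_LENGTH = 4096` constant (reading "4K" as 4,096 tokens) and the helper names are assumptions.

```python
import math
from typing import List

Vector = List[float]

# Assumption: "4K" is read as 4,096 tokens.
CONTEXT_LENGTH = 4096


def fit_to_context(token_ids: List[int], max_len: int = CONTEXT_LENGTH) -> List[int]:
    """Keep only the most recent tokens that fit in the context window."""
    return token_ids[-max_len:] if max_len > 0 else []


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: List[Vector], k: List[Vector], v: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with a causal mask:
    position i may only attend to positions 0..i."""
    d = len(q[0])
    outputs: List[Vector] = []
    for i, qi in enumerate(q):
        # Only keys at positions <= i are scored (the causal mask).
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted sum of the visible value vectors.
        outputs.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return outputs


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    # Same vectors as queries, keys and values keeps the example tiny.
    print(causal_self_attention(x, x, x))
    print(len(fit_to_context(list(range(5000)))))
```

Because of the mask, changing a later token never changes the outputs for earlier positions, which is what lets a decoder generate text one token at a time.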

Q & A

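What major move has Microsoft made in AI?

- Microsoft developed a small AI model called Phi-3 Mini that runs on ordinary smartphones such as the iPhone 14, delivering advanced AI features without sacrificing user privacy.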

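How many parameters does Phi-3 Mini have, and which larger models does it match?

- Phi-3 Mini has 3.8 billion parameters and was trained on 3.3 trillion tokens. Its performance rivals much larger models such as Mixtral 8x7B and GPT-3.5.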

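What breakthrough did Phi-3 Mini make in its training data?

- The breakthrough lies in a careful upgrade of its training data: Microsoft invested heavily in improving the quality and usefulness of the data rather than merely increasing its volume.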
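The source does not say how data quality was measured, so the following is only an illustration of "quality over quantity" as a filtering step. A hypothetical `quality_score` heuristic (share of purely alphabetic words, discounted for very short documents) ranks documents, and only those above a threshold survive. The heuristic, the 20-word length cutoff, and the 0.5 threshold are all assumptions; a production filter would likely be far more sophisticated.

```python
from typing import List


def quality_score(doc: str) -> float:
    """Hypothetical heuristic: fraction of purely alphabetic words,
    scaled down for documents shorter than 20 words."""
    words = doc.split()
    if not words:
        return 0.0
    alpha_ratio = sum(w.isalpha() for w in words) / len(words)
    length_factor = min(len(words) / 20, 1.0)  # assumed: 20+ words counts as "long enough"
    return alpha_ratio * length_factor


def filter_corpus(docs: List[str], threshold: float = 0.5) -> List[str]:
    """Keep only documents whose score meets the (assumed) threshold."""
    return [d for d in docs if quality_score(d) >= threshold]


if __name__ == "__main__":
    docs = [
        "click here!!! $$$ 123 456",
        "Photosynthesis converts light energy into chemical energy stored in glucose, "
        "which plants use to grow and which animals ultimately depend on for food",
    ]
    for d in docs:
        print(round(quality_score(d), 3), d[:30])
    print(len(filter_corpus(docs)))
```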

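How does Phi-3 Mini run on an iPhone 14?

- Thanks to its compact design, Phi-3 Mini's weights can be quantized to 4 bits so that the model takes up only about 1.8 GB, and it runs directly on the iPhone's A16 Bionic chip without an internet connection.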
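The 1.8 GB figure follows from simple arithmetic: 3.8 billion weights at 4 bits (half a byte) each is about 1.9 × 10^9 bytes, roughly 1.77 GiB, before activations and runtime overhead. The sketch below checks that number, converts the quoted 12 tokens per second into generation time, and shows what "4 bits" means using a simple symmetric per-group absmax quantizer. The quantization scheme is an assumption for illustration; the source does not say which 4-bit format was used on the iPhone.

```python
from typing import List, Tuple


def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone (no activations, KV cache, or runtime overhead)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


def quantize_int4(weights: List[float]) -> Tuple[List[int], float]:
    """Assumed scheme: symmetric absmax quantization of one group of weights
    to signed 4-bit integers in [-7, 7], sharing a single float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate reconstruction of the original weights."""
    return [v * scale for v in q]


def seconds_to_generate(num_tokens: int, tokens_per_second: float = 12.0) -> float:
    """Time to produce num_tokens at the quoted on-device speed."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    print(f"16-bit weights: {weight_memory_gib(3.8e9, 16):.2f} GiB")
    print(f"4-bit weights:  {weight_memory_gib(3.8e9, 4):.2f} GiB")
    q, scale = quantize_int4([0.7, -0.3, 0.1, 0.0])
    print(q, dequantize(q, scale))
    print(seconds_to_generate(240))  # a 240-token reply at 12 tokens/s
```

The same arithmetic explains why quantization matters on a phone: at 16 bits the weights alone would need about four times as much memory.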

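How did Phi-3 Mini perform in benchmark tests?

- Phi-3 Mini performed well in both internal and external testing, scoring as high as larger models on well-known benchmarks such as MMLU and MT-Bench, which reflects the efficiency of its architecture and the effectiveness of its training regimen.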
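MMLU is a multiple-choice benchmark, so a score is essentially accuracy over questions. One common way to score a model, assumed here rather than taken from the source, is to pick the answer option to which the model assigns the highest log-likelihood. `pick_answer` and `accuracy` are hypothetical helpers, and the per-option numbers are made-up placeholders, not real Phi-3 results.

```python
from typing import Dict, List


def pick_answer(option_logprobs: Dict[str, float]) -> str:
    """Choose the option letter with the highest model log-likelihood."""
    return max(option_logprobs, key=option_logprobs.get)


def accuracy(predictions: List[str], gold: List[str]) -> float:
    """Fraction of questions answered correctly."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold labels must align")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


if __name__ == "__main__":
    # Placeholder log-likelihoods for three questions (illustrative only).
    scored = [
        {"A": -2.1, "B": -0.4, "C": -3.0, "D": -1.7},
        {"A": -0.9, "B": -1.2, "C": -2.5, "D": -0.3},
        {"A": -1.1, "B": -1.0, "C": -0.2, "D": -2.2},
    ]
    gold = ["B", "A", "C"]
    preds = [pick_answer(s) for s in scored]
    print(preds, accuracy(preds, gold))
```

MT-Bench, by contrast, scores open-ended multi-turn answers with a judge model, so it cannot be reduced to this kind of option matching.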

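Did Microsoft also develop larger versions of Phi-3 Mini?

- Yes. Microsoft also experimented with larger versions called Phi-3 Small and Phi-3 Medium, with 7 billion and 14 billion parameters respectively, trained for longer on the same high-quality data (4.8 trillion tokens in total).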

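What safety testing was done on Phi-3 Mini?

- Phi-3 Mini went through extensive testing during development to make sure it does not produce harmful content, including thorough safety checks, red teaming, and automated testing.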
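Automated testing across multi-turn conversations can be pictured as a small harness. In the hypothetical sketch below, `model_reply` stands in for the model under test and `is_harmful` for a harmfulness classifier; both are placeholders invented for illustration, not Microsoft's red-teaming tooling. The harness replays scripted conversations turn by turn and reports the fraction of conversations in which any reply was flagged.

```python
from typing import Callable, List

Conversation = List[str]  # the user turns of one scripted conversation


def harmful_rate(
    conversations: List[Conversation],
    model_reply: Callable[[List[str]], str],
    is_harmful: Callable[[str], bool],
) -> float:
    """Fraction of conversations where at least one model reply is flagged."""
    flagged = 0
    for user_turns in conversations:
        history: List[str] = []
        for turn in user_turns:
            history.append(turn)
            reply = model_reply(history)  # the model sees the whole history so far
            history.append(reply)
            if is_harmful(reply):
                flagged += 1
                break  # one flagged reply is enough to count this conversation
    return flagged / len(conversations) if conversations else 0.0


if __name__ == "__main__":
    # Toy stand-ins: a "model" that gives in on the third user turn,
    # and a keyword check playing the role of the classifier.
    def toy_model(history: List[str]) -> str:
        user_turns_so_far = (len(history) + 1) // 2
        return "UNSAFE content" if user_turns_so_far >= 3 else "I can't help with that."

    def toy_classifier(text: str) -> bool:
        return "UNSAFE" in text

    convs = [["hi", "please?"], ["hi", "please?", "pretty please?"]]
    print(harmful_rate(convs, toy_model, toy_classifier))  # 0.5
```

Evaluating whole conversations rather than single prompts matters because a model may refuse at first and give in only after repeated pressure, which is exactly what the toy model simulates.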

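How does Phi-3 Mini's design support the open-source community?

- Phi-3 Mini uses a design similar to Llama 2 and the same tokenizer, with a vocabulary of 32,064 tokens, so it stays compatible with the tools developers already use while remaining flexible.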
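To give a feel for how a subword tokenizer like the one shared with Llama 2 is built, here is a toy byte-pair-encoding (BPE) training loop: it repeatedly merges the most frequent adjacent pair of symbols. This illustrates the general BPE idea on a tiny corpus only; it is not the actual Llama 2 / Phi-3 tokenizer, whose vocabulary and merge rules ship as fixed files with the model. The helper names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Word = Tuple[str, ...]


def pair_counts(words: Dict[Word, int]) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts: Counter = Counter()
    for word, freq in words.items():
        for a, b in zip(word, word[1:]):
            counts[(a, b)] += freq
    return counts


def merge_pair(words: Dict[Word, int], pair: Tuple[str, str]) -> Dict[Word, int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: Dict[Word, int] = {}
    for word, freq in words.items():
        out: List[str] = []
        i = 0
        while i < len(word):
            if i + 1 < len(word) and (word[i], word[i + 1]) == pair:
                out.append(word[i] + word[i + 1])
                i += 2
            else:
                out.append(word[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def train_bpe(corpus: List[str], num_merges: int) -> List[Tuple[str, str]]:
    """Learn up to num_merges merge rules, starting from single characters."""
    words: Dict[Word, int] = dict(Counter(tuple(w) for w in corpus))
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        counts = pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)  # on ties, the first pair seen wins
        words = merge_pair(words, best)
        merges.append(best)
    return merges


def encode(word: str, merges: List[Tuple[str, str]]) -> List[str]:
    """Apply learned merges, in order, to split a new word into subwords."""
    symbols: Dict[Word, int] = {tuple(word): 1}
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(next(iter(symbols)))


if __name__ == "__main__":
    merges = train_bpe(["hug", "hug", "hug", "pug", "hugs"], 2)
    print(merges)
    print(encode("hugs", merges), encode("pug", merges))
```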

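What progress has been made on Phi-3's multilingual support?

- Microsoft's development team is excited about improving multilingual support. Early tests with the similar small model Phi-3 Small have been promising, especially when its training data includes multiple languages.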

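How does Phi-3 Mini balance AI capability and size?

- Phi-3 Mini balances capability and size through data optimization. Its efficiency and accessibility pave the way for smarter, more adaptive, and more personalized everyday technology.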

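What are Phi-3 Mini's limitations?

- Because of its small size, Phi-3 Mini has less capacity than larger models and may struggle with tasks that require a lot of specific factual knowledge, such as answering complex trivia questions.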
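As the video points out, this limitation can be eased by connecting the model to a search engine that pulls up information when needed. A minimal sketch of that retrieve-then-generate pattern follows, assuming a tiny in-memory document list and a naive word-overlap ranking in place of a real search engine. `search` and `build_prompt` are hypothetical helpers; the resulting prompt would then be passed to the model.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; no stop-word removal, to keep the toy simple."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by shared words with the query (stand-in for a real search engine)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:top_k] if q & tokenize(d)]


def build_prompt(question: str, documents: List[str]) -> str:
    """Put retrieved facts in front of the question so the model need not memorize them."""
    context = "\n".join(f"- {d}" for d in search(question, documents))
    return f"Use the facts below to answer.\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    docs = [
        "Mount Everest is 8,849 metres tall.",
        "The Nile flows into the Mediterranean Sea.",
        "Canberra is the capital of Australia.",
    ]
    print(build_prompt("What is the capital of Australia?", docs))
```

The design choice is to keep facts outside the model: a small model only has to read and reason over the retrieved text, not store it in its weights.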

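What does Phi-3 Mini suggest about the future of AI?

- Phi-3 Mini is not only a breakthrough in data optimization but also a sign of where AI is headed. It shows that even a small, data-optimized model can perform as well as much larger systems, which may spur further innovation across the tech industry and could change how we interact with technology at a basic level.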

Outlines

📱 Microsoft's Phi-3 Mini: Powerful AI in Your Pocket

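Microsoft has released Phi-3 Mini, a small but powerful AI model that runs on an iPhone 14, delivering advanced AI capabilities while protecting user privacy. The model has 3.8 billion parameters and was trained on 3.3 trillion tokens, with performance comparable to much larger models such as Mixtral 8x7B and GPT-3.5. Its breakthrough lies in carefully upgraded training data: Microsoft focused on improving the quality and usefulness of the data rather than simply increasing model size. The model is built on a Transformer decoder with a default context length of 4K tokens, letting it handle broad and deep information. Phi-3 Mini is also designed to support the open-source community: it has a structure similar to Llama 2 and uses the same tokenizer, with a vocabulary of 32,064 tokens. Quantized to 4 bits, it runs directly on an iPhone 14 in only about 1.8 GB, generating more than 12 tokens per second without an internet connection, so advanced AI features can be used offline. In benchmarks, Phi-3 Mini scores on par with larger models on well-known tests such as MMLU and MT-Bench, demonstrating the efficiency of its architecture and the effectiveness of its carefully designed training regimen. Microsoft also experimented with larger versions, Phi-3 Small and Phi-3 Medium, with 7 billion and 14 billion parameters respectively, trained for longer on the same high-quality data; the results show that performance improves as the models grow. Phi-3 Mini was trained in phases that combine web data and synthetic data, with a focus on logical reasoning and specialized skills, and this step-by-step approach helps the model perform well without increasing its size.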
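The outline describes training in phases: first web data for general knowledge and language understanding, then a mix of more carefully filtered web data and synthetic data aimed at reasoning and specialized skills. The sketch below expresses such a schedule as per-phase sampling weights over data sources. The phase names, source labels, and 50/50 mixture are invented placeholders; the source gives no mixture ratios.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Phase:
    name: str
    mixture: Dict[str, float]  # data source -> sampling weight


# Placeholder schedule, chosen only to illustrate the two-phase idea.
SCHEDULE: List[Phase] = [
    Phase("phase 1: general knowledge", {"web": 1.0}),
    Phase("phase 2: reasoning and skills", {"filtered_web": 0.5, "synthetic": 0.5}),
]


def sample_sources(phase: Phase, n: int, seed: int = 0) -> List[str]:
    """Draw n data-source labels according to the phase's mixture weights."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    sources = list(phase.mixture)
    weights = [phase.mixture[s] for s in sources]
    return rng.choices(sources, weights=weights, k=n)


if __name__ == "__main__":
    for phase in SCHEDULE:
        batch = sample_sources(phase, 1000)
        counts = {s: batch.count(s) for s in phase.mixture}
        print(phase.name, counts)
```

Changing the mixture per phase, rather than the model size, is the lever this approach relies on.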

🔒 Phi-3 Mini: Safety and Privacy

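Safety and privacy were also carefully considered for Phi-3 Mini. Microsoft's team carried out thorough safety checks and automated testing to make sure the model does not generate harmful content, and in multi-turn conversations Phi-3 Mini showed a lower risk of producing harmful content than other models. Its development also emphasizes community engagement and support: it uses a design similar to Llama and stays compatible with tools developers already use. The design is flexible, including LongRoPE, which lets the model handle texts of up to 128K tokens. Running Phi-3 Mini on an iPhone 14 makes advanced AI easy to access while strengthening privacy, because all processing happens on the phone and no personal information needs to be sent to remote servers. Despite these strengths, its small size may limit it on tasks that require a lot of specific knowledge, such as answering complex knowledge-heavy questions. This can be mitigated by connecting the model to a search engine that retrieves information when needed. Microsoft's team is also excited about improving the model's multilingual abilities: early tests with Phi-3 Small showed promising results, especially when its training data included multiple languages, suggesting that future versions could support more languages and make the technology useful to people worldwide. By showing that a small, data-optimized model can perform as well as much larger systems, Microsoft is encouraging the industry to rethink how AI models are built and used, which could open up AI to areas where its computing demands were previously prohibitive. Phi-3 Mini is not only a data-optimization breakthrough but also a sign of where AI is headed: it balances capability and size, improves efficiency and accessibility, and paves the way for smarter, more adaptive, and more personalized everyday technology.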
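LongRoPE builds on rotary position embeddings (RoPE), which rotate pairs of query/key dimensions by an angle proportional to the token position, and rescales those rotations so positions far beyond the original training length land in a range the model has seen. The sketch below shows plain RoPE plus a single uniform rescaling factor (simple position interpolation). LongRoPE itself searches for non-uniform, per-dimension factors, which this toy does not reproduce. The base of 10,000 and the factor 32 (128K / 4K, taking 4K as 4,096 and 128K as 131,072) are assumptions.

```python
import math
from typing import List


def rope_frequencies(dim: int, base: float = 10000.0) -> List[float]:
    """theta_i = base^(-2i/dim), one frequency per pair of dimensions."""
    return [base ** (-2 * i / dim) for i in range(dim // 2)]


def apply_rope(x: List[float], position: float, scale: float = 1.0, base: float = 10000.0) -> List[float]:
    """Rotate consecutive (even, odd) pairs of x by position * theta_i / scale.

    scale=1.0 is ordinary RoPE; scale>1 squeezes all positions uniformly
    (position interpolation). LongRoPE instead uses searched, per-dimension factors.
    """
    out: List[float] = []
    for i, theta in enumerate(rope_frequencies(len(x), base)):
        angle = position * theta / scale
        a, b = x[2 * i], x[2 * i + 1]
        out += [a * math.cos(angle) - b * math.sin(angle),
                a * math.sin(angle) + b * math.cos(angle)]
    return out


if __name__ == "__main__":
    original_ctx, extended_ctx = 4096, 131072
    scale = extended_ctx / original_ctx  # 32.0
    v = [1.0, 0.0, 1.0, 0.0]
    # With scale 32, the last extended position gets the same rotation
    # as position 131071 / 32 (just under 4096) would without scaling.
    print(apply_rope(v, extended_ctx - 1, scale))
    print(apply_rope(v, (extended_ctx - 1) / scale))
```

A useful property of RoPE is that the dot product between a rotated query and key depends only on their relative distance, which is why rescaling positions keeps attention patterns coherent.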

Keywords

💡AI

💡Phi-3 Mini

💡Parameters

💡Privacy Protection

💡Transformer Decoder

💡Data Optimization

💡Safety Testing

💡Multilingual Support

💡Open-Source Community

💡Long Context

💡Dataset

Highlights

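Microsoft has made major progress in AI with Phi-3 Mini, shrinking powerful AI down to pocket size.

Phi-3 Mini runs on an iPhone 14, delivering advanced AI features without sacrificing privacy.

The model has 3.8 billion parameters and was trained on 3.3 trillion tokens, with performance comparable to much larger models.

Phi-3 Mini can be used on ordinary smartphones without extra computing help.

The training data was carefully upgraded; emphasizing data quality over quantity is key to improving model performance.

Phi-3 Mini is built on a Transformer decoder with a default context length of 4K tokens.

The model was designed with the open-source community in mind: it has a structure similar to Llama 2 and uses the same tokenizer.

Phi-3 Mini runs directly on an iPhone 14 and, quantized to 4 bits, takes up only about 1.8 GB.

The model generates more than 12 tokens per second without an internet connection.

Phi-3 Mini performs on par with larger models on AI benchmarks, demonstrating the efficiency of its architecture and the effectiveness of its training regimen.

Microsoft also experimented with Phi-3 Small and Phi-3 Medium, with 7 billion and 14 billion parameters respectively, trained for longer on the same high-quality data.

Unlike the conventional approach of simply scaling up, Phi-3 Mini was trained with step-by-step optimization that combines web data and synthetic data.

Phi-3 Small uses the tiktoken tokenizer, reflecting Microsoft's commitment to multilingual processing.

The team ran extensive tests, including automated safety checks, to make sure the model does not produce harmful content.

Phi-3 Mini's design is flexible and supports long-text processing of up to 128K tokens.

The creation of Phi-3 Mini encourages community participation and supports the tools developers already use.

Despite its many strengths, Phi-3 Mini's small size may limit it on tasks that require a lot of specific knowledge.

Microsoft's team is excited about improving Phi-3 Mini's multilingual abilities, and early tests show promising results.

With Phi-3 Mini, Microsoft shows that small, data-optimized models can rival much larger systems, encouraging the industry to rethink how AI models are built and used.

Phi-3 Mini is not only a data-optimization breakthrough but also a signal of where AI is headed, balancing capability, size, efficiency, and accessibility.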

Transcripts

Microsoft just made a big move in the AI world by shrinking powerful AI down to fit right in your pocket with Phi-3 Mini, and I mean that literally. This little powerhouse can run on your iPhone 14, bringing advanced AI capabilities without compromising your privacy. It's a game changer for anyone looking to use advanced technology simply and securely.

In the past, developing AI meant creating bigger and more complex systems, with some of the latest models having trillions of parameters. These large models are powerhouses of computing and have been able to perform complicated tasks in ways similar to how humans understand and reason. But these big models need a lot of computing power and storage, usually requiring strong cloud-based systems to work. Now, with Phi-3 Mini, there's a change: this model fits an advanced AI right in your hand, literally.

It has 3.8 billion parameters and was trained on 3.3 trillion tokens, making it as good as much larger models like Mixtral 8x7B and even GPT-3.5. What's even more impressive is that it can be used on regular smartphones without needing extra computing help.

One of the major breakthroughs with this model is how carefully its training data has been upgraded. Instead of just making the model bigger, Microsoft put a lot of effort into improving the quality and usefulness of the data it learns from during training. They understood that having better data, not just more of it, is key to making the model work better, especially when they have to use smaller computer systems. Phi-3 Mini came about by making the dataset it learns from bigger and better than the one its older version, Phi-2, used. This new dataset includes carefully chosen web data and synthetic data created by other language models. This doesn't just ensure the data is top-notch; it also greatly improves the model's ability to understand and create text that sounds like it was written by a human.

Now, the Phi-3 Mini model is built using a Transformer decoder, which is a key part of many modern language models, and it has a default context length of 4K tokens. This means that even though it's a smaller model, it is still able to handle a wide and deep range of information during discussions or when analyzing data.

Additionally, the model is designed to be helpful to the open-source community and to work well with other systems. It has a similar structure to the Llama 2 model and uses the same tokenizer, which recognizes a vocabulary of 32,064 tokens, so developers can bring their existing skills and tools to Phi-3 Mini without having to start from scratch.

One of the coolest things about Phi-3 Mini is that it can run right on your iPhone 14. Thanks to the smart way it's built, its weights can be squeezed down to just 4 bits, so it only takes up about 1.8 GB of space. And even with its small size, it works really well: it can generate more than 12 tokens per second while running directly on the iPhone's A16 Bionic chip, without needing any internet connection. What this means is pretty huge: you can use some really advanced AI features anytime you want without having to be online. This keeps your information private, and everything runs super fast.

When it comes to how well it performs, Phi-3 Mini has really shown its strength in both in-house and outside tests. It scores just as well as bigger models do on well-known AI benchmarks like MMLU and MT-Bench. This demonstrates not only the efficiency of its architecture but also the effectiveness of its training regimen, which was meticulously crafted to maximize the model's learning from its enhanced dataset. When developing it, they also tried out larger versions of the model called Phi-3 Small and Phi-3 Medium, which have 7 billion and 14 billion parameters respectively. These bigger models were trained using the same high-quality data but for longer, totaling 4.8 trillion tokens. The results from these models were really good, showing major improvements in their abilities as they got bigger. For instance, Phi-3 Small and Phi-3 Medium scored even higher on the MMLU and MT-Bench tests, proving that making the models bigger can be very effective without using more data than necessary.

But the way they trained Phi-3 Mini was different from the usual method of just making models bigger and using more computing power. The training process started with web sources to teach the model general knowledge and how to understand language. Then it moved to a stage that combined even more carefully chosen web data with synthetic data focused on logical thinking and specialized skills. This careful, step-by-step approach helped the model perform really well without just making it bigger. In training the model, they also made use of the latest AI research, including new ways of breaking text down into tokens and focusing the model's attention. For example, the Phi-3 Small model uses a tokenizer called tiktoken to handle multiple languages better, showing Microsoft's commitment to improving how the model works in different languages.

After the model's development, the team did a lot of testing to make sure it wouldn't produce harmful content. This included thorough safety checks, red teaming (where they tried to find weaknesses), and automated testing. These steps are very important as AI becomes a bigger part of everyday gadgets and handles more important tasks, and Phi-3 Mini has been shown to produce harmful content less often than other models in conversations that have multiple turns.

This lower risk of the model saying something inappropriate or harmful is key for its use in the real world. The creation of Phi-3 Mini also focused on getting the community involved and supporting it, by using a design similar to Llama and making sure it works with tools developers already use. Plus, the model's design is flexible: it includes features like LongRoPE, which lets the model handle much longer texts, up to 128K tokens.

Using Phi-3 Mini on your iPhone 14 really changes the game by making advanced AI technology easy to access right on your phone. And the best part, in my opinion, is that it ramps up our privacy. We don't have to worry about sending our personal info to far-off servers to use AI apps anymore; everything happens right on our phones, which keeps our data safe and private, just the way it should be.

Now, although Phi-3 Mini has many benefits, like all technologies it has its limits. One big issue is that it doesn't have as much capacity as larger models because of its smaller size. For example, it might struggle with tasks that need a lot of specific information, like answering complex questions in a trivia game.

However, this problem might be lessened by connecting the model to search engines that can pull up information when needed, as shown in tests using the Hugging Face Chat UI. Looking ahead, Microsoft's development team is excited about improving the model's ability to work in multiple languages. Early tests with a similar small model called Phi-3 Small have been promising, especially when it includes data from many languages. This suggests that future versions of the Phi series could support more languages, making the technology useful to people all over the world. Moreover, by showing that a smaller, data-optimized model can perform as well as much bigger systems, Microsoft is encouraging the industry to think differently about how AI models are made and used. This could lead to new, creative ways to use AI in areas where it was previously too demanding in terms of computing power.

Microsoft's Phi-3 Mini marks an important advancement in bringing powerful AI tools into our daily lives in a practical way. As this technology keeps improving, it is set to broaden what we can do with our personal devices, enhancing our experiences and abilities in new and exciting ways. The ongoing development of such models will likely inspire more innovation throughout the tech industry, potentially transforming how we interact with technology at a basic level. And when you think about it, Phi-3 Mini isn't just a data-optimization breakthrough; it's actually a sign of where AI is headed. It balances power and size with efficiency and accessibility, setting the stage for smarter, more adaptive, and more personal technology in our everyday lives. All right, don't forget to hit that subscribe button for more updates. Thanks for tuning in, and we'll catch you in the next one.
