Building long context RAG with RAPTOR from scratch

Summary

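TL;DR: Lance discusses the challenges long-context language models (LLMs) face when retrieving from and processing large document sets. He introduces a new method, Raptor, which uses clustering and summarization to build a document tree for retrieving and integrating information effectively. The approach makes it possible to work with document collections larger than a single model's context window without exceeding that limit. Lance demonstrates Raptor on long documents and provides code so that viewers can try and apply the method.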

Takeaways

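- 🔍 Lance introduces Raptor, a new method for retrieval with long-context language models (LLMs).

- 📈 He discusses the strengths and costs of long-context LLMs such as Gemini and Claude 3.

- 📝 Lance used a long-context LLM to build a code assistant that generates answers directly, with no retrieval.

- ⏱️ He points out the cost and latency of long-context generation and compares its efficiency with RAG systems.

- 🤔 Lance explores using a local LLM such as Mistral 7B v2, which has a 32,000-token context window.

- 🌳 He proposes building a document tree to address the challenges of retrieval over long documents.

- 📚 Raptor builds a hierarchy over the documents through clustering and recursive summarization.

- 🔢 Raptor uses a Gaussian mixture model (GMM) to determine the optimal number of clusters.

- 🔄 Lance shows how to use Anthropic's new model to summarize documents for retrieval.

- 🔍 He stresses that Raptor applies to large document sets that exceed an LLM's context limit.

- 📊 Lance demonstrates how retrieval with Raptor combines raw documents and summary documents.

- 💡 He encourages viewers to try Raptor and provides the code for further exploration.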

Q & A

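Which long-context LLMs does Lance mention in the video?

-Lance mentions Gemini and Anthropic's Claude 3.

What does Lance use long-context LLMs for?

-Lance uses a long-context LLM to build a code assistant that answers coding questions about the LangChain Expression Language documentation.

Which factors does Lance consider when evaluating long-context LLMs?

-Lance considers p50 latency, p99 latency, and cost.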
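As a rough illustration of the two latency figures, the sketch below computes p50 and p99 with NumPy. The latency values are made up for the example and are not the numbers from the video's LangSmith dashboard; `latency_summary` is a hypothetical helper name.

```python
import numpy as np


def latency_summary(latencies_s):
    """Return the 50th and 99th percentile of per-generation latencies (seconds)."""
    arr = np.asarray(latencies_s, dtype=float)
    return {
        "p50": float(np.percentile(arr, 50)),
        "p99": float(np.percentile(arr, 99)),
    }


# Hypothetical latencies for a 20-question eval run (illustrative values only).
example_latencies = [31, 33, 34, 35, 35, 36, 36, 37, 38, 38,
                     39, 40, 41, 42, 44, 46, 52, 61, 95, 420]
summary = latency_summary(example_latencies)
print(summary)
```

With only 20 generations, the p99 lands very close to the single slowest run, so one outlier dominates that figure.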

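What is the Raptor method that Lance describes?

-Raptor is a new retrieval strategy: it embeds and clusters documents, then recursively summarizes each cluster to build a document tree that supports retrieval with long-context LLMs.

How does Raptor help address the limitations of long-context LLMs?

-By building a document tree of summaries, Raptor allows retrieval without splitting documents and can integrate information from multiple documents. This addresses a weakness of plain KNN retrieval, where a fixed k may miss some of the documents an answer needs.

Which Raptor implementation details does Lance mention?

-Lance mentions using a Gaussian mixture model (GMM) to determine the number of clusters, UMAP for dimensionality reduction, and thresholding during clustering so that a document can belong to more than one cluster.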
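The thresholding step can be sketched as follows. This is a minimal illustration, not the paper authors' code: it assumes you already have a matrix of per-cluster membership probabilities (for instance, what scikit-learn's `GaussianMixture.predict_proba` returns), and both `assign_clusters` and the threshold value of 0.1 are hypothetical choices for the example.

```python
import numpy as np


def assign_clusters(probs, threshold=0.1):
    """Map each document to every cluster whose probability exceeds the threshold.

    probs: array of shape (n_docs, n_clusters); each row sums to 1.
    Returns one list of cluster indices per document.
    """
    probs = np.asarray(probs, dtype=float)
    return [np.where(row > threshold)[0].tolist() for row in probs]


# Illustrative probabilities for three documents and three clusters.
example_probs = [
    [0.90, 0.05, 0.05],  # clearly cluster 0
    [0.50, 0.45, 0.05],  # straddles clusters 0 and 1
    [0.02, 0.03, 0.95],  # clearly cluster 2
]
print(assign_clusters(example_probs))
```

A hard assignment would take only the argmax of each row; thresholding keeps every cluster that clears the bar, which is what lets one document feed into several summaries. If the threshold is set higher than a row's largest probability, that document ends up in no cluster, so the value needs some care.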

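How does Lance handle documents that exceed a long-context LLM's context window?

-Lance uses Raptor: he embeds each full document and builds a document tree on top, so retrieval remains effective even when the document set is larger than the LLM's context window.

What is the KNN that Lance mentions?

-KNN (k-nearest neighbors) is a distance-based retrieval method that returns the k documents most similar to the query.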
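A minimal sketch of document-level KNN retrieval, assuming each full document and the query have already been embedded into vectors by some embedding model (not shown). The function name `top_k_documents` and the use of cosine similarity are choices made for this example.

```python
import numpy as np


def top_k_documents(query_vec, doc_vecs, k=3):
    """Return the indices of the k documents most similar to the query (cosine)."""
    doc_vecs = np.asarray(doc_vecs, dtype=float)
    query_vec = np.asarray(query_vec, dtype=float)
    doc_norms = np.linalg.norm(doc_vecs, axis=1)
    query_norm = np.linalg.norm(query_vec)
    sims = doc_vecs @ query_vec / (doc_norms * query_norm)
    # Sort by descending similarity and keep the first k indices.
    return np.argsort(-sims)[:k].tolist()


# Toy 2-D "embeddings" for three documents.
docs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(top_k_documents([1.0, 0.1], docs, k=2))
```

The brittleness discussed in the video shows up in the `k` argument: if an answer needs three documents and `k` is 2, one of them is never retrieved.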

Lance 在视频中提到的Anthropic的CLAE 3模型有什么特点?

-Anthropic的CLAE 3模型是一个新发布的模型,具有强大的性能,适合用于长文本的总结任务。

Lance 在视频中提到了哪些关于文档树构建的步骤?

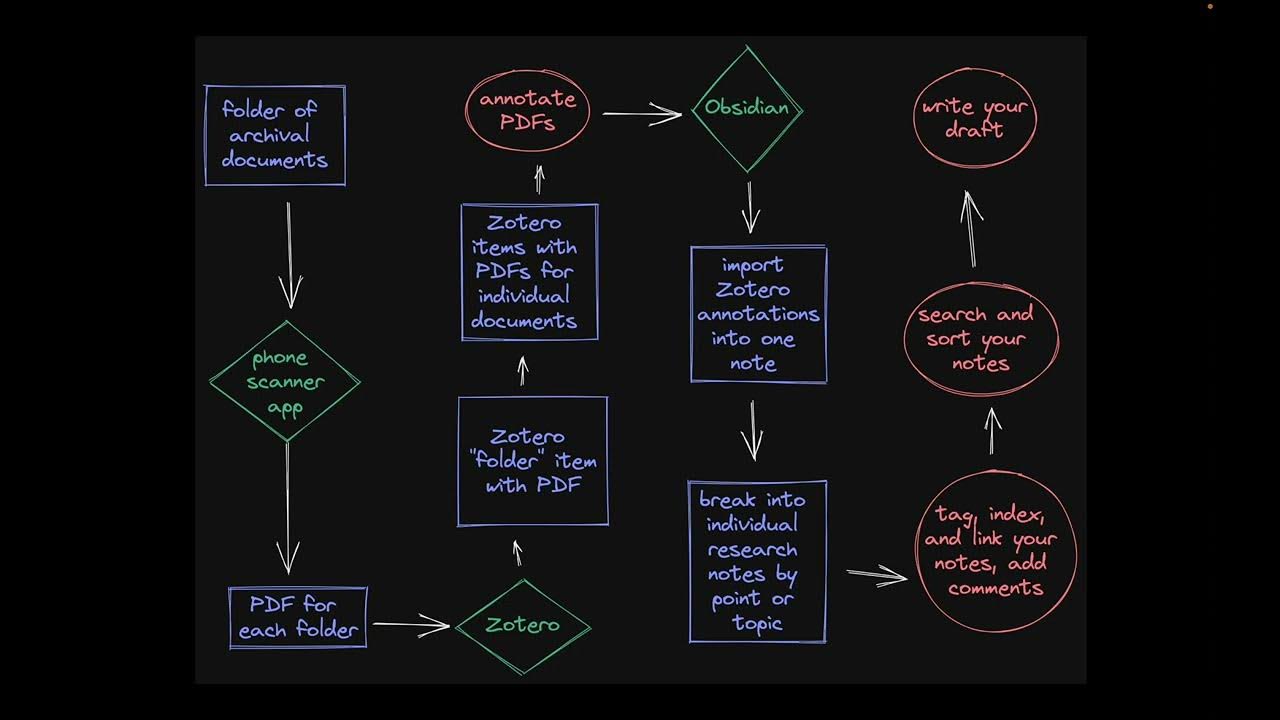

-文档树构建的步骤包括:嵌入原始文档,通过聚类分组文档,对每个聚类进行总结,然后递归地执行这些步骤,直到得到一个单一的聚类。
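The loop described in these steps can be sketched as below. This is an illustrative skeleton, not the notebook code from the video: `build_tree`, `embed_fn`, `cluster_fn`, and `summarize_fn` are hypothetical names, and the three callables stand in for a real embedding model, the GMM-based clustering, and an LLM summarization call.

```python
from typing import Callable


def build_tree(
    leaf_texts: list[str],
    embed_fn: Callable[[list[str]], list[list[float]]],
    cluster_fn: Callable[[list[list[float]]], list[list[int]]],
    summarize_fn: Callable[[list[str]], str],
    max_levels: int = 3,
) -> dict[int, list[str]]:
    """Return {level: summaries}; level 1 summarizes clusters of the leaves."""
    summaries_by_level: dict[int, list[str]] = {}
    current = leaf_texts
    for level in range(1, max_levels + 1):
        vectors = embed_fn(current)
        # Each cluster is a list of indices into `current`; a text may appear
        # in more than one cluster.
        clusters = cluster_fn(vectors)
        summaries = [summarize_fn([current[i] for i in idx]) for idx in clusters]
        summaries_by_level[level] = summaries
        if len(clusters) <= 1:
            break  # a single cluster means we have reached the root
        current = summaries  # the next level clusters the summaries themselves
    return summaries_by_level
```

The loop stops either when one cluster remains or when `max_levels` is reached, which mirrors the two stopping conditions mentioned in the transcript.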

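What is the advantage of document-tree retrieval that Lance mentions?

-Document-tree retrieval can integrate information from multiple documents, which makes it more robust for questions that need information combined across documents, and it adapts to the retrieval needs of different types of questions.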

Outlines

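🗣️ Lance on long-context LLMs and the Raptor method

Lance describes how he uses long-context language models (LLMs) in projects such as a code assistant, which generates answers directly with a long-context LLM and needs no retrieval. He raises some considerations with this approach, including cost and response time, and explores whether a local LLM could be swapped in. He then introduces Raptor, a lightweight retrieval strategy suited to long-context models.

🔍 Exploring Raptor: a retrieval strategy for long context

Raptor is a new method that embeds and clusters documents, then recursively summarizes them to build a document tree. This makes it possible to integrate information from multiple documents, addressing the problem that KNN retrieval may not return everything an answer needs. Raptor performs retrieval over the embedded documents and summaries together, which lets a long-context model draw on consolidated information.

📊 Analyzing the document distribution and applying Raptor

Lance examines the token-count distribution of a set of documents, then uses Anthropic's new model to summarize them and OpenAI embeddings to embed them. He explains the clustering process, including the use of a Gaussian mixture model (GMM) to determine the number of clusters and how UMAP and thresholding improve the clustering. The process allows a document to belong to more than one cluster, which adds flexibility.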
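Choosing the number of clusters with a GMM and BIC can be sketched with scikit-learn as below. This is a simplified illustration under stated assumptions, not the authors' released clustering code: it skips the UMAP reduction and the local/global clustering passes mentioned in the video, and `optimal_cluster_count` and its default parameters are hypothetical.

```python
import numpy as np
from sklearn.mixture import GaussianMixture


def optimal_cluster_count(embeddings, max_clusters=10, random_state=0):
    """Fit GMMs with 1..max_clusters components and return the count with lowest BIC."""
    embeddings = np.asarray(embeddings, dtype=float)
    # A GMM cannot have more components than there are samples.
    max_clusters = min(max_clusters, len(embeddings))
    candidates = range(1, max_clusters + 1)
    bics = [
        GaussianMixture(n_components=n, random_state=random_state)
        .fit(embeddings)
        .bic(embeddings)
        for n in candidates
    ]
    return int(candidates[int(np.argmin(bics))])


# Toy data: three well-separated 2-D blobs standing in for document embeddings.
rng = np.random.default_rng(0)
centers = [(0.0, 0.0), (5.0, 5.0), (-5.0, 5.0)]
points = np.vstack([rng.normal(loc=c, scale=0.3, size=(60, 2)) for c in centers])
print(optimal_cluster_count(points, max_clusters=6))
```

BIC rewards a better fit but penalizes extra parameters, so adding components stops paying off once the real groups are covered. Real text embeddings have hundreds or thousands of dimensions, which is one reason a reduction step such as UMAP is applied before fitting.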

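🛠️ Implementing Raptor and building the index

Lance shows how to apply Raptor to real documents, building a document tree through iterative clustering and summarization. He creates an index that contains the raw documents and the summaries from every level, so retrieval can draw on both raw pages and summary pages and serve different types of questions.

📘 Applicability of Raptor and outlook

Lance notes that although the documents in this example total only about 60,000 tokens, which fits in a long-context window, Raptor also applies to larger document sets that may exceed an LLM's context limit. He encourages people to try the method and says that all the related code will be made public for experimentation.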

Keywords

💡Retrieval

💡Long Context LLMs

💡Raptor

💡Document Tree

💡Clustering

💡Summarization

💡Context Window

💡K-Nearest Neighbors (KNN)

💡Cost

💡Performance

Highlights

Lance from LangChain discusses retrieval and long context LLMs.

A new method called Raptor is introduced for long context LLMs.

Long context LLMs like Gemini and Claude 3 can handle up to a million tokens.

Lance used long context LLMs for a code assistant project.

Long context LLMs can directly perform answer generation without retrieval.

Evaluations were run on 20 questions using long context LLMs.

P50 latency and P99 latency were measured for the evaluations.

Cost per generation for long context LLMs was discussed.

Considerations for using long context LLMs versus RAG systems were highlighted.

Mistral 7B v2, a local LLM with a 32,000 token context window, was mentioned.

The idea of indexing at the document level was proposed for retrieval.

Building a document tree for retrieval was suggested to address limitations.

Raptor's approach to clustering and summarizing documents was explained.

Raptor allows for embedding full documents and building a document abstraction tree.

The process of clustering, summarizing, and indexing documents was demonstrated.

Raptor's method can integrate information across different documents for retrieval.

The Raptor approach is applicable for cases where document size exceeds the context limit of LLMs.

The Raptor code and process will be made public for experimentation.

Transcripts

Hi, this is Lance from LangChain. I'm going to be talking about retrieval and long-context LLMs, and a new method called Raptor.

Over the last few weeks there's been a lot of talk about "is RAG dead?" with the advent of new long-context LLMs like Gemini with a million tokens and Claude 3, now with up to a million tokens. It's an interesting question. I've recently been using long-context LLMs for certain projects. For example, this code assistant that I put out last week basically used a long-context LLM to answer coding questions about our docs on LangChain Expression Language. I'll zoom in here so you can see it. These are around 60,000 tokens of context: we take the question, we take the docs, we produce an answer. This is really nice. No retrieval required: just context-stuff all these docs and perform answer generation directly.
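A minimal sketch of the context-stuffing pattern just described, with a guard for the case where the docs do not fit the model's window. The helper names, the prompt wording, and the rough four-characters-per-token estimate are all assumptions for illustration; a real setup would use the model's own tokenizer and prompt.

```python
def approx_token_count(text: str) -> int:
    """Crude stand-in for a real tokenizer: roughly 4 characters per token."""
    return max(1, len(text) // 4)


def build_stuffed_prompt(question: str, docs: list[str], context_limit: int) -> str:
    """Concatenate every document into one prompt, refusing if it cannot fit."""
    context = "\n\n---\n\n".join(docs)
    prompt = (
        "Answer the question using only the documentation below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
    needed = approx_token_count(prompt)
    if needed > context_limit:
        raise ValueError(f"prompt needs ~{needed} tokens, limit is {context_limit}")
    return prompt
```

With roughly 60,000 tokens of docs, this check passes for a window of several hundred thousand tokens and fails for a 32,000-token one, which is the situation that motivates a retrieval step.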

So I'm a big fan of using long-context LLMs in this way, but there are some considerations I wanted to point out here. I ran evaluations, and for those evaluations I look at 20 questions, so basically it's 20 generations. Now look here: this is the LangSmith dashboard that I used for those evals, and you can see something kind of interesting. The p50 latency tells you the 50th-percentile latency for each of those generations (again, remember there's 20), so it's around 35 to 46 seconds depending on the trial. This is on the same dataset, the same 20 questions. There's some variance run to run, so that's kind of expected. And the p99 is up to like 420 seconds, which is really long in that case.

But maybe more interestingly, if you look at the cost (again, there's 20 questions), the cost is ranging from maybe a dollar to a dollar thirty per generation. So cost and latency are things to think about when you're talking about using really long-context LLMs, as opposed to a RAG system where you're performing retrieval of much smaller, more directed chunks for your question.

Now, the other thing that came up is a lot of people asked, "Hey, can you swap this out and use a local LLM?" My go-to local LLM is Mistral 7B v2, which actually has a 32,000-token context window, but that's still a little bit small relative to my docs, which are around 60,000 tokens. So I couldn't just context-stuff them as I did here.

These three considerations led me to think: I really like working with long-context models, and it's absolutely going to be the continuing thing, but are there retrieval strategies that are lightweight and easy to use with long-context models, that preserve the ability to utilize a lot of context but can address some of these limitations? In particular, this last piece was important because this is something I want to do in the near term, and I need a nice lightweight retrieval strategy that still uses long context but can operate in cases where my documents are maybe just a little bit bigger than my context window, in this case around 2x.

So I put this out on Twitter and said, "Hey, has anyone come across good, maybe minimalist, splitting strategies for long-context LLMs?" I wanted to do RAG with Mistral 7B with a 32,000-token context window, but my docs are 60,000 tokens. I can't just context-stuff them, but I also don't want some very fine-scale chunking thing. We don't want to mess with all that; we want something simple that can just work across larger documents.

One point that was raised, which is a really good one, is: just index at the document level. You can take full documents and just embed them directly. It's a fair point. Then you do something like KNN on those embedded documents. So again, no chunking of any documents, no splitting of documents: you have your set of documents, embed each one, and just retrieve at the document level. That's a pretty good idea; that's pretty reasonable.

Another idea that came up, though, is this idea of building a document tree. Part of the reason for that is when you talk about something like KNN, or k-nearest-neighbor retrieval, on a set of embedded documents, it is true that sometimes an answer requires maybe two or three different documents integrated in order to answer it. Now, if you context-stuff everything, that's not a problem, because it's all there. If you're doing retrieval, you're setting your k parameter to be some value, and it's kind of brittle: does k need to be four, or five, or six to capture all the context needed for certain particular questions? It's kind of hard to set that. So this idea of building a document tree is an interesting way to potentially address this challenge with basic KNN.

A paper, Raptor, came out recently on this exact idea, and their code was recently open-sourced, which led the folks at LlamaIndex to come out with a LlamaPack for it, which is great. The idea is pretty interesting, so I wanted to lay it out here and talk about how it might benefit this exact case of long-context retrieval.

The intuition is pretty simple. First, we take a set of documents. Note that these documents can be any size; in their case they're just chunks, so they're like 100 tokens, but it doesn't matter. So we start with a set of raw documents. We embed them and then we cluster them. This clustering process groups together like documents. Then we do one important thing: we summarize the information in that cluster into what we call a more abstract, or higher-level, summary of that content. And we do that recursively until we end up with one cluster. That's it. So what's happening is you're starting with the set of what they call leaves, or raw documents; you do a grouping via clustering; you do a summarization step, so you're kind of compressing; and then you do it again. The idea is that these mid-level, or eventually root-level or highest-level, summaries can consolidate information from different places in your documents. Then they basically just embed those summaries along with the raw leaves and perform retrieval. We'll talk about that a little bit later, but what they show is that just doing retrieval on all of these together, as a whole pool, performs best. That's a nice result: it's pretty easy then to just index that and use it.

I will make a note that their paper talked about these leaves being chunks, which I didn't love, because I want to work with long-context models and I don't want to deal with chunking at all. I replied to Jerry's tweet on this, and Jerry made a fair point that this can scale to anything. For example, those leaves can be full documents; they don't have to be chunks. That's a completely reasonable point. So again, you can think about this as: idea one was let's just take each document and embed it. Idea two is let's embed each document like we did, and we can also build a document abstraction tree on top and embed those. So we have these higher-level summaries in our embeddings, which we can retrieve from if we need an answer to consolidate information from a small set of documents. So it's a little bit more robust, maybe, to this problem, which is that if I'm just doing KNN on raw documents and I need information from two or three documents, I'm not guaranteed to always get that, because of this k parameter that I set: I'm only retrieving k docs. Whereas here I'm building these docs that contain information from multiple leaves, or multiple sub-docs so to speak, and it can capture that information in a nice way, such that it can integrate information across different individual leaves or individual documents. So that's the key point. When you think about working with long-context models, of course context stuffing is a great option if you can do it, but there are some other interesting ideas: one is just embedding full documents, and another is this idea of documents plus an abstraction tree.

So let's go ahead and just build Raptor, because it's pretty interesting. To do this, I'm going to look at Claude 3, which just came out today. It's a new set of models from Anthropic with really strong performance, and it should be really good for this use case, because what I want to do is perform summaries of individual documents, and I don't really want to worry about the size of those documents. I'm going to use the same set of documents that I previously did with the code generation example (that video came out last week). I have an empty notebook here. We just do a few pip installs, I'm setting a few environment variables for LangSmith, and now I'm just going to grab my docs. So that's right here.

This is going to grab around 33 web pages of documentation for LangChain related to LangChain Expression Language. What I'm going to plot here is a histogram of the token counts of every page. A bunch are kind of small, less than 2,000 tokens, which is fine and easy to work with. A few are pretty big, up to like 12,000 tokens. That gives you a sense of the distribution of pages that we want to work with, and we're going to apply this approach to those pages. I'm going to use Anthropic's new model to do that, and I'll use OpenAI embeddings. So that's fine, I set those.

Now, this code was released by the authors of the paper, and I'm going to explain how this works in a little bit, but for right now I'm just going to copy this over. This is all going to be accessible to you in the notebook that we're going to make public. So this is all the clustering code, and we're going to talk about what it's doing later. I added comments and docstrings to this, so it's a little bit more understandable.

Here's some code that I wrote that basically is going to orchestrate the process of cluster, summarize, and then iteratively perform that until you end up with a single cluster. So there we go: I'm going to copy this code over, kick this process off, and then walk through it while this is running. So that's running now.

First I want to explain how this clustering process works. It's kind of interesting. The idea incorporates three, actually four, important points. It's using this GMM, this Gaussian mixture model, to model the distribution of the different clusters. What's cool about this approach is that you don't actually tell it to group the data into some number of clusters, like you do in some other approaches where you set a parameter like n clusters. It can infer, or determine, the optimal number of clusters, and it uses this BIC. You can dig into this in more detail if you want, but the intuition is that this approach will attempt to determine the number of clusters automatically for you, and it's also modeling the distribution of your individual documents across the clusters.

It uses this UMAP, which is basically a dimensionality reduction approach, to improve the clustering process. If you want to really read into this, you should just go and do that; the intuition is that this helps improve clustering. It also does clustering at what they call local and global levels, so it tries to analyze the data at two different scales, looking at patterns within smaller groups and then within the full dataset, to try to improve how you're going to group these documents together. And it applies thresholding to assign the group assignment, or cluster assignment, for every document.

So this is really the idea. Here's all my documents; let's look at this one. What's happening is it's using this GMM to assign a probability that this document belongs to each one of our clusters. So here's cluster one, here's cluster two, here's cluster three: each cluster will get a probability. This thresholding is then applied to those probabilities, such that a document can actually belong to more than one cluster. That's really nice, because in a lot of other approaches it's mutually exclusive, so a document can only live in one cluster or another, but with this approach it can belong to multiple clusters. That's a nice benefit of this approach. I think that's all I want to say initially about this clustering strategy, but you should absolutely have a look at the paper, which I will also ensure that we link.

Right now let's go look at the code, so we can see that it's performing this cluster generation, and let's look at what it's doing. It really follows what we just talked about. We have a set of texts, and in this case my texts are just those 33 web pages that I'm passing in. We can look at that: what I passed in is these leaf texts, and leaf texts I define as my docs. Let's go back and look at our diagram so we can follow along. Here we go: these leaves are my web pages. That's it. So here's my leaf text, and let's look at the length there. OK, there's 31 of them, so that's fine.

What's happening is those first get embedded, as expected, so here's the embeddings, and then they get clustered. This perform-clustering step is taken directly from the code provided by the authors of the paper, so it's doing that process I just talked about, basically cluster assignment. We get our cluster labels out and put those in a data frame, so then we have our clusters; you can see that here. Because each document can belong to more than one cluster, we expand out the data frame so that the cluster column may contain duplicates for a single document. One document can live in multiple clusters, and we just flatten it out to show that.

Then all we do is get the whole list of clusters here and define a summarization prompt. Pretty simple. We have our data frame, and we just fish out all the texts within each cluster. That's all we're doing here: for each cluster, get all the text, plumb it into our summarization prompt, and generate the summary. Here's our summary data frame. That's really it. So again: iterate through our clusters, get the text for every cluster, summarize it, and write that out to a data frame. Here's our cluster data frame and here's our summary data frame from that function.

And this is just orchestrating that process of iteration. I provide an n-levels parameter, so you can say do this n times, or until the number of clusters is equal to one. So basically this is saying: continue until either we've done n levels in our tree or the number of clusters is one. Keep doing that, and that's it. You can see we've actually run that process; we have our results here.

Now what we can do, pretty simply, is put those into an index. We can use Chroma as a vector store. Here's some really simple code to do that: we're just iterating through our results and getting all our summaries out. Maybe I should make this a little bit more clear: first we take all those raw documents and add those to our text list, then we get all of our summaries from our tree and add those, and we just index all of them. So let's do that. These are all going to be added to Chroma. And finally, we can set up a retrieval chain that is using this index, which contains both our leaves, so all those raw web pages, and these higher-level summary pages. That's all that's happening here. We pull in a RAG prompt, here's our retriever, here's our question. So let's give this a shot.

This is running, and I want to bring you back to the diagram so you can see what's going on here. Again, we took our web pages, 31 of them; we cluster them, we summarize them, and we do that iteratively. Then we take those summaries that we generated, along with the raw web pages, and we index all of them. That's it, and we can use that index for retrieval. This is what we might call a long-context index, because it contains raw web pages, which vary from 2,000 to 12,000 tokens, and it contains these higher-level summaries in case we need an integration of information across those pages, which may or may not be captured just using KNN retrieval. So that's the big idea.
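The pooling step, where raw pages and summaries from every level go into one index, can be sketched as below. This is a hypothetical helper, not the notebook code: it assumes the tree is available as a dict mapping each level number to its list of summaries, and it stops short of embedding the texts or adding them to a vector store such as Chroma.

```python
def build_index_records(leaf_texts, summaries_by_level):
    """Flatten raw pages and all summary levels into one list of records.

    Level 0 marks a raw page; higher levels mark increasingly abstract summaries.
    """
    records = [{"text": text, "level": 0} for text in leaf_texts]
    for level in sorted(summaries_by_level):
        records.extend(
            {"text": summary, "level": level}
            for summary in summaries_by_level[level]
        )
    return records


# Toy inputs standing in for web pages and generated summaries.
pages = ["page one text", "page two text"]
tree = {1: ["summary of pages one and two"], 2: ["root summary"]}
for record in build_index_records(pages, tree):
    print(record["level"], record["text"])
```

Keeping the level alongside each text is an addition for this example; it makes it easy to see afterwards whether a retrieved item was a raw page or a summary.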

OK, this ran and we got our answer. We can check LangSmith, and we can see here's our retriever, and here's the raw documents. It looks like it retrieved some higher-level summaries as well as some raw leaves: this is a raw web page, and some of these are more like summary pages, which it looks like we produced. What's cool about this is you can retrieve from a combination of your raw pages as well as these higher-level summaries, which gives you some robustness and semantic coverage for different types of questions that require different resolutions of abstraction or detail. For a really detailed code question you might retrieve directly from your raw pages, but for a higher-level question that integrates information from a bunch of pages you might retrieve from these mid-level or even top-level summaries. So it's a cool approach, and it integrates nicely with long-context models.

I know one thing that will come up here is: "Look, your full context was only 60,000 tokens. You could just stuff all of that into one of these models; you didn't need to do any of this." That is absolutely true for this case, but I think the high-level point is that it's not true for every case. For example, this exact set of documents I want to use with Mistral, and Mistral is only 32,000 tokens. So this is a really nice approach for that case, where I can guarantee that I can index across all these pages but I won't exceed, or am unlikely to exceed, the context limit of my LLM, because none of these individual pages exceed 32,000 tokens. And again, this scales to arbitrarily large sizes. It is true that this set of documents is only 62,000 tokens, but of course there are much larger corpora, which could extend beyond even the 200,000 of Claude 3, in which case this type of approach of indexing across documents and building these mid-level and high-level summaries can be applicable. So it's a cool method and a neat paper. I definitely encourage you to experiment with it. All this code will be available for you to work with, and I think that's about it. Thanks very much.
