Stop paying for ChatGPT with these two tools | LMStudio x AnythingLLM

Summary

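TLDR: In this video, Timothy Carambat, founder of Mintplex Labs, shows how to easily run a capable LLM (large language model) application locally. With two tools, LM Studio and AnythingLLM Desktop, you get the best experience on a laptop or desktop that has a GPU. The video walks through installing and setting up both programs on Windows. It shows how to download and use different models in LM Studio and how to connect LM Studio to AnythingLLM for a more complete LLM experience. It also highlights that AnythingLLM is open source and invites users to contribute and build their own integrations.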

Takeaways

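- 😀 Timothy Carambat is the founder of Mintplex Labs and the creator of AnythingLLM.

- 🚀 AnythingLLM and LM Studio are both one-click-install applications for easily running capable conversational AI locally.

- 🖥️ Windows is supported and used in the demo. A GPU is recommended for a better experience, although a CPU alone also works.

- 🔧 AnythingLLM is an all-in-one, fully private desktop chat application that can connect to almost anything, and it is fully open source.

- 📦 LM Studio provides a simple interface for downloading and managing different AI models, which makes it easy to explore and experiment.

- 🔑 LM Studio's built-in chat client is handy for quickly testing a model, but its features are fairly basic.

- ⚙️ Enabling LM Studio's local server lets you connect its model to AnythingLLM and use more advanced chat features.

- 📈 AnythingLLM lets users add and embed documents or web page content to give the AI more context.

- 🔍 AnythingLLM's embedding feature enables more precise information retrieval and answer generation.

- 💡 The tutorial shows how to set up and use a capable chat AI system locally without paying OpenAI.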

Q & A

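Who is the founder of Mintplex Labs?

-Timothy Carambat is the founder of Mintplex Labs.

Which application did Timothy Carambat create?

-Timothy Carambat created AnythingLLM.

Which operating systems does LM Studio support?

-LM Studio supports three operating systems. The video demonstrates the Windows version.

What are the benefits of using LM Studio and AnythingLLM Desktop?

-Together they make it easy to run a capable LLM application locally, completely free of charge.

What is AnythingLLM?

-AnythingLLM is an all-in-one desktop chat application. It is fully private, can connect to almost anything, and is fully open source.

How are models downloaded in LM Studio?

-Users browse the Explore page or search for a model (the models come from Hugging Face). They click it and download one of the available files, such as a Q4, Q5, or Q8 quantization. LM Studio indicates whether each file is compatible with the user's GPU or system. Downloading can take a while, depending on the model's size and the user's network speed.

What does GPU offloading do in LM Studio?

-GPU offloading lets LM Studio run as much of the model as possible on the GPU. This speeds up token generation and shortens response times.

How do you start using a model in LM Studio?

-Users select a model and start a local server to run it. This usually involves configuring the server port (usually 1234), enabling debugging tools such as logging and prompt formatting, and making sure GPU offloading is allowed.

How do you connect LM Studio's inference server to AnythingLLM?

-Copy LM Studio's local server URL (up to the /v1 part) and paste it into AnythingLLM's settings. Then enter the model's maximum token context window (4096 in the demo).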
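The token context window matters because the system prompt, any retrieved document chunks, the question, and the model's reply all have to fit inside it (4096 tokens in the demo). Below is a minimal sketch of greedily packing context under that budget. It assumes a crude estimate of about four characters per token instead of the model's real tokenizer. The reserved reply size and the function names are hypothetical choices, not AnythingLLM's actual logic.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude heuristic: ~4 characters per token (an assumption, not a real tokenizer)."""
    return max(1, len(text) // 4)


def pack_context(system_prompt: str, question: str, chunks: List[str],
                 context_window: int = 4096, reserve_for_reply: int = 512) -> List[str]:
    """Keep chunks, in ranked order, until the next one would overflow the window."""
    budget = context_window - reserve_for_reply
    budget -= estimate_tokens(system_prompt) + estimate_tokens(question)
    kept: List[str] = []
    for chunk in chunks:  # assumed to be sorted by relevance already
        cost = estimate_tokens(chunk)
        if cost > budget:
            break  # stop at the first chunk that does not fit
        kept.append(chunk)
        budget -= cost
    return kept
```

Stopping at the first chunk that does not fit keeps the most relevant material. A different design would skip that chunk and try smaller ones, trading relevance for fuller use of the window.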

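How can you improve the model's understanding in AnythingLLM?

-Users can add documents or scrape website content in AnythingLLM. That content is split into chunks and embedded into a vector database, not into the model itself. When a question is asked, the most relevant chunks are retrieved and passed to the model as context. This lets the model give more accurate, useful answers and cite its sources.

What is the purpose of using LM Studio and AnythingLLM?

-The goal is a fully private, end-to-end system for chatting and working with documents on your own machine, using the latest open-source models without paying extra.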

Outlines

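🚀 Introduction

Timothy Carambat introduces himself, Mintplex Labs (the company he founded), and its product AnythingLLM. He presents a simple way to run a capable, ChatGPT-like application on your own device. He notes that a GPU gives a better experience, but a CPU-only device also works. He introduces two one-click-install applications, LM Studio and AnythingLLM Desktop, and highlights that AnythingLLM is all-in-one, private, and open source.

📱 Using LM Studio with AnythingLLM Desktop

Timothy shows how to use LM Studio and AnythingLLM on a Windows machine. He walks through downloading and installing both programs and explains LM Studio's features, including how to download and use different models. He stresses the importance of GPU acceleration and shows how to set up and use a model in LM Studio. He then connects LM Studio to AnythingLLM and shows how adding context and documents improves the model's answers.

🌐 Integration and applications

In the final part, Timothy recaps how LM Studio and AnythingLLM Desktop fit together and how the combination gives users a capable toolkit for local LLM use. He stresses the advantages of open-source models and local AI tools, including not having to pay OpenAI every month. He also notes that choosing the right model is key to a good chat experience and encourages users to try popular models such as Llama 2 or Mistral. Finally, he invites feedback and points to the links in the video description.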


Keywords

💡Mintplex Labs

💡AnythingLLM

💡LM Studio

💡GPU

💡Model download

💡Privacy

💡Open source

💡Running locally

💡Chat client

💡Model compatibility

💡GPU offloading

💡GPU offloading

Highlights

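Introduces Timothy Carambat, founder of Mintplex Labs, and his product AnythingLLM.

Shows the simplest way to run a capable, GPT-like chat application locally.

Recommends two tools, LM Studio and AnythingLLM Desktop, both of which are one-click installs.

LM Studio supports three operating systems; this demo uses Windows.

AnythingLLM is a fully private chat application that can connect to almost anything, and it is fully open source.

Demonstrates using LM Studio with AnythingLLM on a Windows machine.

Installing and running LM Studio is quick and simple.

LM Studio has a simple built-in chat client for testing models.

Shows how to download and choose models in LM Studio and how to check a model's compatibility with your GPU.

Shows LM Studio's chat feature and its performance metrics.

Explains how to configure LM Studio's local server to serve a model.

Shows how to connect LM Studio's inference server to AnythingLLM.

Demonstrates improving the LLM's understanding and responses by adding documents and web pages.

Highlights the privacy of running open-source models with LM Studio and AnythingLLM Desktop, with no extra fees.

Encourages users to try LM Studio and AnythingLLM Desktop as the core of their local LLM stack.

Provides links so users can download and try LM Studio and AnythingLLM.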

Transcripts

Hey there, my name is Timothy Carambat, founder of Mintplex Labs and creator of AnythingLLM. Today I want to show you possibly the easiest way to get an extremely capable, locally running, fully RAG-capable, talk-to-anything-with-any-LLM application running on your laptop or desktop. If you have something with a GPU, this will be a much better experience, but if all you have is a CPU, it is still possible. We're going to use two tools, both of which are single-click installable applications: one is LM Studio and the other, of course, is AnythingLLM Desktop. Right now I'm on lmstudio.ai. They support three different operating systems, and we're going to use the Windows one today because that's the machine I have a GPU in. I'll show you how to set it up, how the chat normally works, and then how to connect it to AnythingLLM to really unlock a lot of its capabilities.

If you aren't familiar with AnythingLLM, it is an all-in-one, chat-with-anything desktop application. It's fully private, it can connect to pretty much anything, and you get a whole lot for free. AnythingLLM is also fully open source, so if you can program or have an integration you want to add, you can actually do it, and we're happy to accept contributions. Now we're going to switch over to my Windows machine, and I'm going to show you how to use LM Studio with AnythingLLM, walking through both products so that you get the most comprehensive LLM experience and pay nothing for it.

So here we are on my Windows desktop. The first thing we're going to want to do is click "LM Studio for Windows." This is version 0.2.16. Whatever version you're on, things may change a little, but in general this tutorial should be accurate.

Next, go to useanything.com, download AnythingLLM for Desktop, and select your operating system. Once you have these two programs installed, you are actually 50% done with the entire process; that's how quick this is. Let me get LM Studio installed and running and show you what that looks like. You've probably installed LM Studio by now: you click the icon on your desktop, and you usually get dropped on this screen. I don't work for LM Studio, so I'm just going to show you the capabilities that are relevant to this integration and to really unlocking any LLM you use. They land you on this Explore page.

This Explore page is great: it shows you some of the more popular models that exist, like Google's Gemma, which just dropped and is already live. That's really awesome. If you click down here at the bottom, you'll see I've already downloaded some models, because downloading the models will probably take the longest time in this entire operation. I went ahead and downloaded Mistral 7B Instruct; the Q4 means it's a 4-bit quantized model. I'm using a Q4 model, but honestly Q4 is about the lowest end you should go for. Q5 is really great, and Q8 if you want it. If you look up any model in LM Studio, for example Mistral, you'll see a whole bunch of models of different types. These all come from the Hugging Face repository and are published by a bunch of different people, and you can see how many times each one has been downloaded; this one is a very popular model. Once you click on it, you'll get some options, and LM Studio will tell you whether the model is compatible with your GPU or your system.
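As a rough sanity check on the Q4/Q5/Q8 trade-off, a quantized model's file size is approximately its parameter count times bits per weight, divided by 8. The sketch below assumes about 7.24 billion parameters for Mistral 7B and approximate effective bits-per-weight values for common GGUF quantization types. These values include per-block scale overhead, which is why Q8_0 is about 8.5 bits rather than 8. The VRAM overhead figure is a placeholder guess. Real files differ slightly, but under these assumptions the Q8 estimate lands near the 7.7 GB file mentioned in the video.

```python
# Rough GGUF file-size estimate: params * bits_per_weight / 8 bytes.
# The bits-per-weight figures are approximations, not official specifications.
APPROX_BITS_PER_WEIGHT = {
    "Q4_K_M": 4.85,
    "Q5_K_M": 5.69,
    "Q8_0": 8.5,  # 8-bit weights plus one 16-bit scale per block of 32 weights
}


def estimated_size_gb(n_params: float, quant: str) -> float:
    """Approximate model file size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * APPROX_BITS_PER_WEIGHT[quant] / 8 / 1e9


def fits_in_vram(size_gb: float, vram_gb: float, overhead_gb: float = 1.5) -> bool:
    """True if the weights plus an assumed fixed overhead (context cache,
    GPU runtime) fit in VRAM. The 1.5 GB default is a placeholder guess."""
    return size_gb + overhead_gb <= vram_gb


if __name__ == "__main__":
    MISTRAL_7B_PARAMS = 7.24e9  # assumption: approximate parameter count
    for quant in APPROX_BITS_PER_WEIGHT:
        size = estimated_size_gb(MISTRAL_7B_PARAMS, quant)
        print(f"{quant}: ~{size:.1f} GB, fits in 12 GB VRAM: {fits_in_vram(size, 12)}")
```

This is only an estimate. LM Studio's own compatibility indicator is the better guide for a specific machine.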

This is pretty accurate, though I've found that sometimes it doesn't quite work. One thing you'll be interested in is full GPU offloading, which is exactly what it sounds like: using the GPU as much as you can.
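Full offloading means every transformer layer sits in VRAM. When the model is too large, the layers are split between GPU and CPU. The back-of-the-envelope sketch below estimates how many of Mistral 7B's 32 layers would fit. It assumes weights are spread evenly across layers, ignoring embedding and output layers, and reserves a hypothetical fixed amount of VRAM for the context cache and runtime. This is not how LM Studio computes its offload setting; it only illustrates the trade-off.

```python
import math


def offloadable_layers(model_size_gb: float, n_layers: int,
                       vram_gb: float, reserve_gb: float = 2.0) -> int:
    """Estimate how many layers fit on the GPU.

    Assumes weights are spread evenly across layers and that `reserve_gb`
    (a hypothetical figure) stays free for the context cache and runtime.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(0.0, vram_gb - reserve_gb)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


if __name__ == "__main__":
    # A ~7.7 GB Q8 Mistral 7B file (32 layers) on cards with different VRAM sizes.
    for vram in (4, 8, 12):
        print(f"{vram} GB VRAM -> {offloadable_layers(7.7, 32, vram)}/32 layers on GPU")
```

Under these assumptions, a 12 GB card holds every layer, which matches the "full GPU offloading" case described here. Smaller cards would run the remaining layers on the CPU and generate tokens more slowly.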

You'll get much faster token generation, honestly on the level of ChatGPT, if you're working with a small enough model or have a big enough graphics card. I have 12 GB of VRAM available. You can see all these Q4 models; again, you probably want to stick with at least the Q5 models for the best balance of experience and size. As you can see, the Q8 is quite hefty at 7.7 GB, and even fast internet won't help much, because downloading from Hugging Face can take forever. If you want to get working on this today, you might want to start the download now. For the sake of this video, I've already downloaded a model.

Now that we have a model downloaded, we're going to want to chat with it. LM Studio actually comes with a built-in chat client. It's very simplistic, though, and really just for experimenting with models. Go to this chat bubble icon; you can see we have a thread already started. I pick the one model I have available, and you'll see the loading bar continue. There are some system prompts you can preset for the model. I have GPU offloading enabled and already set to max, and as you can see, NVIDIA CUDA is already in use. There are some tools and other settings you can experiment with, but in general that's all you need to do. So let's test the chat and just say, "Hello, how are you?" You get the pretty standard response from any AI model, and you even get some really cool metrics down here, like time to first token, which was 1.21 seconds, and the GPU layers in use. However, you can't get much more out of this. If you wanted to add a document, you'd have to copy and paste it into the user prompt. There's a lot more that can be done to leverage the power of this local LLM, even though it's quite a small one.

So, to really show how powerful these models can be for your own local use, we're going to use AnythingLLM. I've already downloaded AnythingLLM; let me show you how to get it running and how to get LM Studio to work with it. I just booted up AnythingLLM after installing it, and you'll usually land on a screen like this. Let's get started. We already know which provider we're looking for here: LM Studio. You'll see it asks for two pieces of information. The first is the token context window, a property of your model that you should already be familiar with. The second is the LM Studio base URL. If we open up LM Studio and go to the Local Server tab on the side, this is a really cool part of LM Studio. It doesn't support multiple models at once, so whichever model you select is the model you'll be using. Here we select the exact same model, but we start a server to run completions against it. To do that, we configure the server port. It's usually 1234, but you can change it to whatever you want.
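Before pointing AnythingLLM at the server, it can help to confirm that something is actually listening on the configured port. The minimal sketch below uses Python's standard `socket` module. The `localhost` host and port 1234 mirror the default mentioned above, and `is_port_open` is a hypothetical helper name. A successful TCP connection only shows that the port is open, not that LM Studio is serving a model there.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 1234, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    print("Server listening on localhost:1234:", is_port_open())
```

If you changed the port in LM Studio, pass the same value here and use it in the base URL you give AnythingLLM.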

You probably want to turn off CORS. Allow request queuing, so you can keep sending requests over and over and they don't just fail. You'll want to enable logging and prompt formatting; these are all just debugging tools. On the right side, you still want to make sure GPU offloading is allowed, if that's appropriate for your machine. Other than that, you just click Start Server, and you'll see some logs appear here. Now, to connect the LM Studio inference server to AnythingLLM, you just copy this string right here, up to the /v1 part.
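AnythingLLM only needs the base URL ending in `/v1` because LM Studio's local server exposes an OpenAI-compatible API under that path. The sketch below uses only the standard library to build a chat-completion request against that base URL without sending it. The `"local-model"` value, the system prompt, the temperature, and the helper names are illustrative assumptions. Per the walkthrough, the server answers with whichever model is selected in the Local Server tab.

```python
import json
import urllib.request


def base_url(host: str = "localhost", port: int = 1234) -> str:
    """OpenAI-style base URL: the part copied into AnythingLLM (up to /v1)."""
    return f"http://{host}:{port}/v1"


def build_chat_request(prompt: str, host: str = "localhost", port: int = 1234,
                       system_prompt: str = "You are a helpful assistant.",
                       stream: bool = False) -> urllib.request.Request:
    """Build (but do not send) a POST to the OpenAI-compatible chat endpoint."""
    payload = {
        "model": "local-model",  # placeholder name; the loaded model answers
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.7,
        "stream": stream,
    }
    return urllib.request.Request(
        url=f"{base_url(host, port)}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send a non-streaming request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `send(build_chat_request("What is AnythingLLM?"))` should return the loaded model's reply. Passing `stream=True` asks for an incremental server-sent-events response instead, which `send` does not parse.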

Then open AnythingLLM and paste it in here. I know my model's max token window is 4096, so I'll click Next. For the embedding preference, we don't even need to configure one; we can just use AnythingLLM's built-in embedder, which is free and private. The same goes for the vector database: all of this is going to run on machines that I own. Then, of course, we can skip the survey, and let's make our first workspace; we'll just call it "AnythingLLM." We don't have any documents yet, so if we send a chat asking the model about AnythingLLM, we'll either get a refusal or it will just make something up. So let's ask, "What is AnythingLLM?" If you switch to LM Studio at any point, you can see that we sent the request to the model and it is now streaming the response.
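The "first token" moment in LM Studio's logs corresponds to the time-to-first-token metric shown in its chat client. With `"stream": true`, OpenAI-compatible servers send server-sent events. Each event is a line of the form `data: {json}` whose `choices[0].delta.content` carries the next piece of text, and the stream ends with `data: [DONE]`. The sketch below consumes such lines, from a list here or from an HTTP response in practice, and records when the first content arrives. It assumes LM Studio follows this OpenAI streaming format, and it measures time from when consumption starts rather than from when the request was sent.

```python
import json
import time
from typing import Iterable, List, Optional, Tuple


def consume_stream(lines: Iterable[str]) -> Tuple[str, Optional[float]]:
    """Join streamed text and measure seconds until the first content chunk.

    `lines` are decoded SSE lines such as 'data: {...}' and 'data: [DONE]'.
    Returns (full_text, time_to_first_token); the latter is None if no text arrived.
    """
    start = time.perf_counter()
    first_token_at: Optional[float] = None
    parts: List[str] = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        delta = json.loads(data)["choices"][0].get("delta", {})
        text = delta.get("content")
        if text:
            if first_token_at is None:
                first_token_at = time.perf_counter() - start
            parts.append(text)  # a chat UI would render each piece as it arrives
    return "".join(parts), first_token_at


if __name__ == "__main__":
    fake_stream = [
        'data: {"choices": [{"delta": {"role": "assistant"}}]}',
        "",
        'data: {"choices": [{"delta": {"content": "Hello"}}]}',
        'data: {"choices": [{"delta": {"content": "!"}}]}',
        "data: [DONE]",
    ]
    print(consume_stream(fake_stream))
```

To use it against a real server, you would open a streaming request with `urllib.request.urlopen` and pass `(b.decode("utf-8") for b in resp)`, since iterating the response yields raw byte lines.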

The first token has been generated, and the response continues to stream. When AnythingLLM receives that first token, that's when we start showing it on our side. You can see we get a response; it pops up almost instantly, which was very quick, but it is totally wrong. It's wrong because we haven't given the model any context about what AnythingLLM actually is. We can augment the LLM's knowledge with our private documents by clicking here and adding them, or I can just scrape a website. I'm going to scrape the useanything.com homepage, because that should give us enough information. You'll see that we've scraped the page, so now it's time to embed it. We'll run that embedding, and now our LLM should be smarter. Let's ask the same question again, but this time the model has information that could be useful.

Now we get a response that says AnythingLLM is an AI business intelligence tool to form humanlike text messages based on prompts, and that it offers LLM support as well as a variety of enterprise models. This is definitely much more accurate, and we also tell you where this information came from: you can see that it cited the useanything.com website, and these are the actual chunks that were used to formulate this response. So now we have a very coherent system. We can embed and modify documents, create different threads, and do a whole bunch of other things within AnythingLLM, but the core piece of infrastructure, the LLM itself, is running in LM Studio on a machine we own. Now we have a fully private, end-to-end system for chatting with documents, using the latest and greatest open-source models available on Hugging Face.
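The scrape, embed, and ask flow above is retrieval-augmented generation (RAG). The page is split into chunks, each chunk is embedded and stored in a vector database, and at question time the most similar chunks are pasted into the prompt and shown as citations. The toy sketch below shows only the mechanics. It swaps in bag-of-words vectors with cosine similarity for a real embedding model and keeps the "vector database" in a Python list. The chunk size, overlap, sources, and sample text are hypothetical, not AnythingLLM's actual settings or data.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

Chunk = Tuple[str, str]         # (source, chunk text)
Row = Tuple[str, str, Counter]  # (source, chunk text, vector)


def chunk_text(text: str, size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    starts = range(0, max(len(words) - overlap, 1), size - overlap)
    return [" ".join(words[i:i + size]) for i in starts]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs: List[Chunk]) -> List[Row]:
    """'Embed' every chunk of every (source, text) document into an in-memory list."""
    return [(src, c, embed(c)) for src, text in docs for c in chunk_text(text)]


def retrieve(index: List[Row], question: str, k: int = 2) -> List[Chunk]:
    """Return the k chunks most similar to the question, with their sources."""
    q = embed(question)
    ranked = sorted(index, key=lambda row: cosine(q, row[2]), reverse=True)
    return [(src, chunk) for src, chunk, _ in ranked[:k]]


def build_prompt(question: str, hits: List[Chunk]) -> str:
    """Paste retrieved chunks into the prompt, numbered so the answer can cite them."""
    context = "\n".join(f"[{i}] ({src}) {chunk}" for i, (src, chunk) in enumerate(hits, 1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = [  # hypothetical sources and text
        ("https://example.com/about", "AnythingLLM is a private, open source desktop app for chatting with documents."),
        ("https://example.com/bread", "Bake the bread at a high temperature until the crust is golden."),
    ]
    question = "What is AnythingLLM?"
    print(build_prompt(question, retrieve(build_index(docs), question, k=1)))
```

The overlap between chunks is a common design choice. It keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.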

Hopefully this tutorial on integrating LM Studio and AnythingLLM Desktop was helpful and unlocks a whole lot of potential for your local LLM usage. Tools like LM Studio, Ollama, and LocalAI mean that running a local LLM is no longer a very technical task. Pair a tool that provides an interface, like LM Studio, with a more powerful tool built exclusively for chatting, like AnythingLLM on your desktop, and you can have this entire experience without paying OpenAI 20 bucks a month. Again, I want to reiterate that the model you use will ultimately determine your chat experience. There are more capable models, and there are more niche models for programming, so be careful and know the model you're choosing. Or just pick one of the more popular ones, like Llama 2 or Mistral, and you'll honestly be fine. Hopefully LM Studio plus AnythingLLM Desktop becomes a core part of your local LLM stack; we're happy to be a part of it and to hear your feedback. We'll put the links in the description. Have fun!

5.0 / 5 (0 votes)

6款工具帮你自动赚钱,轻松上手帮你打开全新的收入渠道,赚钱效率高出100倍,用好这几款AI人工智能工具,你会发现赚钱从来没如此简单过

FREE writing software | Longform and shortform

Upgrade Your REPORT DESIGN in Power BI | Complete Walkthrough From A to Z

Best AI Music Generator in 2024 - SUNO vs UDIO

Beginner's Guide to ControlNets in ComfyUI

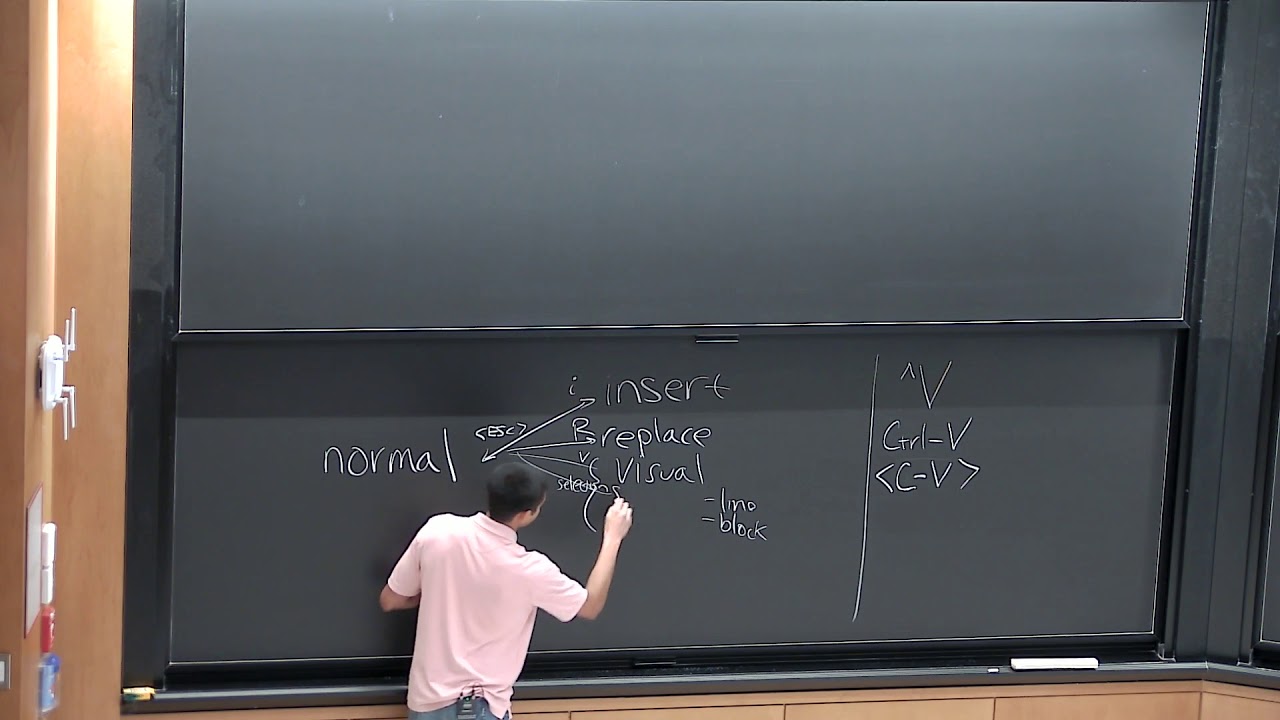

Lecture 3: Editors (vim) (2020)