What is Retrieval-Augmented Generation (RAG)?

Summary

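TLDR: In this video, Marina Danilevsky, a senior research scientist at IBM, introduces a framework called Retrieval-Augmented Generation (RAG). RAG is designed to help large language models (LLMs) give more accurate and more up-to-date information. With retrieval augmentation, an LLM can query a content store for the latest information relevant to the user's question. This addresses two problems: outdated information and answers with no supporting source. The approach improves the accuracy of the model's answers and reduces misinformation. It also lets the model honestly say "I don't know" when it cannot give a reliable answer.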

Takeaways

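- 🌐 Large language models (LLMs) can make mistakes when answering user queries.

- 🔍 Challenges for LLMs include lacking up-to-date information and not citing reliable sources.

- 🤖 The Retrieval-Augmented Generation (RAG) framework aims to make LLMs more accurate and more up to date.

- 📚 RAG enhances LLM answers by adding a content store.

- 🌟 RAG lets an LLM retrieve relevant information before it generates an answer.

- 🔄 In the RAG framework, the prompt now has three parts: the instruction, the retrieved content, and the user's question.

- 💡 RAG helps with LLMs being out of date: you update the data store instead of retraining the model.

- 🔗 RAG has the LLM pay attention to the primary data source before answering, which reduces the risk of misleading users.

- 🚫 If the retriever fails to supply high-quality information, the LLM may fail to answer questions that are actually answerable.

- 🌟 Organizations such as IBM are working to improve both the retriever and the generator so that LLMs give better answers.

- 📈 The RAG framework encourages LLMs to say "I don't know" when they cannot answer reliably, rather than mislead the user.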

Q & A

What is a large language model (LLM)?

- A large language model (LLM) is an AI model that generates text in response to a user's input, called a prompt.

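What challenges can large language models face when answering questions?

- Two challenges are giving answers without a source and relying on out-of-date information. Both can make the model's answers inaccurate or stale.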

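What is the RAG that Marina Danilevsky mentions?

- RAG stands for Retrieval-Augmented Generation. It is a framework that helps large language models answer more accurately and with more current information by first retrieving relevant content.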

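How does the RAG framework help large language models with out-of-date information?

- RAG supplements the LLM with a content store, which can be open (like the internet) or closed (like a specific collection of documents). When new information appears, you only update the data store instead of retraining the model, so the model can retrieve the latest information.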
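To make "update the data store, not the model" concrete, here is a minimal Python sketch. It assumes a toy in-memory store; the `ContentStore` class, its `upsert`/`retrieve` methods, and the document ids are hypothetical, not from the video. Documents are ranked by bag-of-words cosine similarity, a deliberately simple stand-in for the embedding-based retrievers that real systems typically use. Replacing a stored document changes what gets retrieved without touching any model weights.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercased bag-of-words term counts (a toy stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors; 0.0 if either is empty."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ContentStore:
    """Hypothetical in-memory content store mapping document id -> text."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Adding or overwriting a document is the whole "update" step; no model is retrained.
        self.docs[doc_id] = text

    def retrieve(self, query: str, k: int = 1) -> list[tuple[str, float]]:
        """Return up to k (doc_id, score) pairs, most similar to the query first."""
        q = tokenize(query)
        scored = [(doc_id, cosine(q, tokenize(text))) for doc_id, text in self.docs.items()]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


store = ContentStore()
store.upsert("nasa-moons", "Jupiter has the most moons in the solar system, with 88 moons.")
store.upsert("hr-policy", "Employees accrue vacation days each month.")
question = "Which planet has the most moons?"

top_id, _ = store.retrieve(question)[0]
print(store.docs[top_id])  # the Jupiter sentence

# New moons are discovered: update the store, not the model.
store.upsert("nasa-moons", "Saturn has the most moons in the solar system, with 146 moons.")
top_id, _ = store.retrieve(question)[0]
print(store.docs[top_id])  # the Saturn sentence
```

The moon counts are the ones quoted in the video. In a full RAG system, the generator would then read whichever passage the store returns.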

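How does the RAG framework help ensure that a large language model's answers are well-founded?

- In RAG, the LLM first retrieves relevant content and combines it with the user's question before generating an answer. This lets the model give evidence for its answer and makes it less likely to invent one.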
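The "give evidence" part can be checked mechanically, at least structurally. This sketch assumes a hypothetical convention in which the model cites retrieved passages as `[1]`, `[2]`, and so on. It only verifies that an answer cites something and that every citation points at a passage that was actually retrieved. It does not verify that the cited passage supports the claim, which needs a separate check.

```python
from __future__ import annotations

import re


def cited_ids(answer: str) -> set[int]:
    """Extract bracketed source numbers such as [1] or [2] from a generated answer."""
    return {int(n) for n in re.findall(r"\[(\d+)\]", answer)}


def cites_retrieved_sources(answer: str, num_passages: int) -> bool:
    """True if the answer cites at least one source and every citation is a retrieved passage.

    Passages are assumed to be numbered 1..num_passages in the prompt.
    """
    ids = cited_ids(answer)
    return bool(ids) and all(1 <= i <= num_passages for i in ids)


# Suppose two passages were retrieved for the question.
print(cites_retrieved_sources("Saturn has the most moons, with 146 [1].", num_passages=2))  # True
print(cites_retrieved_sources("Jupiter has the most moons.", num_passages=2))  # False: no citation
print(cites_retrieved_sources("Saturn has the most moons [3].", num_passages=2))  # False: [3] was never retrieved
```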

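How does the RAG framework help large language models avoid leaking data?

- RAG has the LLM pay attention to primary source data before responding. The model is then less likely to rely only on information it learned during training, which lowers the risk of leaking data.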

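How does the RAG framework teach large language models to say "I don't know" when they are unsure?

- With retrieval augmentation, if the data store contains no reliable answer to the user's question, the LLM should say "I don't know" instead of making up something believable that may mislead the user.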
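One simple way to get the "I don't know" behavior is to abstain whenever no retrieved passage clears a relevance threshold, and to call the generator only otherwise. In this sketch, the `generate` callable stands in for an LLM call. The 0.3 cut-off and the example scores are made-up values for illustration; a real threshold would have to be tuned for the specific retriever.

```python
from __future__ import annotations

from typing import Callable

IDK = "I don't know."


def respond(
    question: str,
    hits: list[tuple[str, float]],
    generate: Callable[[str, list[str]], str],
    min_score: float = 0.3,  # hypothetical threshold; tune per retriever
) -> str:
    """Answer only from passages whose retrieval score clears min_score; otherwise abstain."""
    grounding = [text for text, score in hits if score >= min_score]
    if not grounding:
        # Nothing in the data store reliably supports an answer, so say so instead of guessing.
        return IDK
    return generate(question, grounding)


def first_passage(question: str, passages: list[str]) -> str:
    """Stub generator for the example: returns the first supporting passage verbatim."""
    return passages[0]


hits = [("Saturn has the most moons, with 146 moons.", 0.82), ("Employees accrue vacation monthly.", 0.05)]
print(respond("Which planet has the most moons?", hits, first_passage))  # Saturn has the most moons, with 146 moons.
print(respond("Who won the 1930 World Cup?", [("Saturn has 146 moons.", 0.04)], first_passage))  # I don't know.
```

Raising `min_score` makes the system refuse more often. That is the trade-off the next question raises: if relevant passages score too low, an answerable question gets "I don't know."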

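How does low-quality retrieval affect a large language model's answers?

- If the retriever does not supply high-quality, accurate information, even user questions that are answerable may go unanswered, which lowers the quality of the LLM's responses.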
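A common way to measure whether a retriever is "good enough" is recall@k on a small set of test questions whose relevant documents are known. Recall@k is the fraction of relevant documents that appear in the top k results. This standard information-retrieval metric is not something the video prescribes, and the document ids below are hypothetical. A question whose relevant document never reaches the top k gets no grounding, which is how an answerable question ends up unanswered.

```python
from __future__ import annotations


def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant document ids that appear among the top-k retrieved ids."""
    if not relevant:
        raise ValueError("recall is undefined when no document is relevant")
    return len(set(ranked[:k]) & relevant) / len(relevant)


def mean_recall_at_k(runs: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average recall@k over (ranked ids, relevant ids) pairs, one pair per test question."""
    return sum(recall_at_k(ranked, relevant, k) for ranked, relevant in runs) / len(runs)


runs = [
    (["nasa-moons", "old-article"], {"nasa-moons"}),      # relevant document retrieved
    (["old-article", "vacation-policy"], {"nasa-moons"}),  # relevant document missed entirely
]
print(mean_recall_at_k(runs, k=2))  # 0.5
```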

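How are IBM researchers improving the retriever and the generator in the RAG framework?

- IBM researchers are working to improve the retriever so that it supplies higher-quality, more accurate data to ground the LLM's answers. They are also improving the generator so that the LLM ultimately gives the user the richest, best answer.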

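How does a user interact with a large language model in the RAG framework?

- The user first asks the LLM a question. The LLM then retrieves relevant content and combines it with the user's question to generate the answer.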
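The flow above (question in, retrieve, combine, generate) comes down to assembling a prompt with the three parts named in the video: an instruction, the retrieved content, and the user's question. The sketch shows one possible layout. The instruction wording, the numbered `[1]` source format, and the `Sources:`/`Answer:` labels are assumptions, not a format from the video or from any particular library. The resulting string would then be sent to whichever LLM is in use.

```python
from __future__ import annotations

# Hypothetical instruction text; real systems tune this wording.
INSTRUCTION = (
    "Answer the question using only the numbered sources below. "
    "Cite sources like [1]. If the sources do not contain the answer, reply \"I don't know.\""
)


def build_rag_prompt(question: str, passages: list[str], instruction: str = INSTRUCTION) -> str:
    """Assemble the three parts: the instruction, the retrieved content, and the user's question."""
    # Number each retrieved passage so the model can cite it as evidence.
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return f"{instruction}\n\nSources:\n{sources}\n\nQuestion: {question}\nAnswer:"


print(build_rag_prompt(
    "In our solar system, what planet has the most moons?",
    ["Saturn has the most moons in the solar system, with 146 moons."],
))
```

Numbering the sources is a design choice: it gives the model a simple way to point at its evidence, and it makes the citations easy to check afterwards.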

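What does the RAG framework mean for the development of large language models?

- It means LLMs can give more accurate and more timely information while reducing the spread of misinformation and the risk of data leakage. This makes models more reliable and increases user trust.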

Outlines

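🤖 Challenges of large language models and the RAG framework

This section introduces challenges that large language models (LLMs) face when answering questions, such as the accuracy and timeliness of their information. Marina Danilevsky, a senior research scientist at IBM Research, uses the question of which planet in our solar system has the most moons to show how an LLM can confidently give a wrong answer. She then explains how the RAG (Retrieval-Augmented Generation) framework helps LLMs give more accurate and more current answers by retrieving relevant information first. By combining the user's question with the retrieved content, RAG produces better-grounded answers and addresses two major LLM challenges: out-of-date information and a lack of supporting sources.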

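🔍 Improving LLM accuracy and data-source quality

This section further discusses how RAG improves LLM accuracy. When an LLM is instructed to pay attention to primary source data before answering, it produces less misinformation and can provide evidence for its answers. The same approach lets the LLM honestly say "I don't know" when it cannot reliably answer a user's question, instead of making up an answer that may mislead the user. The section also stresses the importance of improving the retriever, so that the LLM receives the highest-quality grounding data and can generate the richest, most accurate answers. Finally, Marina Danilevsky thanks viewers for learning about RAG and invites them to like and subscribe to the channel.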

Keywords

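💡Large language model

💡Retrieval-Augmented Generation

💡Outdated information

💡Lack of reliable sources

💡Content store

💡Text generation

💡User query

💡Data updates

💡Evidence

💡Information retrieval

💡Answer generation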

Highlights

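Large language models (LLMs) can give inaccurate and out-of-date answers to user queries.

Marina Danilevsky introduces a framework for making LLMs more accurate and up to date: Retrieval-Augmented Generation (RAG).

When generating text, LLMs can show undesirable behaviors, such as giving answers without a supporting source and being out of date.

With retrieval augmentation, an LLM can query a content store before answering to get the latest information relevant to the user's query.

The RAG framework has the LLM retrieve relevant content before generating an answer, which improves accuracy.

RAG lets the LLM provide evidence for its answer, reducing the likelihood of misinformation.

The RAG framework adapts to new information by updating the data store, without retraining the model.

LLMs are now instructed to pay attention to primary source data before responding, which reduces the risk of hallucination or data leakage.

The RAG framework encourages the model to say "I don't know" when it cannot reliably answer the user's question, instead of making up an answer that may mislead the user.

The retriever's quality is critical for giving LLMs high-quality grounding information, and organizations such as IBM are working to improve retrievers.

The RAG framework aims to improve the quality of LLM-generated answers and, with it, the user experience.

RAG was introduced to address LLM challenges around keeping information current and citing accurate sources.

With RAG, LLMs can more accurately reflect the latest scientific findings, such as the changing count of moons in our solar system.

The RAG framework generates answers by combining retrieved content with the user's question, making answers more relevant and credible.

Applying RAG helps reduce confidently wrong answers from LLMs.

IBM researchers are also working to improve the generation side of LLMs so that users get richer answers.

The RAG framework is an important step in LLM development, highlighting the value of combining information retrieval with generation.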

Transcripts

Large language models. They are everywhere.

They get some things amazingly right

and other things very interestingly wrong.

My name is Marina Danilevsky.

I am a Senior Research Scientist here at IBM Research.

And I want to tell you about a framework to help large language models

be more accurate and more up to date:

Retrieval-Augmented Generation, or RAG.

Let's just talk about the "Generation" part for a minute.

So forget the "Retrieval-Augmented".

So the generation, this refers to large language models, or LLMs,

that generate text in response to a user query, referred to as a prompt.

These models can have some undesirable behavior.

I want to tell you an anecdote to illustrate this.

So my kids, they recently asked me this question:

"In our solar system, what planet has the most moons?"

And my response was, “Oh, that's really great that you're asking this question. I loved space when I was your age.”

Of course, that was like 30 years ago.

But I know this! I read an article

and the article said that it was Jupiter, with 88 moons. So that's the answer.

Now, actually, there's a couple of things wrong with my answer.

First of all, I have no source to support what I'm saying.

So even though I confidently said “I read an article, I know the answer!”, I'm not sourcing it.

I'm giving the answer off the top of my head.

And also, I actually haven't kept up with this for a while, and my answer is out of date.

So we have two problems here. One is no source. And the second problem is that I am out of date.

And these, in fact, are two behaviors that are often observed as problematic

when interacting with large language models. They’re LLM challenges.

Now, what would have happened if I'd taken a beat and first gone

and looked up the answer on a reputable source like NASA?

Well, then I would have been able to say, “Ah, okay! So the answer is Saturn with 146 moons.”

And in fact, this keeps changing because scientists keep on discovering more and more moons.

So I have now grounded my answer in something more believable.

I have not hallucinated or made up an answer.

Oh, by the way, I didn't leak personal information about how long ago it's been since I was obsessed with space.

All right, so what does this have to do with large language models?

Well, how would a large language model have answered this question?

So let's say that I have a user asking this question about moons.

A large language model would confidently say,

OK, I have been trained and from what I know in my parameters during my training, the answer is Jupiter.

The answer is wrong. But, you know, we don't know.

The large language model is very confident in what it answered.

Now, what happens when you add this retrieval augmented part here?

What does that mean?

That means that now, instead of just relying on what the LLM knows,

we are adding a content store.

This could be open like the internet.

This can be closed like some collection of documents, collection of policies, whatever.

The point, though, now is that the LLM first goes and talks

to the content store and says, “Hey, can you retrieve for me

information that is relevant to what the user's query was?”

And now, with this retrieval-augmented answer, it's not Jupiter anymore.

We know that it is Saturn. What does this look like?

Well, first, the user prompts the LLM with their question.

They say, this is what my question was.

And originally, if we're just talking to a generative model,

the generative model says, “Oh, okay, I know the response. Here it is. Here's my response.”

But now in the RAG framework,

the generative model actually has an instruction that says, "No, no, no."

"First, go and retrieve relevant content."

"Combine that with the user's question and only then generate the answer."

So the prompt now has three parts:

the instruction to pay attention to, the retrieved content, together with the user's question.

Now give a response. And in fact, now you can give evidence for why your response was what it was.

So now hopefully you can see, how does RAG help the two LLM challenges that I had mentioned before?

So first of all, I'll start with the out of date part.

Now, instead of having to retrain your model, if new information comes up, like,

hey, we found some more moons, now it's Jupiter again, maybe it'll be Saturn again in the future.

All you have to do is augment your data store with new, updated information.

So now the next time that a user comes and asks the question, we're ready.

We just go ahead and retrieve the most up to date information.

The second problem, source.

Well, the large language model is now being instructed to pay attention

to primary source data before giving its response.

And in fact, now being able to give evidence.

This makes it less likely to hallucinate or to leak data

because it is less likely to rely only on information that it learned during training.

It also allows us to get the model to have a behavior that can be very positive,

which is knowing when to say, “I don't know.”

If the user's question cannot be reliably answered based on your data store,

the model should say, "I don't know," instead of making up something that is believable and may mislead the user.

This can have a negative effect as well though, because if the retriever is not sufficiently good

to give the large language model the best, most high-quality grounding information,

then maybe the user's query that is answerable doesn't get an answer.

So this is actually why lots of folks, including many of us here at IBM,

are working the problem on both sides.

We are both working to improve the retriever

to give the large language model the best quality data on which to ground its response,

and also the generative part so that the LLM can give the richest, best response finally to the user

when it generates the answer.

Thank you for learning more about RAG, and like and subscribe to the channel.

Thank you.

5.0 / 5 (0 votes)

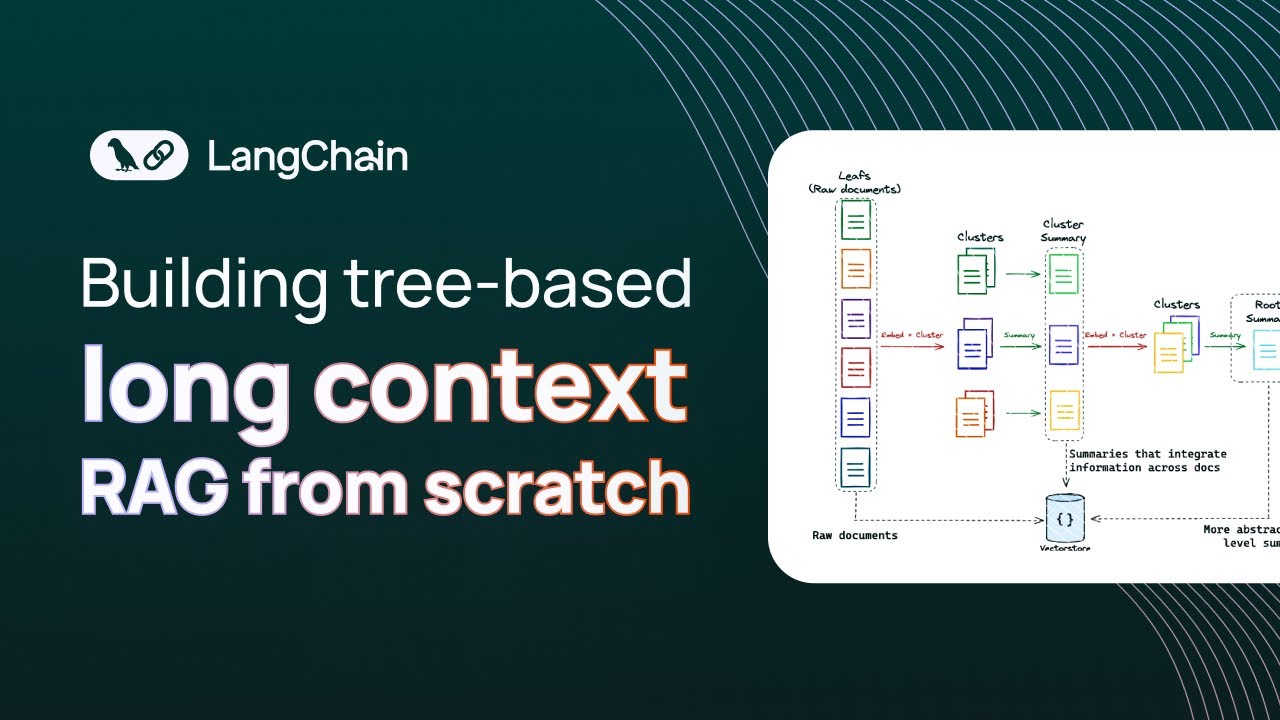

Building long context RAG with RAPTOR from scratch

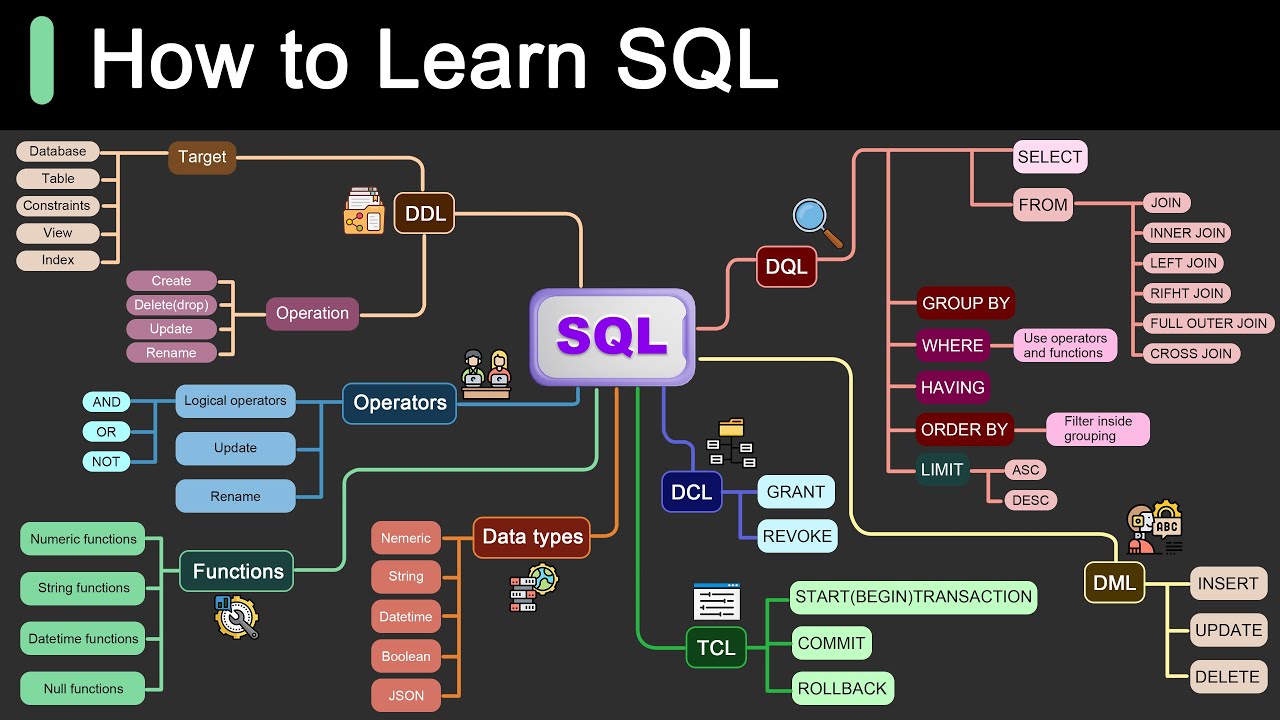

Roadmap for Learning SQL

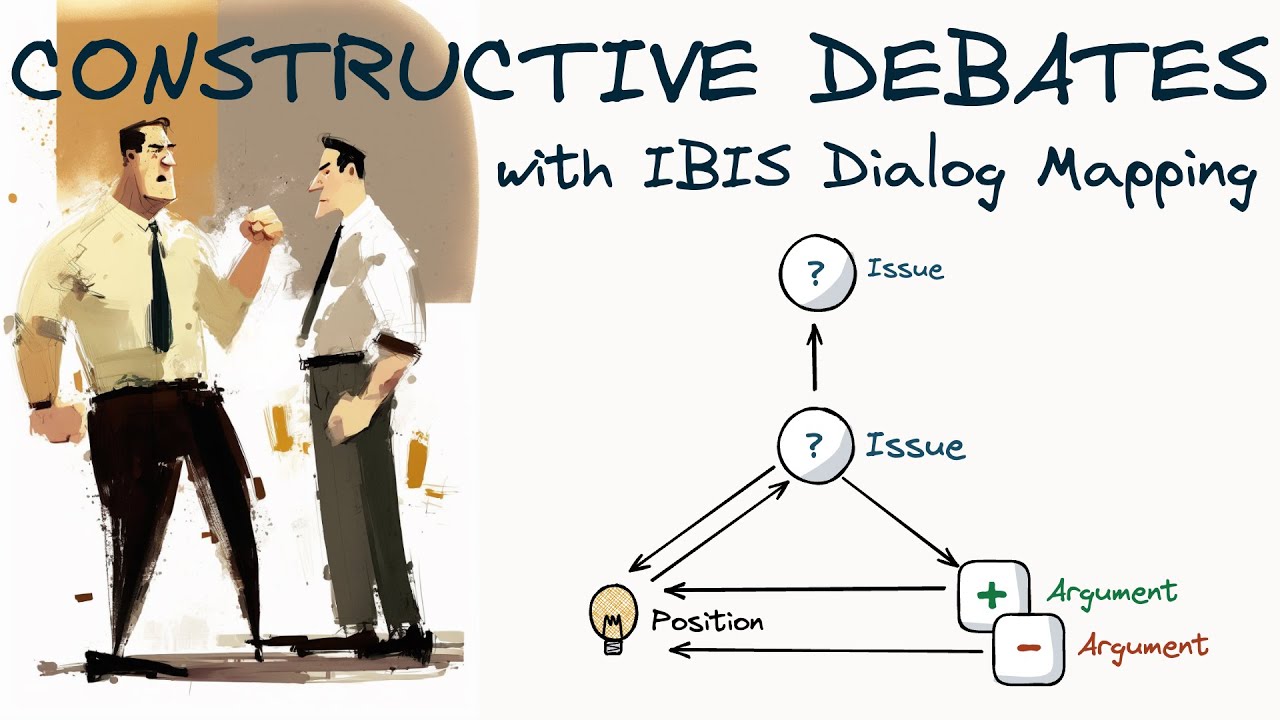

Develop the Superpower of Constructive Debating with Dialog Mapping - includes ChatGPT case study

Microsoft's New PHI-3 AI Turns Your iPhone Into an AI Superpower! (Game Changer!)

【機器學習2021】自注意力機制 (Self-attention) (上)

【结构化思考】因为平庸,我才努力点满这项奇技。