Never install locally

Summary

TLDR: This video script introduces containers as a game-changing technology for developers, offering a fast, portable, and isolated environment for application deployment. It contrasts containers with traditional virtual machines (VMs), highlighting the efficiency and resource-light nature of containers. The script explains how containers leverage the host's kernel and file system layers, allowing for a consistent development and deployment experience across different environments. It also touches on the use of Docker as a popular container platform, the concept of container images and layers, and the benefits of container registries for image management. The video concludes with a brief mention of container orchestration tools like Kubernetes for advanced deployment management.

Takeaways

- 📦 Containers are pre-configured environments that include your code, libraries, and OS, ready to run upon deployment.

- ⚡ Containers offer fast startup times and are less resource-intensive compared to virtual machines (VMs).

- 🔄 Containers share the host's kernel, which makes them lighter than VMs, each of which runs a full guest OS on hardware virtualized by a hypervisor.

- 🌉 The kernel is the core of an OS, managing tasks like CPU, memory, device I/O, file systems, and process management.

- 🌍 Containers allow developers to work in multiple environments simultaneously without conflicts on their local machine.

- 🔒 The 'it works on my machine' problem is mitigated by containers, ensuring consistent environments across different machines.

- 🛠️ Docker is a popular container platform used for creating and running containers, with a well-supported ecosystem.

- 📋 Container images are built from layered file systems, allowing for efficient updates and customization.

- 🔄 Dockerfiles contain commands that Docker executes sequentially to create new layers in the container image.

- 🔄 Each container instance has its own file system layer, allowing for runtime changes without affecting other containers.

- 🔖 Containers can be tagged and published to a container registry for easy referencing and deployment.

Q & A

What is the main advantage of using containers over traditional virtual machines?

- Containers are faster to spin up and typically less resource-intensive than virtual machines. They provide isolated environments that are more lightweight and efficient.

How does a container differ from a virtual machine in terms of resource usage and performance?

- Containers share the host's kernel and emulate only a minimal file system, which makes them lighter and faster to start than virtual machines. A VM, by contrast, is tricked by the host's hypervisor layer into thinking it is running on real hardware.

What is the core component of an operating system that containers interact with?

- The kernel is the core component of an operating system. It acts as a bridge between software requests and hardware operations, handling tasks like CPU and memory management, device I/O, file systems, and process management.

How do containers help developers manage different environments for their applications?

- Containers allow developers to work in multiple environments simultaneously without compromising their local machine. They can maintain old apps on their original OS and dependencies while also using the latest technologies for new projects without conflicts.

What is the 'it works on my machine' problem, and how do containers address it?

- The 'it works on my machine' problem refers to inconsistencies in development environments leading to issues when deploying applications. Containers ensure a consistent environment across different machines, eliminating this problem by providing the same OS and dependencies wherever they run.

What is Docker and how does it facilitate container creation and management?

- Docker is a container platform that provides the necessary tools to create, manage, and run containers. It uses container images, which are composed of overlapping layers, allowing for efficient and flexible containerization of applications.

How do container images work in terms of file system layers?

- Container images are formed with overlapping layers. Each layer records file changes as differences to the previous layer, and the layers are composed together to produce the final file system state. This makes it efficient to extend a custom image from any previous image or layer, including pre-made and officially supported base images.
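
The layering idea can be sketched in a few lines of Python. This is a toy model only, not Docker's real storage format: the `Layer` type, the `compose` function, the file paths, and the use of `None` to mark a deletion are all assumptions made for illustration.

```python
from typing import Optional

# A layer maps a file path to its content; None marks a file deleted in that layer.
Layer = dict[str, Optional[str]]


def compose(layers: list[Layer]) -> dict[str, str]:
    """Apply layers from bottom to top and return the final file system view."""
    view: dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)  # file removed by this layer
            else:
                view[path] = content  # file added or overwritten by this layer
    return view


# Hypothetical layers: a base OS, some dependencies, then the application itself.
base: Layer = {"/etc/os-release": "debian", "/bin/sh": "shell"}
deps: Layer = {"/usr/lib/libfoo.so": "v1"}
app: Layer = {"/app/main.py": "print('hi')", "/bin/sh": None}

final_view = compose([base, deps, app])
```

Because `base` and `deps` are never modified, any number of other images could reuse them as their own lower layers.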

What is a Dockerfile and what role does it play in creating a container image?

- A Dockerfile is a text file containing the commands Docker should execute to build an image. Docker runs them in sequence, and each change they generate is added to the final image as a new file system layer or a metadata layer.
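
As a rough illustration, the Python sketch below holds a small Dockerfile as a string and labels each instruction by the kind of change it contributes. The Dockerfile contents (base image tag, file names) are hypothetical, and the classification is a simplification: it assumes that `RUN`, `COPY`, and `ADD` produce file system layers and treats every other instruction as metadata.

```python
# Hypothetical Dockerfile for a small Python application.
DOCKERFILE = """\
FROM python:3.12-slim
COPY requirements.txt /app/requirements.txt
RUN pip install -r /app/requirements.txt
COPY . /app
CMD ["python", "/app/main.py"]
"""

# Simplifying assumption: only these instructions add file system layers.
FS_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def classify(dockerfile: str) -> list[tuple[str, str]]:
    """Return (instruction, kind of change) for each instruction, in order."""
    result = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        instruction = line.split(maxsplit=1)[0].upper()
        if instruction == "FROM":
            kind = "base image"  # reuses the layers of an existing image
        elif instruction in FS_INSTRUCTIONS:
            kind = "file system layer"
        else:
            kind = "metadata"
        result.append((instruction, kind))
    return result


steps = classify(DOCKERFILE)
```

Real Dockerfiles also allow line continuations and build arguments, which this sketch does not handle.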

How do containers handle runtime changes and data persistence?

- When a container is created, the image's file system is extended with a new file system layer dedicated to that container. Runtime changes are made in this layer, so they do not affect other containers using the same image. The layer persists until the container is deleted, so a container can be stopped and started again without losing data.
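
One way to picture the per-container layer is with Python's `collections.ChainMap`, which sends writes to its first mapping and falls back to later mappings for reads. This is an analogy under stated assumptions, not how a container runtime is implemented; `image_fs`, `create_container`, and the file paths are hypothetical names.

```python
from collections import ChainMap
from types import MappingProxyType

# The image's file system, shared by every container and treated as read-only.
image_fs = MappingProxyType({"/app/config": "default", "/app/main.py": "code"})


def create_container(image):
    """Stack a fresh, empty writable layer on top of the shared image."""
    return ChainMap({}, image)


container_a = create_container(image_fs)
container_b = create_container(image_fs)

# Runtime changes land in container_a's own layer only.
container_a["/app/config"] = "changed at runtime"
container_a["/tmp/cache"] = "scratch data"

# container_b and the image still see the original content.
config_seen_by_b = container_b["/app/config"]
```

Discarding `container_a` discards its top mapping, which mirrors how the dedicated layer disappears when the container is deleted.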

What is a container registry and how does it relate to deploying containers?

- A container registry is an online storage warehouse for container images. Developers tag an image with something unique, such as a version, and publish it to the registry, from which it can be pulled for deployment on other platforms or machines.
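
To show how a tag and a registry fit into an image name, here is a simplified Python sketch that splits a reference into registry, repository, and tag. It assumes the commonly described Docker defaults (`docker.io` as registry, a `library/` prefix for single-name official images, and `latest` as tag) and ignores digests and validation, so it is not the real parser. The function name and example references are hypothetical.

```python
def parse_reference(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag)."""
    first, sep, rest = ref.partition("/")
    # The first component is treated as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # A tag is whatever follows a colon in the last path component.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, "latest"

    # Single-name images on the default registry live under "library/".
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


default_registry_ref = parse_reference("myuser/myapp:1.2.0")
custom_registry_ref = parse_reference("registry.example.com:5000/team/app:v2")
```

Prefixing the name with a registry host is what overrides the default registry in this model.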

How can container orchestration platforms like Kubernetes enhance deployment and management of containerized applications?

- Container orchestration platforms like Kubernetes allow for the declarative description of the desired state of a deployment. They automate the details of reaching that state, handling tasks like scaling, load balancing, and self-healing, creating a more efficient and robust container-based cloud environment.
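
The declarative idea can be sketched as a comparison between desired and actual state. The Python below is a toy illustration, not Kubernetes code or its API: `reconcile`, the service names, and the action strings are all hypothetical.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Return the actions needed to move from the actual state to the desired one.

    Both arguments map a service name to a number of running containers.
    """
    actions = []
    for name in sorted(set(desired) | set(actual)):
        want = desired.get(name, 0)
        have = actual.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} x {name}")
        elif have > want:
            actions.append(f"stop {have - want} x {name}")
    return actions


# The user only describes the end state; the steps to reach it are derived.
desired_state = {"web": 3, "worker": 1}
actual_state = {"web": 1, "cache": 2}
plan = reconcile(desired_state, actual_state)
```

An orchestrator would run a comparison like this continuously, so that a crashed container shows up as a gap between the two states and gets replaced.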

Outlines

🚀 Understanding Containers and Docker

This paragraph introduces the concept of containers, emphasizing their speed, portability, and isolation. It differentiates containers from virtual machines (VMs), highlighting that containers are more efficient and resource-friendly. The core of an operating system, the kernel, is explained, and its role in facilitating software-hardware communication is discussed. The benefits for developers are outlined, including the ability to work in multiple environments simultaneously without conflicts and the elimination of the 'it works on my machine' issue. The paragraph concludes with an introduction to creating a container using Docker, explaining the concept of container images and their layered structure, similar to source control. It also touches on the creation of custom images, the use of Dockerfiles, and the isolation of container file system layers for runtime changes.

🛠️ Container Orchestration and Deployment

The second paragraph delves into the topic of container orchestration, using Kubernetes as an example. It describes the declarative approach to defining the desired state of a deployment and allowing Kubernetes to manage the details. The paragraph also briefly mentions the potential for creating a personal container-based cloud and ends with a personal appeal for support to make video creation a full-time endeavor.

Keywords

💡Container

💡OS (Operating System)

💡VM (Virtual Machine)

💡Kernel

💡Docker

💡Dockerfile

💡Container Image

💡Container Registry

💡Orchestration

💡Deployment

Highlights

Containers are pre-configured environments that can run as soon as they are deployed.

Containers are faster to spin up and less resource-intensive compared to virtual machines (VMs).

Containers share the host's kernel, providing a more efficient and lightweight approach.

The core of any operating system is the kernel, which manages low-level tasks like CPU and memory management.

Containers allow developers to work in multiple environments simultaneously without local machine conflicts.

Containers ensure consistent environments across different machines, eliminating the 'it works on my machine' problem.

A container platform like Docker is needed to create and run containers.

Container images are formed with overlapping layers, similar to how source control tracks changes.

Dockerfiles are used to define the steps for creating a custom container image.

Each container has its own file system layer, allowing for runtime changes without affecting other containers.

Containers can be entered and interacted with, similar to a VM.

Communication between containers is facilitated by a virtualized network layer.

Containers can be tagged with unique identifiers for easy referencing and deployment.

Container images can be published to a container registry for storage and distribution.

Modern cloud platforms support deploying containers as standalone units.

Container orchestration platforms like Kubernetes allow for the creation of a container-based cloud.

Kubernetes manages the deployment state declaratively, handling the details of scaling and managing containers.

Transcripts

and I don't just mean your code and its libraries, I mean the OS too,

all pre-configured to run as soon as you deploy it.

That's a container. They're lightning fast, portable, yet

isolated environments that you can create in mere moments,

and after this video you're going to wonder how you ever developed and

deployed applications without them.

But that's just a VM!

No, firstly, please sit down. Secondly, no, not quite.

Okay, it's true that both give you an isolated environment where you can run

an OS, but containers are quite a bit quicker

to spin up, and typically less resource intensive.

If there was a scale with VMs on one side and normal native applications on the

other, containers would sit somewhere in the middle.

Virtual machines are pretty much just tricked by the host's hypervisor layer

into thinking they're actually running on real hardware.

Containers, on the other hand, are more friendly with the host system and just

emulate a minimal file system, while piggybacking resources by sharing

the host's kernel. The kernel is the... no, not that kernel.

Close enough. The kernel is the core of any operating system.

It's the bridge between what the software asks for and what the hardware

actually does. It's responsible for all sorts of

critical low-level tasks like CPU and memory management, device I/O,

file systems, and process management. So how does this all help us as

developers? Well, now that we have an OS at our

fingertips, we can work in several different

environments at once without having to really compromise anything on our local

machine. For example, we can maintain an old app

using the OS and package dependencies it was originally built on top of,

while also being able to use bleeding edge tech for our next multi-million

dollar project without having to worry about any conflicts in doing so.

We're also now able to put an end to the "it works on my machine" problem,

which is a pretty common phrase to hear in the tech industry, unfortunately.

Because a container is essentially a full OS at its core,

you can be sure that wherever it runs you're going to get the exact same

environment, whether it's on your colleague's

machine, your server machine, or somewhere in the cloud.

Now we've got the basics out of the way. Let's see how we can make a container

of our own. The first thing we're going to need is

a container platform. This will give us all the tools we need to create and run

our container, and for this video I'll be using Docker,

just because it's the most well supported. All containers run from a base

file system and some metadata, presented to us as a container image.

And the way container images work is kind of fascinating,

because they are formed with overlapping layers.

Here's a banana to kind of badly demonstrate this idea.

Okay, so in the context of a file system, I mean that instead of changing data at

its source, file changes are tracked by their

differences to the previous layer, and then composed together to achieve

the final system state. It's somewhat similar to how source

control tracks changes in your code. This concept is really powerful for

containers, because it lets us extend our custom image from

any previous image or image layer. There's loads of pre-made and officially

supported base images out there, that you can match to your project's core

requirements, and then add your own packages, code and configuration to.

To do this in Docker, we add the commands we want to execute to a

file called a Dockerfile. Docker will execute each command in sequence,

and then add each generated change to the final image as a new file system

layer, or a metadata layer. We can run as many containers as we

like from a single image. We can do this because when a container

is first created, the image's file system is extended with a new file system

layer, completely dedicated to that container.

This means that we can make any runtime changes we like, and it won't affect

other containers using that same image. What's more, this new layer will persist

until we delete the container, so we can stop and start them as we like,

without losing any data. We can even enter our running containers,

like we do with a VM. With Linux containers, for example,

we can start a shell prompt when executing it, giving us access to the

environment to explore and kind of just play around with as we please.

Communication between containers is usually pretty simple as well,

as most runtimes virtualize a network layer for you.

When our app is ready to be published into the world, we're going to want to

tag it with something unique, like a version, so that we can reference

it again later. We can then publish it to something

called a container registry, which is just like an online storage

warehouse for our images. By default, Docker assumes that you're

using the official Docker registry. However, this can be easily overridden

if you wish to use another. When it comes to deployment, many modern

cloud platforms have built-in support for deploying containers as

standalone units. Alternatively, you can install a

compatible container runtime on whatever machine you want to use, and

pull your image from the registry you pushed to earlier.

It does require a few more steps doing it this way, but you generally get better

value for money and quite a bit more control. If you

want to go even deeper, container orchestration platforms such as

Kubernetes essentially allow you to create your own container-based cloud.

You describe the desired state of your deployment declaratively,

and let Kubernetes handle the details of how to get there.

And that's it. Oh, I'd really love to make these videos full-time for you all,

and with enough support, that might just be possible. Thank you,

and I'll see you next time.

5.0 / 5 (0 votes)

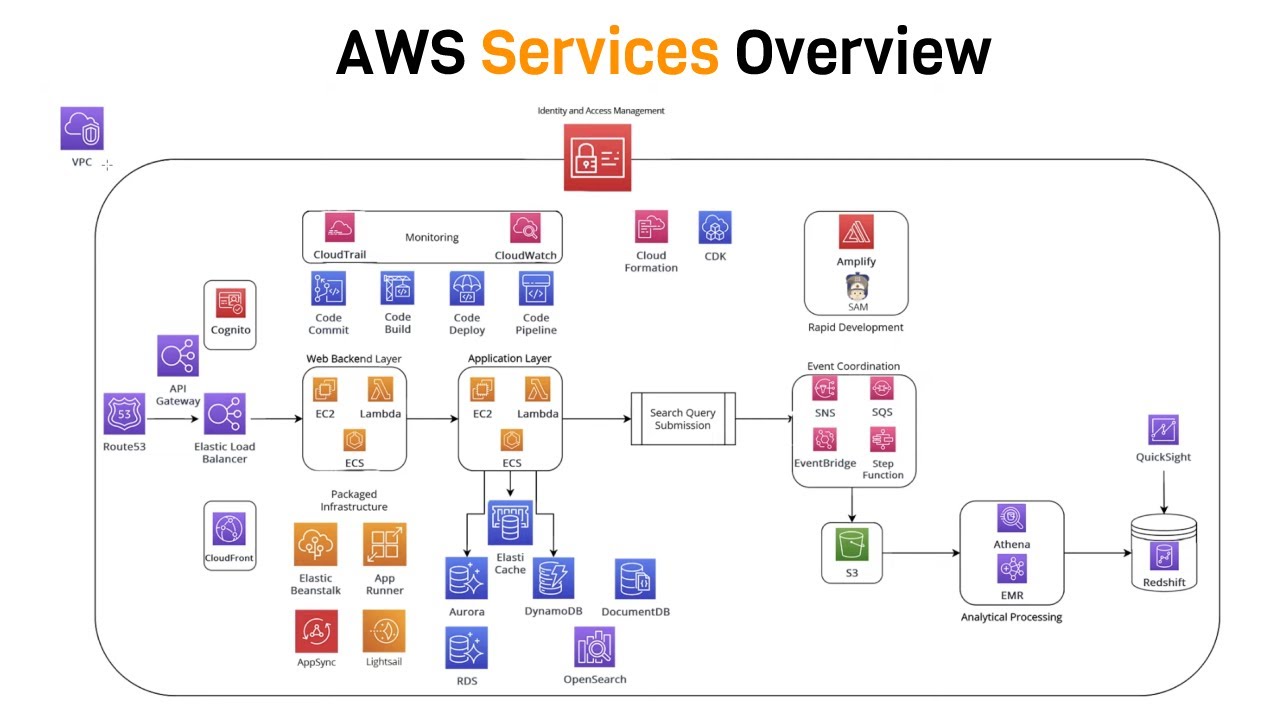

Intro to AWS - The Most Important Services To Learn

Angular Material Tutorial - 11 - Sidenav

Boosie Shows Boosie Town: 4 Homes He Built for His Kids on His 88 Acre Property (Part 1)

How to Play ANY Retro Game on iPhone! (Delta Emulator)

How Bridge Engineers Design Against Ship Collisions

Protect The Yacht, Keep It!