LangChain vs LlamaIndex vs OpenAI GPTs: Which one should you use?

Summary

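TLDR: This video explores how to use large language models (LLMs) effectively for application development. It compares building your own framework from scratch with using established platforms such as LangChain, LlamaIndex, and OpenAI. Building your own framework offers maximum freedom and control but requires significant technical expertise, time, and resources. Off-the-shelf platforms allow fast deployment but may struggle to deliver unique value. LangChain and LlamaIndex strike a balance between customization and ease of use: they integrate with different LLMs and data sources and provide strong data handling and retrieval capabilities, which makes them well suited to data-driven applications.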

Takeaways

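- 🌟 Building your own framework from scratch offers maximum freedom and control, but requires significant technical expertise, time, and resources.

- 🛠️ Pre-built platforms such as OpenAI assistants allow fast deployment but make it hard to deliver unique value; they are best for quickly building a proof of concept.

- 🔧 Forking and modifying an open-source approach makes it practical to implement complex techniques such as synthetic document generation and embedding-based retrieval.

- 🔄 Frameworks such as LangChain simplify prompt engineering and output parsing, improving development efficiency.

- 📈 LangChain's LangChain Expression Language (LCEL) syntax and its LangServe feature speed up prototyping and deployment.

- 🔍 LlamaIndex excels at processing and retrieving complex datasets, making it suitable for data-intensive applications.

- 🔗 LlamaIndex provides data connectors, indexing capabilities, and efficient retrieval methods that make it easy to connect data to language models.

- 📚 Combining LangChain and LlamaIndex makes it possible to build LLM applications across many domains.

- 💡 The right path depends on project goals, available resources, and specific requirements.

- 📈 Courses and hands-on examples offer a deeper look at how to apply LangChain and LlamaIndex.

- 🎯 Match the approach (building from scratch, an off-the-shelf platform, or a framework in between) to your project's needs.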

Q & A

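What is the difference between building your own framework and using established platforms (such as LangChain, LlamaIndex, and OpenAI assistants) for LLM application development?

- Building your own framework gives you invaluable freedom and control: you code everything from scratch, which suits long-term products where you want to fully own the IP and updates. Established platforms such as OpenAI assistants, LangChain, and LlamaIndex offer fast deployment and an easy-to-use experience, which suits users who want to integrate LLM capabilities quickly without deep technical involvement.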

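What is a retrieval-augmented generation (RAG) system, and how can it be implemented with the Buster library?

- A retrieval-augmented generation (RAG) system combines retrieval with generation to improve the quality of a language model's answers. With the Buster library, developers can build RAG systems more easily, for example by implementing the HyDE (Hypothetical Document Embeddings) technique. HyDE generates synthetic documents from the user's prompt and uses those documents' embeddings for retrieval, because they may land closer to relevant data points in the embedding space than the original query does.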
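To make the HyDE idea concrete, here is a minimal sketch of the retrieval step. It assumes a toy bag-of-words `embed` function in place of a real embedding model and a hypothetical `fake_generate` function in place of an LLM call; it illustrates the technique only and is not Buster's, LangChain's, or LlamaIndex's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_generate(query: str) -> str:
    """Hypothetical stand-in for an LLM asked to write a plausible answer to `query`.

    A real HyDE setup would prompt a model here; the answer is canned for the demo.
    """
    return "To regain access, open settings, choose security, and click reset password."


def hyde_retrieve(query: str, corpus: list[str]) -> str:
    """HyDE: retrieve using the embedding of a generated document, not the raw query."""
    hypothetical_doc = fake_generate(query)
    doc_vec = embed(hypothetical_doc)
    return max(corpus, key=lambda doc: cosine(doc_vec, embed(doc)))


corpus = [
    "Open settings, choose security, then click reset password to change it.",
    "Our office is closed on public holidays.",
]
query = "I forgot my login, what now?"
print(cosine(embed(query), embed(corpus[0])))  # 0.0: the raw query shares no words with the answer
print(hyde_retrieve(query, corpus))
```

The raw query and the relevant document share no vocabulary, so direct matching scores zero, while the hypothetical answer is phrased like the document and retrieves it. With real embeddings the effect is softer, which is why the answer above says the synthetic documents *may* land closer.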

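What is the LangChain Expression Language (LCEL), and how does it simplify LLM application development?

- LCEL is a coding syntax that lets developers chain components together simply by joining them with the pipe symbol (`|`). This makes it quick to prototype and to try different combinations of components, simplifying LLM application development.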
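The pipe idea can be sketched in plain Python by overloading `__or__`, so that `a | b` builds a new step that runs `a` and feeds its output to `b`. The `Step` class and the three components below are hypothetical; this mimics the idea behind LCEL and is not LangChain's actual `Runnable` implementation.

```python
from typing import Any, Callable


class Step:
    """Hypothetical composable unit: `a | b` runs a, then feeds its output to b."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose left to right, like a Unix pipe.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Hypothetical stand-ins for a prompt template, a model call, and an output parser.
prompt = Step(lambda d: f"Tell me a fact about {d['topic']}.")
fake_model = Step(lambda text: f"  MODEL SAYS: {text}  ")  # not a real LLM call
parser = Step(lambda text: text.strip())

chain = prompt | fake_model | parser
print(chain.invoke({"topic": "llamas"}))
```

Swapping one component (for example, replacing `parser` with another `Step`) changes a single element of the pipeline without touching the others, which is the fast-prototyping benefit described above.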

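How can LangChain help maintain user context in an application?

- LangChain provides tools such as prompt templates and output parsers. Prompt templates make it easy to inject variables and context into each prompt, and output parsers convert the language model's text responses into structured data such as JSON objects. These features suit applications that must maintain a user's context throughout a conversation, such as a medical chatbot or a math tutor.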
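Both ideas can be sketched with the standard library: a template that injects variables and earlier conversation turns into each prompt, and a parser that turns a JSON-formatted model reply into a dict. The template wording, the `(speaker, text)` history format, and the canned `reply` string are assumptions for illustration; the sketch uses `string.Template` and `json`, not LangChain's `PromptTemplate` or output-parser classes.

```python
import json
from string import Template

# Hypothetical template: variables and prior turns are injected as context.
TUTOR_PROMPT = Template(
    "You are a math tutor.\n"
    "Conversation so far:\n$history\n"
    "Student: $question\n"
    'Reply only with JSON of the form {"answer": ..., "hint": ...}.'
)


def render_prompt(history: list[tuple[str, str]], question: str) -> str:
    """Fill the template, flattening (speaker, text) pairs into the context block."""
    lines = "\n".join(f"{speaker}: {text}" for speaker, text in history)
    return TUTOR_PROMPT.substitute(history=lines, question=question)


def parse_json_reply(reply: str) -> dict:
    """Output parser: decode the outermost {...} span of the model's text."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    return json.loads(reply[start : end + 1])


history = [("Student", "What is 2 + 2?"), ("Tutor", "It is 4.")]
prompt = render_prompt(history, "And 4 + 4?")
# Canned stand-in for an LLM reply; a real app would send `prompt` to a model.
reply = 'Sure! {"answer": 8, "hint": "Double the previous result."}'
print(parse_json_reply(reply)["answer"])
```

To keep context across turns, the application appends each new `(speaker, text)` pair to `history` before rendering the next prompt.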

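What are LlamaIndex's strengths in handling complex datasets and advanced querying techniques?

- LlamaIndex's strength lies in its robust data management and manipulation features, which make it a powerful tool for data-intensive applications. It provides data connectors, data indexing, and efficient indexing and retrieval methods, which suit applications such as complex document Q&A systems, knowledge agents, and structured analytics.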
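The index-then-retrieve workflow can be sketched in a few lines: split documents into overlapping chunks, store a vector per chunk, and return the top-k most similar chunks for a query. The word-window chunk size and overlap, the bag-of-words vectors, and the `TinyVectorIndex` class are simplifying assumptions standing in for real chunking strategies and embedding models; this is not LlamaIndex's `VectorStoreIndex` API.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Only start a new window if it adds words beyond the previous window's overlap.
    return [" ".join(words[i : i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyVectorIndex:
    """Hypothetical in-memory index: stores (chunk, vector) pairs, ranks by cosine similarity."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add_document(self, text: str, size: int = 8, overlap: int = 2) -> None:
        """Chunk a document and index each chunk's vector."""
        for chunk in chunk_words(text, size, overlap):
            self.entries.append((chunk, vectorize(chunk)))

    def query(self, question: str, k: int = 2) -> list[str]:
        """Return the k chunks most similar to the question."""
        q_vec = vectorize(question)
        ranked = sorted(self.entries, key=lambda entry: cosine(q_vec, entry[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


index = TinyVectorIndex()
index.add_document(
    "The invoice total is due within 30 days. Late payments incur a 2 percent "
    "monthly fee. Contact billing for disputes."
)
index.add_document("Our API rate limit is 100 requests per minute per key.")
print(index.query("What is the rate limit for the API?", k=1))
```

The overlap keeps a sentence that straddles a chunk boundary partially visible in both neighbors; choosing chunk size and overlap well is part of what "better chunking strategies" refers to.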

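What types of projects are OpenAI assistants (built on models such as GPT-3.5 Turbo and GPT-4) suited for?

- OpenAI assistants suit projects that need fast deployment and easy access to LLM capabilities, especially for developers who don't want deep technical involvement or who want to quickly build a proof of concept to show others. They provide a streamlined, user-friendly experience that lets you build powerful applications quickly.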

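Why is LangChain described as a good balance between customization and ease of use?

- LangChain integrates seamlessly with different LLM providers and external data sources and offers user-friendly tools for prompt engineering and output parsing, giving developers a balance between customization and ease of use. These features make it a strong choice for building a wide range of LLM-powered applications.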

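How should you choose the right framework or platform when developing an LLM application?

- The choice depends on the project's specific needs, resources, and constraints. If you need full control and IP ownership, building from scratch may be best. If you need fast deployment and a simpler development process, OpenAI assistants or other pre-built platforms may fit better. LangChain and LlamaIndex occupy the middle ground, offering customization and data-handling capabilities, respectively.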

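In which scenarios is each of LangChain and LlamaIndex most suitable?

- LangChain suits applications that need flexibility, customized prompts, and conversational context, while LlamaIndex suits data-intensive applications that need advanced retrieval techniques, such as complex document Q&A systems and knowledge-augmented chatbots.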
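One such advanced retrieval technique, the sub-question query engine described in the transcript, breaks a complex query into sub-questions, answers each from a different data source, and then compiles the results. The sketch below assumes a rule-based `decompose` function, two dict-backed "data sources" with invented example data, and a string-joining synthesis step, all hypothetical stand-ins for what an LLM and real indexes would do; it is not LlamaIndex's `SubQuestionQueryEngine` API.

```python
# Hypothetical data sources with invented example facts, one per (topic, company).
REVENUE_SOURCE = {"acme": "Acme revenue in 2023 was $10M.", "globex": "Globex revenue in 2023 was $7M."}
HEADCOUNT_SOURCE = {"acme": "Acme has 120 employees.", "globex": "Globex has 80 employees."}
SOURCES = {"revenue": REVENUE_SOURCE, "headcount": HEADCOUNT_SOURCE}
ENTITIES = ("acme", "globex")


def decompose(query: str) -> list[tuple[str, str]]:
    """Stand-in for an LLM: emit one (topic, entity) sub-question per pair mentioned."""
    q = query.lower()
    entities = [e for e in ENTITIES if e in q]
    topics = [t for t in SOURCES if t in q]
    return [(topic, entity) for topic in topics for entity in entities]


def answer_sub_question(topic: str, entity: str) -> str:
    """Route each sub-question to the data source for its topic."""
    return SOURCES[topic].get(entity, f"No {topic} data for {entity}.")


def sub_question_query(query: str) -> str:
    """Decompose, answer each sub-question, then compile the answers."""
    answers = [answer_sub_question(t, e) for t, e in decompose(query)]
    return " ".join(answers)  # a real engine would ask an LLM to synthesize a final answer


print(sub_question_query("Compare revenue and headcount for Acme and Globex"))
```

Each sub-question touches exactly one source, so adding a new source only requires a new routing entry rather than a rewrite of the whole query pipeline.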

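What are the main challenges in developing LLM applications?

- The main challenges include the need for technical expertise, the investment of resources and time, and the trade-off between full customization and fast deployment. Developers must also handle the complexity of data processing, maintaining user context, and integrating external data sources.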

Outlines

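🌟 Building your own framework vs. using existing platforms

This section compares building your own framework with using existing platforms (such as LangChain, LlamaIndex, and OpenAI assistants) when developing applications with large language models (LLMs). Building your own is technically demanding and time-consuming, but it offers great freedom and control, suiting long-term product development with full IP ownership. Existing platforms offer fast deployment and ease of use, suiting rapid prototyping and demos, but they create strong long-term dependence on the provider.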

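🛠️ Features and use cases of LangChain and LlamaIndex

This section details the features and use cases of LangChain and LlamaIndex. LangChain stands out for its flexibility and easy-to-use prompt engineering tools, making it suitable for a wide range of LLM-powered applications. LlamaIndex focuses on sophisticated data handling and retrieval, making it especially suited to data-intensive applications such as retrieval-augmented generation (RAG) systems built on your own data. LangChain also offers debugging tools that simplify development, while LlamaIndex offers fine-tuning and embedding optimizations and is free, open source, and continually developed.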


Keywords

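💡Large language models (LLMs)

💡Building a framework

💡LangChain

💡LlamaIndex

💡OpenAI assistants

💡Prompt engineering

💡Data sources

💡Retrieval-augmented generation (RAG)

💡Code interpreter

💡Customization

💡Open source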

Highlights

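Comparing building your own framework with using established platforms such as LangChain and OpenAI

Building a framework from scratch requires significant technical expertise, time, and resources

Building your own lets you easily fork and edit open-source approaches, such as the Buster repository used for an AI tutor

Implementing the HyDE technique, which generates synthetic documents based on the user's prompt

The LangChain and LlamaIndex frameworks can incorporate complex techniques in one line of code

OpenAI assistants, built on models such as GPT-3.5 Turbo and GPT-4, provide a streamlined, user-friendly experience

LangChain supports prompt engineering, a crucial aspect of working with LLMs

LangChain's tools simplify prompt construction and output parsing

LangChain Expression Language (LCEL) lets you chain components with a simple pipe symbol

LangServe is designed to simplify deploying chains

LlamaIndex excels at sophisticated data handling and retrieval

LlamaIndex provides data connectors for integrating diverse data sources

LlamaIndex supports efficient indexing and retrieval methods, better chunking strategies, and multimodality

LlamaIndex suits applications such as data-augmented chatbots and knowledge agents

Recursive retrieval lets an application navigate across multiple chunks to find precise information

Llama Packs are a collection of real-world RAG applications ready for quick deployment

Choosing the best framework depends on project goals and available resources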
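Recursive retrieval, listed in the highlights, can be illustrated with a small sketch: retrieval starts at a top-level node (for example, a document summary), and any relevant node that links to child nodes is expanded until leaf chunks holding the actual text are reached. The `Node` structure, the word-overlap scoring, the `min_score` threshold, and the example nodes are hypothetical; LlamaIndex's own implementation differs.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """Hypothetical retrievable unit; non-leaf nodes link to finer-grained child nodes."""

    node_id: str
    text: str
    children: list["Node"] = field(default_factory=list)


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, text: str) -> int:
    """Toy relevance score: number of distinct words shared by query and text."""
    return len(tokenize(query) & tokenize(text))


def recursive_retrieve(
    query: str, nodes: list[Node], min_score: int = 1, seen: Optional[set[str]] = None
) -> list[Node]:
    """Descend from relevant nodes into their children, returning the leaf chunks reached."""
    if seen is None:
        seen = set()  # guards against revisiting nodes when the graph has cycles
    results: list[Node] = []
    for node in nodes:
        if node.node_id in seen or overlap_score(query, node.text) < min_score:
            continue  # skip visited nodes and prune irrelevant branches
        seen.add(node.node_id)
        if node.children:
            results.extend(recursive_retrieve(query, node.children, min_score, seen))
        else:
            results.append(node)
    return results


leaf_rev = Node("q3-rev", "Q3 revenue grew 12 percent to 4.2 million")
leaf_cost = Node("q3-cost", "Q3 operating costs fell 3 percent")
table = Node("q3-table", "Q3 financial table: revenue and costs", [leaf_rev, leaf_cost])
hr = Node("hr", "Hiring plan and office locations", [Node("hr-1", "We plan to hire 20 engineers")])
report = Node("report", "Annual report summary covering Q3 revenue, costs and hiring", [table, hr])

hits = recursive_retrieve("What was Q3 revenue?", [report], min_score=2)
print([n.node_id for n in hits])  # ['q3-rev']
```

Starting from a coarse summary and only descending into matching branches lets the search reach a precise chunk (here, the revenue row inside a table) without scoring every chunk in the corpus.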

Transcripts

Are you using large language models, or LLMs, in your work and seeking the most effective way to leverage their power for your application? Then this video is for you. Let's dive into LLM application development, comparing the path of building your own framework from scratch with utilizing established platforms like LangChain, LlamaIndex, and OpenAI assistants.

The first obvious choice is to construct your own framework from the ground up: you need to code everything. This route, while demanding in terms of technical expertise, time, and resources, gives you invaluable freedom and control. Plus, you can easily fork and edit open-source approaches, as we did for our AI tutor with Buster, a useful repository if you aim to build retrieval-augmented generation, or RAG, systems. Imagine implementing the HyDE technique, which generates synthetic documents based on the user's prompt and uses the generated documents' embeddings for retrieval, since they may be closer to a data point in the embedding space than the original query.

It's a challenging technique to implement from scratch, but it's possible to incorporate it in frameworks like LlamaIndex and LangChain in one line of code. If you aim for a very long-term product whose IP and updates you can fully own, then going from scratch is the way to go. The result will be the perfect fit for your specific requirements, but you will encounter many challenges you didn't expect, and it will take much more time to develop.

If you do not have unlimited time and resources, then you may want to take a look at pre-built platforms, like OpenAI GPTs. If quick deployment and accessibility are your priorities, this path is the go-to. OpenAI assistants, including GPT-3.5 Turbo and GPT-4, provide a streamlined and user-friendly experience. You can build very powerful apps super quickly, but they will be quite dependent on OpenAI, and you will hardly be able to bring unique value. This is definitely not an ideal long-term option, but it's a powerful way to quickly build a proof of concept and show it to others. They are perfect for those eager to integrate LLM capabilities swiftly and efficiently into their applications without the complexities of building and training models and frameworks from scratch. Plus, the code interpreter, knowledge retriever, and custom function calling they provide allow you to build quite a powerful app, especially if you can code your own APIs or use external ones. The cost, while present, is also generally more manageable than undertaking the entire development process on your own. It's going to cost you a few dollars to make it, and then it will depend on how much you share it with others, obviously.

But what if you need something more tailored than off-the-shelf solutions, yet not as time-intensive as building from scratch? This is where LangChain and LlamaIndex come into play, but you need to understand the difference between the two.

LangChain offers a powerful and flexible framework for building applications with LLMs. It stands out for its ability to integrate seamlessly with various LLM providers like OpenAI, Cohere, and Hugging Face, or your own, as well as data sources such as Google Search and Wikipedia. Use LangChain to create applications that can process user input text and retrieve relevant responses, leveraging the latest NLP technology. A key advantage of LangChain is its support for prompt engineering, a crucial aspect of working with LLMs. By constructing effective prompts, you can significantly influence the quality of the model's output. LangChain simplifies this process with tools like prompt templates, which allow for the easy integration of variables and context into the prompts. Additionally, output parsers in LangChain transform the language model's text responses into structured data like JSON objects, which you don't have to code yourself. LangChain is also quite useful for applications that need to maintain a user's context throughout a conversation, similar to ChatGPT, like a medical chatbot or a math tutor.

They also recently introduced LangChain Expression Language, or LCEL for short, a coding syntax where you can create chains by simply piping components together using the bar symbol. It enables swift prototyping and trying different combinations of components. They also introduced the LangServe feature, designed to facilitate the chain deployment process using FastAPI, with great features like templates for different use cases and a simple chat interface. In summary, LangChain is a nice middle ground, balancing customization and ease of use. Its flexibility in integrating with different LLMs and external data sources, coupled with its user-friendly tools for prompt engineering and data parsing, makes it an ideal choice for building a wide range of LLM-powered applications across various domains.

Another advantage is their debugging tools, which simplify the development process and reduce the technical burden significantly. If you are curious about LangChain, we shared two free courses using it in the Gen AI 360 course series, linked in the description below.

In contrast, LlamaIndex excels in sophisticated data handling and retrieval capabilities. It's particularly suited for projects where you must handle complex datasets and use advanced querying techniques. LlamaIndex's strength lies in its robust data management and manipulation features, making it a powerful tool for data-intensive applications. In practical terms, LlamaIndex offers key features such as data connectors for integrating diverse data sources, including APIs, PDFs, and SQL databases. Its data indexing capability organizes data to make it readily consumable by LLMs, enhancing the efficiency of data retrieval. This framework is particularly beneficial for building RAG applications, where it acts as a powerful data framework connecting data with language models, simplifying programmers' lives. LlamaIndex supports efficient indexing and retrieval methods, better chunking strategies, and multimodality, making it suitable for various applications, including document Q&A systems, data-augmented chatbots, knowledge agents, structured analytics, and more. These tools also make it well suited for advanced use cases like multi-document analysis and querying complex PDFs with embedded tables and charts. One example query tool is the sub-question query engine, which breaks down a complex query into several sub-questions and uses different data sources to respond to each. It then compiles all the retrieved documents to construct the final answer.

As I mentioned, the LlamaIndex framework offers a wide range of advanced retrieval techniques. More specifically, there's recursive retrieval, which enables the application to navigate through a graph of interconnected nodes to locate precise information spread across multiple chunks. They also introduced the concept of Llama Packs, a collection of real-world RAG-based applications ready for deployment and easy to build on top of. These were just a few concrete examples, but there are many other techniques the library facilitates for us, which makes it really useful. In essence, LlamaIndex is your go-to for a RAG-based application, also offering fine-tuning and embedding optimizations, and the best thing is that it's free, open source, and continually developed.

Each of these paths offers its own set of advantages and challenges. Building your own framework from scratch gives you complete control but demands substantial resources and expertise. OpenAI assistants offer an accessible and quick-to-deploy option, suitable for those looking to integrate LLMs without deep technical involvement or to create a quick proof of concept. LangChain provides a balance of customization and ease of use, ideal for developers seeking flexibility in their LLM interactions in most cases. LlamaIndex stands out for its robust data handling and retrieval capabilities, perfect for data-centric applications like RAG. In the end, the choice boils down to your project's and your company's specific requirements and constraints. The key is to align your decision with the project's goals and the resources at your disposal. They each have a purpose, and I have personally used all of them for different projects. We also have detailed lessons on LangChain and LlamaIndex with practical examples in the course we built in collaboration with Towards AI, Activeloop, and the Intel Disruptor Initiative. I hope this video was useful in helping you choose the best framework for your use case. Thank you for watching!

5.0 / 5 (0 votes)

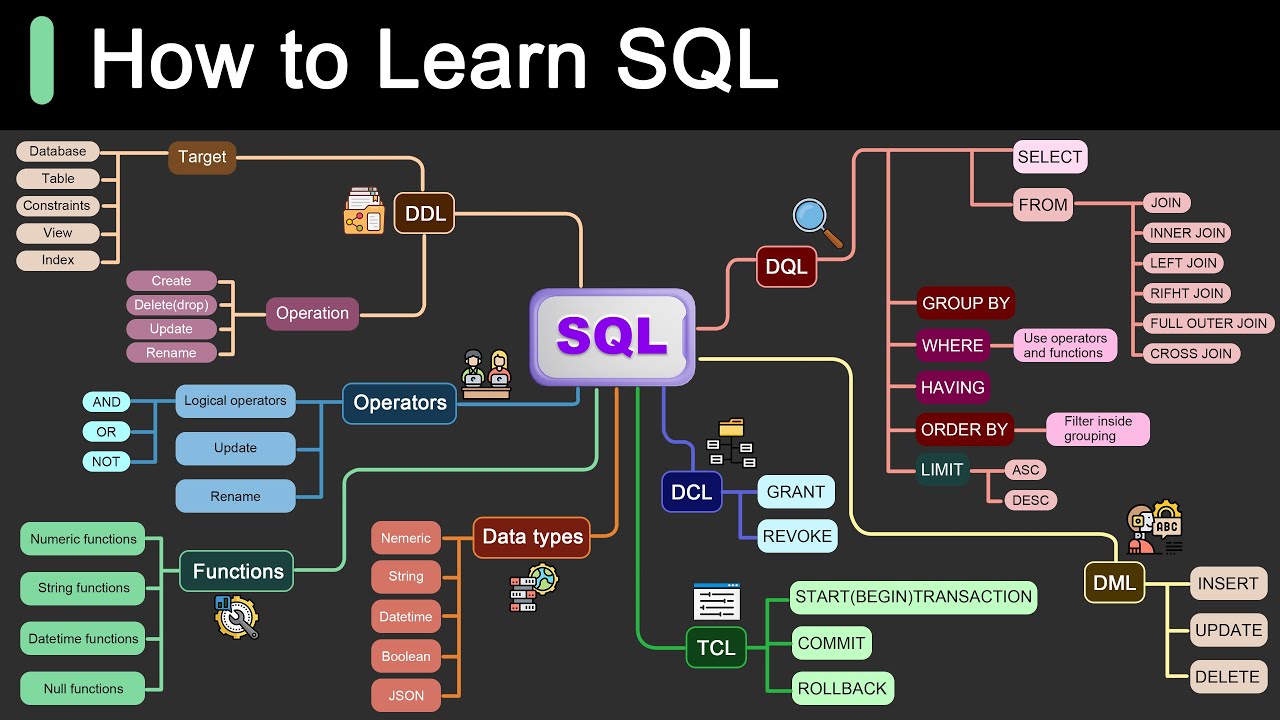

Roadmap for Learning SQL

Microsoft's New PHI-3 AI Turns Your iPhone Into an AI Superpower! (Game Changer!)

Beginner's Guide to ControlNets in ComfyUI

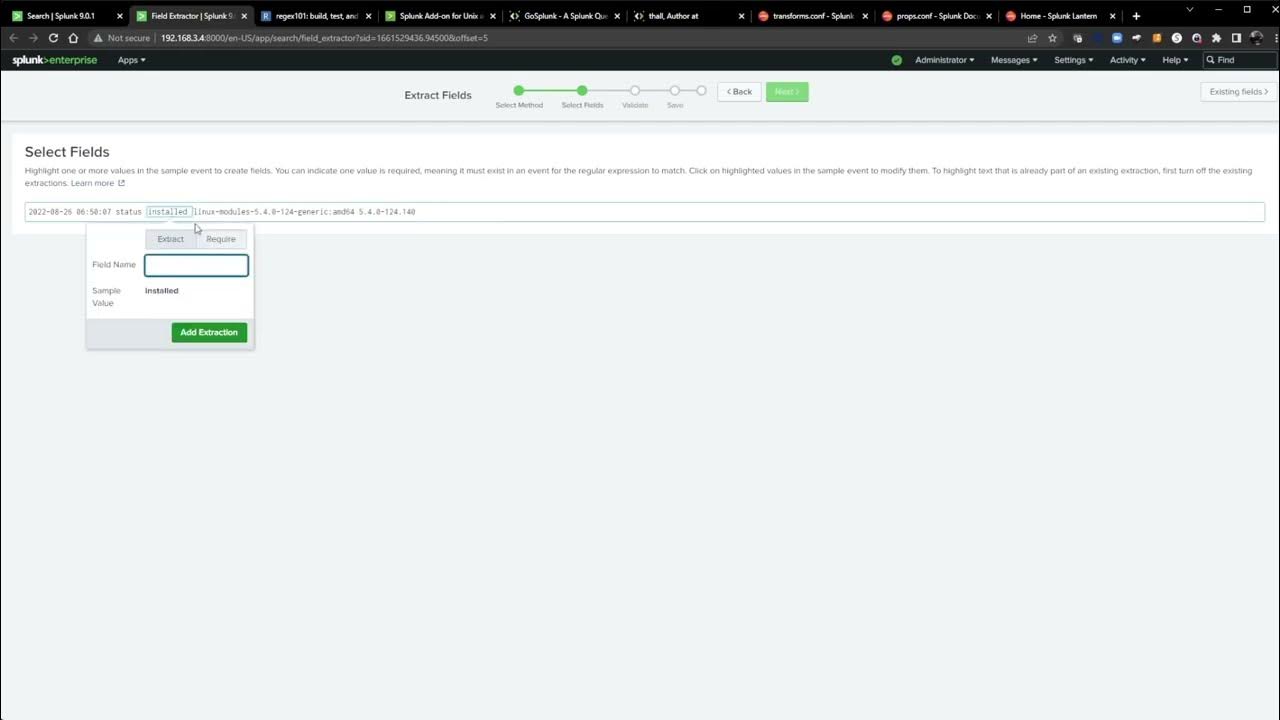

Splunk Field Extraction Walkthrough

Risk-Based Alerting (RBA) for Splunk Enterprise Security Explained—Bite-Size Webinar Series (Part 3)

【人工智能】中国大模型行业的五个真问题 | 究竟应该如何看待国内大模型行业的发展现状 | 模型 | 算力 | 数据 | 资本 | 商业化 | 人才 | 反思