DDL: Data Mesh - Lessons from the Field

Summary

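TLDR: In this episode of the DDL show, Darren Hacken, engineering director at AutoTrader, talks with host Shirshanka, co-founder and CTO of Acryl, about how the data field has evolved and about the concept of data mesh. Darren describes his own career path and how AutoTrader improved its data capabilities by decentralizing its data teams. They also cover how data mesh works in practice, including the use of data products and metadata management to improve data governance and observability. Darren is optimistic about data mesh and believes it will help organizations build and use data products in a more decentralized way.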

Takeaways

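- 🎉 Darren Hacken is an engineering director at AutoTrader, the UK's largest automotive platform, where he is responsible for platform and data.

- 🚀 Darren was not interested in data work at first, but he became passionate about the field as big data technologies emerged.

- 🌐 AutoTrader's data setup is relatively decentralized: there are several platform teams, plus data teams that focus on specific problem domains.

- 🔄 The data teams evolved from centralized to decentralized, which reflects how data management has to adapt as an organization grows.

- 🤖 Data mesh is a sociotechnical concept. It emphasizes culture and team structure and describes how to achieve decentralization.

- 🛠️ When AutoTrader implemented data mesh, it found a gap between centralized technical tooling and the need to decentralize.

- 📊 AutoTrader uses Kubernetes and DataHub to build data product thinking and practices that improve data discoverability and governance.

- 🔧 Implementing data mesh created new challenges in naming data products and in data modeling practices.

- 🌟 Darren considers the data product the most powerful concept in data mesh, because it helps organize data and put it to better use.

- 🚫 Data mesh cannot be implemented overnight. Overcoming the current challenges takes time and continued technical progress.

- 🔮 Darren hopes data mesh and data products will drive more data use and innovation inside organizations, especially in AI and ML.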

Q & A

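What is Darren Hacken's current role?

-Darren Hacken is an engineering director at AutoTrader, responsible for platform and data.

What is AutoTrader's main business?

-AutoTrader is an automotive marketplace and technology platform. It is the UK's largest automotive platform and covers buying and selling cars and related services.

What are Darren Hacken's views on the data field?

-Darren Hacken cares deeply about the data space. He believes data can shape and change organizations, and that the field keeps growing with technologies such as AI and LLMs.

How has Darren Hacken's career changed over time?

-Early in his career, Darren Hacken disliked data work because it meant point-and-click, GUI-based ETL tools. As big data technologies emerged, he found the data field very appealing, and it eventually became the area he loves.

What are the data products Darren Hacken mentions?

-A data product bundles data together with its related capabilities. It helps an organization manage and use data more effectively and supports data discovery, analysis, and governance.

How do AutoTrader's data teams work?

-AutoTrader's data teams are decentralized. There are several platform teams and several data teams, each focused on a business domain such as advertising, user behavior, or vehicle pricing. The data teams build data products and provide self-serve analytics.

How does Darren Hacken view data governance and metadata management?

-Darren Hacken sees data governance and metadata management as key requirements once data is decentralized. This matters most for establishing clear ownership and dependencies between data products and for ensuring data quality and security.

Which technologies does Darren Hacken mention?

-Darren Hacken mentions dbt, Kubernetes, and DataHub, which helped his teams create, manage, and govern data products.

What does Darren Hacken hope for the future of the data field?

-Darren Hacken hopes the data product concept will become more widely adopted. He would also like to see more technology that supports decentralization and makes data management and governance easier.

How does Darren Hacken view the challenges in the data field?

-Darren Hacken sees the main challenge as keeping data quality and practices to a high standard, especially without a central team to enforce them. Naming and data modeling are also ongoing challenges.

What does Darren Hacken think about data contracts?

-Darren Hacken finds data contracts an interesting area. His teams currently use data contracts mostly implicitly, through standardized approaches and validators that detect schema changes. He is open to how data contracts develop in the future.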
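In the transcript, Darren describes these implicit contracts as standardized Avro schemas on Kafka, with validators that catch schema changes. The sketch below shows what one such validator could check. It is a rough illustration, not AutoTrader's tooling. It compares two Avro record schemas parsed from JSON and reports changes that would prevent a consumer using the new schema from reading data written with the old one. It simplifies Avro's schema-resolution rules: it ignores type promotions such as `int` to `long`, union resolution, and nested records. The function name `backward_incompatibilities` is hypothetical.

```python
"""Simplified Avro-style backward-compatibility check (illustrative sketch)."""
import json
from typing import List


def backward_incompatibilities(old_schema_json: str, new_schema_json: str) -> List[str]:
    """Return reasons why data written with the old schema can't be read with the new one.

    Simplifications: only top-level record fields are compared, and a field kept
    in both schemas must have an identical type (Avro's promotions such as
    int -> long and union resolution are not modelled).
    """
    old_fields = {f["name"]: f for f in json.loads(old_schema_json)["fields"]}
    new_fields = {f["name"]: f for f in json.loads(new_schema_json)["fields"]}
    problems: List[str] = []
    for name, field in new_fields.items():
        if name not in old_fields:
            # Old records lack this field, so the reader needs a default to fill it in.
            if "default" not in field:
                problems.append(f"added field '{name}' has no default")
        elif field["type"] != old_fields[name]["type"]:
            problems.append(
                f"field '{name}' changed type from "
                f"{old_fields[name]['type']!r} to {field['type']!r}"
            )
    # Fields dropped from the new schema are skipped when reading old records,
    # so they are not reported.
    return problems


if __name__ == "__main__":
    old = json.dumps({
        "type": "record", "name": "Listing",
        "fields": [{"name": "id", "type": "string"}, {"name": "price", "type": "int"}],
    })
    new = json.dumps({
        "type": "record", "name": "Listing",
        "fields": [
            {"name": "id", "type": "string"},
            {"name": "price", "type": "string"},   # type change
            {"name": "colour", "type": "string"},  # added without a default
            {"name": "mileage", "type": ["null", "int"], "default": None},
        ],
    })
    for problem in backward_incompatibilities(old, new):
        print(problem)
```

In CI, a check like this would compare a producer's proposed schema with the one currently in use for the Kafka topic. A schema registry such as Confluent Schema Registry can enforce the full Avro compatibility rules when a schema is registered.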

Outlines

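🎤 Opening and introductions

The episode opens with the host saying how excited he is about the topic and welcoming guest Darren Hacken. Darren is an engineering director at AutoTrader, responsible for platform and data. The host, Shirshanka, is co-founder and CTO of Acryl and founder of the DataHub project. Darren explains how he got into data and how he went from disliking data work to being passionate about it.

🔍 How the data teams are structured and run

Darren describes AutoTrader's data team structure: platform teams, plus data teams that focus on specific domains. He emphasizes that the data teams are decentralized and that a data platform supports data capabilities across the organization. He also covers how the data teams work with other teams and how teams are organized around problems.

🌐 Understanding and practicing data mesh

Darren explains that he sees data mesh as a sociotechnical practice and a cultural shift. He mentions where data mesh came from and how it helps organizations decentralize. He then discusses how his teams began applying data mesh principles, in particular by moving the technical architecture from a centralized model toward decentralized data products.

🛠️ Governing data products and the challenges involved

Darren discusses the challenges of implementing data mesh, especially in data governance, metadata management, and observability. He notes where existing tooling fails to support decentralization and explains how his teams use metadata and DataHub to close those gaps.

🔄 Creating and managing data products

Darren explains how his teams create and manage data products with Kubernetes as the control plane. He describes how data product metadata is handled through automation and code, and how DataHub is used to collect and connect data products.

🤔 Data mesh challenges and the road ahead

Darren describes the architectural strain data mesh can put on an organization and the difficulty of keeping data practices at a high standard without a central team. He also covers the challenges of naming and data modeling, and the implicit way his teams use data contracts today.

🚀 The future of data mesh

Darren is excited about the future of data mesh and data products. He expects data product thinking to help organizations make better use of data, and data mesh to shorten time to market and improve responsiveness to the market. He also mentions how AI and data products can reinforce each other, and he is optimistic about where the technology is heading.

🙌 Closing and thanks

At the end of the episode, host Shirshanka thanks Darren for joining and sharing his experience, and says he looks forward to working together in the future. They discuss the future of data products and data mesh, and how community and open-source projects can advance these ideas.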

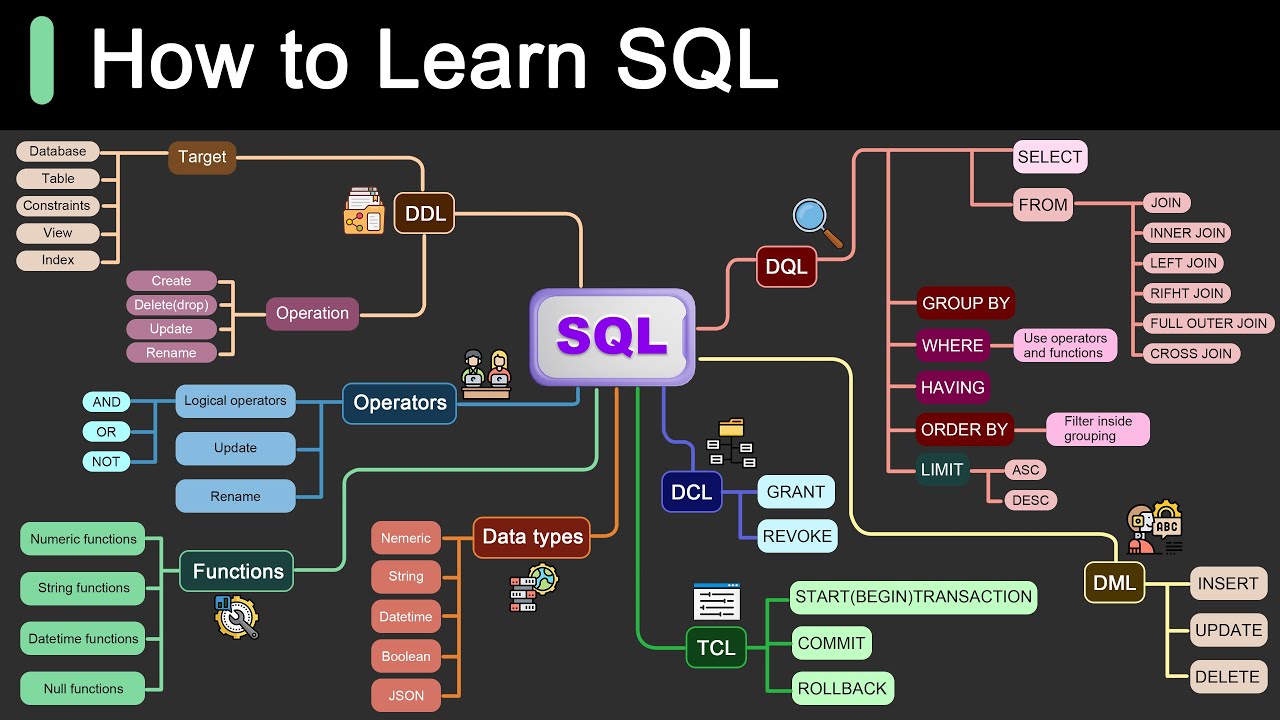

Mindmap

Keywords

💡Data Mesh

💡Data Products

💡Metadata

💡Data Governance

💡Data Platform

💡Data Teams

💡Data Ownership

💡Data Quality

💡Data Discovery

💡Data Contracts

💡Data Architecture

Highlights

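Darren explains why he is passionate about data and describes his role and responsibilities at AutoTrader.

Darren describes how his career changed, from disliking data work early on to becoming a leader in the data field.

AutoTrader's data teams are decentralized, with dedicated data teams for domains such as advertising and user behavior.

Darren explains the concept of data products and how they enable collaboration and data sharing between teams.

AutoTrader faced challenges in building its data platform, especially around technical boundaries and data governance.

Darren discusses the concept of data mesh and how it helps organizations decentralize data.

Darren describes AutoTrader's experience implementing data mesh, including the technical challenges and the cultural change.

Data governance, metadata management, and observability all matter when implementing data mesh.

Darren describes using Kubernetes as the control plane for data products and how automation improves efficiency.

The future of data mesh and how it affects data use and product development inside organizations.

Darren's view of the role data products and data contracts play in data mesh, and where they are heading.

The challenges of data mesh, including maintaining data quality and the practical difficulties involved.

Darren's view of where the data mesh concept is going and how it adapts to a changing technology landscape.

How data mesh helps organizations make better use of data and make faster, better decisions.

Darren is optimistic about the future of data mesh and data products and looks forward to further technical progress.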

Transcripts

[Music]

Hello everyone, and welcome to episode four of the DDL show. I'm so excited that we're going to be talking about a topic that used to be exciting and has stopped being exciting, and that in itself is exciting. So I'm super excited to bring on Darren Hacken. Darren, I think our first conversation was literally in the Data Mesh Learning group, the first time we met, so this is a full circle. I'm super excited to welcome you to the show. Darren is an engineering director heading up platform and data at AutoTrader, and I'm your host, Shirshanka, co-founder and CTO at Acryl and founder of the DataHub project. So Darren, tell us about yourself and how you got into data.

Thank you for having me today. So my name is Darren, and I work for a company in the United Kingdom called AutoTrader. We're an automotive marketplace and technology platform, the UK's largest automotive platform, so buying and selling cars, that kind of thing. One of the areas I care about deeply is the data space, so here at AutoTrader I look after our data platform and the capabilities we need in order to surface data. I've been working in data a long time now, maybe eight or nine years. Funny story: I vowed I would never work in data. When I started my career I worked in fintech in a data team, and I absolutely hated it because it was all GUI-based ETL tools. I got out of there as fast as I possibly could and said never again. I love engineering; I'm a coder, and I needed to get away and do something else.

You don't like pointing and clicking?

Clearly I didn't like pointing and clicking. I like code. Then data got really sexy: big data arrived, the technology changed, and I now think it's one of the most exciting areas of technology. So "never say never" is probably my motto. I always find it a funny starting point that I left a role saying "never again", and here I am. So yes, I'm passionate about data. I really think it's one of those things that can shape and change organizations, and it's growing all the time, with things like AI and LLMs and the hype cycles around them. Thanks for having me.

They do say data has gravity. Normally that means it pulls other data close to it, but clearly people get attracted to it too and can never leave. I was the same way. I never set out to go into data, and then I wasn't able to leave. I was an engineer on the online data infrastructure teams at Yahoo, working on display ads and real-time bidding on ads. Then I was offered the chance of a lifetime to go and rebuild LinkedIn's data infrastructure. I didn't actually know what data meant at that point. Honestly, I was scared of databases, because it's hard to build something that's supposed to be a source of truth. Wait, you're responsible for making sure the write actually made it to disk, actually got flushed, and was replicated three times so that no one loses an update? That seems like a hard problem. That was the mission impossible I went to LinkedIn for, and I never left; I've been in data this whole time. So I can totally relate. You never escape the gravity.

You do not.

So you're leading big teams at AutoTrader right now, platform and data. Tell me a little about what that team does. Having talked to so many data leaders around the world, it seems clear to me that all data teams are similar, but no two are exactly the same. So walk our audience through what the data team does, who the surrounding teams are, and how the data team interacts with them.

Interestingly, AutoTrader as an organization has been around for about 40 years. It started as a magazine that you could pick up in your local store. That means that as technology evolved over the decades, the company has gone through many chapters of it. Today we're relatively decentralized in our data team setup, and I guess we'll get into that more when we talk about data mesh. We have several platform teams. One of them is predominantly made up of engineers and SRE-type folks, and they build what we call our data platform. That's the product name, I guess, for the bundle of technology that helps drive data capabilities across the organization. That might be building data products, which we can get into later; it could be metadata management, or how to create security policies around data. But crucially, their job is to build capabilities that let other people use them and build technology. Beyond that, we try to keep data teams close to the domain of an area or a problem. So we may have data teams that focus a lot on advertising or user behavior, or more on vehicles, pricing, and fulfillment-type problems. On those teams we tend to have engineers, including engineers who specialize in data, plus scientists and analysts. They're grouped as a discipline and managed together from a craft perspective, but in terms of how they actually work, we tend to form them around problems, like pricing, as I said earlier. They might do analytics, self-serve analytics, product analytics, machine learning, feature engineering, very much that kind of thing, and we try to keep it as close to engineering as possible. So it's very much a decentralized play; that's been our current generation of peopleware and team topologies.

And by the way, for the audience listening in, please feel free to ask questions, and we'll try to pull them up as they come in. This is meant to be me talking to Darren, Darren talking to me, and all of you able to take part in the conversation. So as we keep talking about this topic, keep asking questions, and we'll try to pull them up and combine them.

So Darren, you talked a little about how the teams were structured, and it really resonated with how LinkedIn evolved over the years I was there. We started out with a single data team that was responsible for both the platform and the business. That team made decisions like which warehousing technology to use and how to go about it, but it also built the executive dashboard and the foundational data sets. We had so many debates about whether to call them "foundational" or "gold", but the concept was the same: you build the canonical business model from which you want all insights, analytics, and AI to be derived. Over the years, that centralization caused a lot of stress, and we had to split the responsibilities apart. We ended up with a model that had a data-unaware, or semantics-unaware, team responsible only for the platform. Sub-teams emerged out of that original team, and some of them were fully embedded into product delivery teams. That gave them a local loop: the product gets built, data comes out of it, and the whole loop of creating insights, models, and features and shipping them back into the product was owned and operated by a specific team. It looks like that's where you've ended up as well.

That's spookily similar. We definitely started out more centralized, and then these teams came out of that centralized model. We built a team around user behavior and advertising, that went really well, the people felt a lot more connected, and it evolved from there. I think a lot of this just comes from scale. My organization is nowhere near the scale of where you were previously working, Shirshanka, but we definitely find that the hungrier an organization gets for data, the less a centralized team can keep up. With scarce resources and everyone fighting over the same thing, it gets really hard to decide: do I invest in the finance team, or in advertising, or in marketing? Eventually, partitioning your resources in some way feels inevitable, because otherwise it becomes too hard.

Cool. So let's talk about the topic of the day. Now that we understand how the teams have evolved and what your teams do day to day, what does data mesh mean to you?

It's a really good point that we started with teams and culture, because I think that is the heart of what data mesh is. I used to work for Thoughtworks, where Zhamak came up with data mesh. I wasn't working there at the time, but I remember reading it. We were already on a journey: we needed to decentralize, our platform was really important to us, we needed capabilities, and we wanted more people to use them. In fact, we were succeeding at decentralizing and scaling. But as we did that, we were entering new territory that few people had really talked about. So for me, data mesh is a sociotechnical thing, a cultural thing, much like DevOps. Zhamak has done a great job describing how to get there, with data products and so on. But one of the great things she did by talking about data mesh was to build a lexicon, a grammar, a shared way for all of us to communicate. Shirshanka, you and I met in a data mesh community, and we were immediately able to talk at a level we simply couldn't have reached if we'd met five years ago and tried to have the same conversation. So a lot of it is that. For me, data mesh is a method, an architectural pattern, or a set of principles and guidelines for how you can achieve decentralization, move away from the central team, and break apart from it.

That has been the big draw of the concept, because a lot of people relate to it and it resonates with them. And then from that summit of hope comes the valley of despair, where you start figuring out how to translate the idea into reality and how much you need to change. So walk us through your journey. How have you implemented data mesh? How have you taken these principles and brought them to life, or at least tried to? We'll see how you feel about it, whether you'd give yourself an A or a B; we'll figure that out later. But what have you done to bring it to life?

When we started trying to apply data mesh, we had decentralized some of our teams, but the technology underneath was still very much centralized and shared, almost a monolith with teams contributing to it. Everything was partitioned, or structured, around technology: we'd end up with one dbt project, or a monolith of Spark jobs. It was very technology-partitioned. When we started looking at data mesh we were really excited, because one of the big things we took from it was the term "data product". We thought, great, now we have the language to describe how we're going to break things down. Before that, we were trying to break lots of data down into chunks, but we couldn't find the words. Having the wording gave us a lot more power to communicate. So we started breaking our dbt monolith down into data products, and that has been one of our journeys: breaking it up and partitioning it. That was the big starting point. We had some teams that were already decentralized, and the question was how to get the technology to catch up. So dbt was the starting point.

So you went from a monolithic repo hosting all of your transformation logic to chopping it up and splitting it across multiple teams. Great. Once you did that, what did you find?

Well, you find that today's tooling and systems have gaps once you start to think about decentralization. A lot of the technologies we use in the data space promote a very centralized approach. I think it's becoming a bit less common, but with Airflow it used to be one Airflow for your whole organization, and dbt might recommend one big project. They say that less now, but there was definitely a period when that was the popular approach. So you break things apart, and now there are gaps between data products, where you have dbt here and dbt there, and that's when you realize other requirements start to come in. Two big ones that felt obvious for us were data governance and metadata, meaning knowing more about these data products at a meta level, plus observability and how you define it, and also how you start creating security policy between data products. It's the classic thing that happens when organizations move to microservices: all of a sudden you have to deal with monitoring between services, and with things breaking at the infrastructure level and in the network protocols between them. I think the data world isn't there yet. It's catching up, and I think it will get there one day, but today those were some of the gaps we started to see.

Let me give you an example. We had a dbt monolith with maybe 50 people working on different areas of it, and when we broke it down into data products, we realized we didn't really have clear ownership. Who owned it? People were contributing together, as maintainers maybe, but who owns this data asset? Which team is actually responsible for it? That's when we realized you need metadata over the top to start labeling things like that. We also had another symptom. Because all of our code was in one place, it was very easy for team A and team B to use each other's data without really realizing it, and to start creating dependencies. So we started trying to use metadata to say who should be allowed to use my data product, and that kind of thing starts to get teased out.

So cross-team discoverability, cross-team lineage and visibility, some sort of understandability, and governance and observability across teams started to become an important need for you.

Yeah, exactly. If you were an analyst or a scientist and everything was in one monolith, you'd essentially just open the browser, expand the tree, and try to find the data you were looking for. Once we broke things out into data products and that ability was gone, people started going to Slack looking for tribal knowledge: "Hey, does anyone know where I can find this data product? I used to see it somewhere in the monolith. Where is it now? Who owns it?" And things like that.

This is where discoverability and lineage became even more critical. Who is the owner? Should this person change this code, or should only the owner do that? Those turned out to be really positive things for us, actually. When it was one monolith, we just couldn't see that we were missing some of these quality components of data management.

So what did you end up using to solve that problem?

Initially we started really simple. dbt has a meta block, so we started defining metadata at that level. Then we built CI tooling around it in our build processes to read that metadata and make decisions. That confirmed the hypothesis we had: a metadata-aware environment would drive a lot of automation and a lot of data management decisions through systems rather than through humans. Then we moved to DataHub, to Acryl, and used it as the product to collect metadata, build the connections between data products, and treat that as a first-class citizen.
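Darren says that metadata declared in dbt's `meta` block was read by CI tooling to make decisions, including who owns a data product and who is allowed to depend on it. Below is a minimal sketch of such a check. It assumes a simplified, flattened view loosely modeled on dbt's `manifest.json`, in which each model has a `meta` dict and a `depends_on` list of upstream model names. The `owner` and `allowed_consumers` keys, the model names, and the `check_models` helper are hypothetical conventions for illustration. They are not dbt features and not AutoTrader's actual rules.

```python
"""Sketch of a CI check driven by dbt-style model metadata.

Assumes a simplified, flattened view of dbt's manifest: each model has a
`meta` dict and a `depends_on` list of upstream model names. The `owner` and
`allowed_consumers` meta keys are hypothetical team conventions, not dbt features.
"""
from typing import Dict, List


def check_models(models: Dict[str, dict]) -> List[str]:
    """Return policy violations; an empty list means the build may proceed."""
    violations: List[str] = []
    for name, model in models.items():
        owner = model.get("meta", {}).get("owner")
        if not owner:
            violations.append(f"{name}: no owner declared in meta")
            continue
        for upstream in model.get("depends_on", []):
            if upstream not in models:
                continue  # e.g. raw sources outside this check's scope
            up_meta = models[upstream].get("meta", {})
            if up_meta.get("owner") == owner:
                continue  # depending on your own team's models is always allowed
            if owner not in up_meta.get("allowed_consumers", []):
                violations.append(
                    f"{name}: team '{owner}' is not an allowed consumer of {upstream}"
                )
    return violations


if __name__ == "__main__":
    models = {
        "pricing_vehicle_valuations": {
            "meta": {"owner": "pricing", "allowed_consumers": ["advertising"]},
        },
        "advertising_ad_performance": {
            "meta": {"owner": "advertising"},
            "depends_on": ["pricing_vehicle_valuations"],
        },
        "finance_revenue": {
            "meta": {"owner": "finance"},
            "depends_on": ["pricing_vehicle_valuations"],
        },
    }
    for problem in check_models(models):
        print(problem)
```

A CI job would typically fail the build whenever the returned list is non-empty. Because the rules live in code next to the metadata, an undeclared cross-team dependency is caught at review time rather than discovered later.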

You started the conversation by saying you don't like point-and-click experiences or spending too much time in a UI if you can avoid it. So how have you applied those same principles to implementing data mesh? Are your data owners, producers, and consumers going into Acryl and typing out a ton of documentation or annotating data sets? How are they bridging the two worlds, the product experience on one side and the dbt meta YAML on the other?

I wish we'd fully solved this. Our preference is always to keep as much as possible in version control. One of the big initial challenges, and one that has made this journey feel frankly quite slow, is that no tooling exists to create a data product, at least none I'm aware of; if it does exist, it's hot off the press. We're heavy users of Kubernetes; that's how we manage a lot of our services. For those who don't know much about Kubernetes, one of its great features is that it works almost like a resource manifest with a database underneath: if I want to create a resource in the cloud, I can describe that resource as a YAML definition and create it. So we started writing definitions for data products, creating them as resources, and storing them in Kubernetes. Kubernetes is also nice because it has events: it can send an event when a new resource is created, when it's updated, and so on. So we've reached a point where we provision data products and have automated creating them. Again, that's very much data products as code, I guess, and we try to do the same with metadata systems and other tools wherever we can. That's mostly so that we have governance over the metadata, can be active with it, and can automate things with it.

So you've essentially used Kubernetes as your control plane for data, and any time a change happens, your operators publish metadata as events that flow into Acryl. That's why everything stays fresh and live?

That's essentially how we've implemented it. On top of that we use a broader view. The infrastructure view lives in Kubernetes, the data product view is wrapped around it, and we use DataHub for that to complete the picture. A product manager, for example, would gravitate toward the view in DataHub. My data platform team probably gravitates toward the Kubernetes world, because they're looking at BigQuery provisioning, Snowflake provisioning, object storage, service accounts, that kind of thing.
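Darren describes defining data products as Kubernetes resources and relying on Kubernetes events to keep the metadata in DataHub current. Below is a minimal sketch of the translation step in such an operator, under heavy assumptions. The `data.example.com/v1alpha1` API group, the `DataProduct` kind and its spec fields, the identifier format, and the record shape are all hypothetical. The function returns plain dictionaries and does not call any real DataHub API. The only Kubernetes convention it relies on is that watch events carry a `type` (such as `ADDED`, `MODIFIED`, or `DELETED`) and the affected `object`.

```python
"""Sketch: data products as Kubernetes-style custom resources.

Everything here is hypothetical: the API group, the DataProduct kind, the
spec fields, the identifier format, and the shape of the emitted records.
"""
from typing import Any, Dict, Optional

# A DataProduct resource as it might be written in YAML and applied with kubectl,
# shown here as the equivalent Python dict.
EXAMPLE_RESOURCE: Dict[str, Any] = {
    "apiVersion": "data.example.com/v1alpha1",
    "kind": "DataProduct",
    "metadata": {"name": "vehicle-pricing", "namespace": "pricing"},
    "spec": {
        "owner": "pricing-team",
        "domain": "pricing",
        "description": "Daily valuations for listed vehicles",
        "outputs": [{"type": "bigquery", "table": "pricing.vehicle_valuations"}],
    },
}


def to_metadata_record(event: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Translate one watch event into a catalog record, or None to ignore it."""
    obj = event.get("object", {})
    if obj.get("kind") != "DataProduct":
        return None
    meta = obj.get("metadata", {})
    # Illustrative identifier only; not a real DataHub URN format.
    urn = f"dataproduct:{meta.get('namespace', 'default')}/{meta['name']}"
    if event["type"] == "DELETED":
        return {"action": "soft_delete", "urn": urn}
    if event["type"] in ("ADDED", "MODIFIED"):
        spec = obj.get("spec", {})
        return {
            "action": "upsert",
            "urn": urn,
            "owner": spec.get("owner"),
            "domain": spec.get("domain"),
            "description": spec.get("description", ""),
            "assets": [o.get("table") for o in spec.get("outputs", [])],
        }
    return None  # e.g. BOOKMARK or ERROR events carry no product change


if __name__ == "__main__":
    for event_type in ("ADDED", "MODIFIED", "DELETED"):
        print(to_metadata_record({"type": event_type, "object": EXAMPLE_RESOURCE}))
```

In a real operator, the events would come from a watch on the custom resource, for example through an official Kubernetes client library, and each record would be sent on to the metadata platform. Keeping the translation a pure function makes it easy to unit-test separately from the cluster.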

Right. So you've fully embraced the shift-left philosophy: defining data, metadata, and everything else as code, checking it in, and versioning it. And I guess you're waiting for the promised land, where this and the product experience work bidirectionally and stay in sync, so you can move between the two worlds without losing context.

Yes, and I think that's one of the big challenges with data mesh today: the cost of applying these principles is still very high. But you don't need to apply them all in one go; we've been progressing toward this as a journey. I still really hope we'll see more emerging technology. It's just hard because, as I said before, when we moved into a data mesh world, almost every technology owned one piece of the stack. You have one company that owns scheduling, like Airflow, and another that owns transformation, for example. What you need is to pivot that around and have somebody say: we'll let you define data products, and they're going to span multiple technologies. That's a hard problem, but it doesn't feel unsolvable. We did it internally, and other companies are doing it, so it must be possible. I'm also encouraged that things like DataHub exist today and that we have far better observability tools. Five years ago we had no observability tools for data that came even remotely close to what you could get for monitoring a microservice; they just didn't exist. So I feel hopeful, but we know it's a journey we all go on together.

Definitely a journey. The DataHub project started out by saying: let's just bring visibility. "Let there be light", I guess, is how we started: let's shine a light on every corner of your data stack. Right now we're just talking about dbt, but in general we talk about dbt, what's upstream of it, and further upstream. In fact, when we look at our telemetry to see what people connect DataHub to, guess what the number one source is? Postgres. Postgres is still winning and still dominant, because a lot of data lives in Postgres. So width and breadth were the central things we went after, to make sure visibility came first. Now we're starting to see stories where people use it for definitional data. Checkout.com, for example, has built data products: they define them and register them in DataHub, and on the back of the change stream, once a data product is registered, they provision things behind the scenes, such as granting access or even setting up the tables. So I think we're starting to see the next step you were alluding to happen, even in our community.

We've talked a lot about what you did and the technology you used. Let's talk about the things that didn't work, and the things you haven't implemented yet.

I think one of the hardest things that has come out of data mesh is the architectural strain it can put on an organization. We've decentralized, and now we have data teams focused on domains and so on. That goes well, but it's much harder to encode at the platform level what good architecture looks like for software, and harder still for data products. What do you name a data product? When people did data warehousing with facts and dimensions, there were books and books of recommended practices for naming tables based on particular characteristics of the table. I've yet to find the equivalent for data products. In my background building APIs, you'd have established design patterns and so on; we still lack that here. So we spend a lot of time thinking about what to call things. Is it performance data? Is it metrics? What words should we use? We also need to encode design patterns, because the patterns you'd use to create smaller units of data differ from the ones you'd apply to one humongous thing. That has been really difficult.

Then, without a centralized team, it's much harder to keep the quality of the practice high. It takes a lot of work that you wouldn't need with a centralized team. We also see another scenario: if a data team is close to the product team and also very skilled at engineering, someone might suggest that they stop doing data work and do more product work instead. So you get stresses like that. You've decentralized and generalized more, and the tension is that sometimes you want specialists and want to hold on to them. As a data leader, you wouldn't face that challenge if you just had a box labeled "centralized data team" with its allocated headcount. So that's definitely one of the challenges we've come up against, and quality is a big part of it: how do you work out whether one data product is of higher quality than another? We're starting to make a lot of progress there, again using metadata. We label heuristics such as the number of incidents and who the owners are, and we're building up what is almost a metadata quality framework.
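To make the idea of a metadata quality framework concrete, here is a minimal sketch that combines the heuristics Darren mentions (number of incidents and whether an owner is declared) into a score for comparing data products. The extra signals (a description being present, freshness checks passing), the weights, the 90-day window, and the `ProductMetadata`, `quality_score`, and `rank` names are all hypothetical choices for illustration. They are not AutoTrader's framework.

```python
"""Hypothetical metadata-driven quality score for data products."""
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProductMetadata:
    name: str
    owner: Optional[str]            # owning team, if declared in metadata
    incidents_last_90d: int         # incidents attributed to this product
    has_description: bool           # documentation present in the catalog
    freshness_checks_passing: bool  # latest freshness check succeeded


def quality_score(p: ProductMetadata) -> int:
    """Combine metadata heuristics into a 0 to 100 score (weights are illustrative)."""
    score = 0
    if p.owner:
        score += 40
    if p.has_description:
        score += 20
    if p.freshness_checks_passing:
        score += 20
    # Up to 20 points for reliability, minus 5 per incident, never below 0.
    score += max(0, 20 - 5 * p.incidents_last_90d)
    return score


def rank(products: List[ProductMetadata]) -> List[str]:
    """Product names from highest to lowest score; ties keep their input order."""
    return [p.name for p in sorted(products, key=quality_score, reverse=True)]


if __name__ == "__main__":
    catalog = [
        ProductMetadata("legacy_ad_clicks", None, 3, False, True),
        ProductMetadata("vehicle_valuations", "pricing", 0, True, True),
    ]
    for product in catalog:
        print(product.name, quality_score(product))
    print(rank(catalog))
```

Because the score is a plain function of catalog metadata, it can be recomputed automatically whenever that metadata changes, instead of being assigned by a central reviewer.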

Don't beat yourself up too much; naming is hard. I think it's one of the two hard problems in computer science, so it will probably stay a challenge. Wait until you get to caching data products; that'll be the next hard problem. On data modeling, I completely agree. Even at LinkedIn, which, as I said, went on this journey from centralized to decentralized, we hit this very quickly in the microservices world. We saw data modeling practices starting to fracture, and the initial reaction was to take control back. The first thing we did was form a data model review committee. Any LinkedIn alumni or current LinkedIn employees tuned in might start to shudder, because the DMRC, the Data Model Review Committee, was a very traumatic experience for the whole company. It was great for centralized control, but it caused a lot of delays as products went to production, because halfway through shipping a product you could suddenly get caught and told to go back and redesign your schema or your data model. So that team spent a year or two asserting control, and then the next year or two trying to tool themselves out of existence. I think we'll see that pattern in real-world deployments as well. Central teams will have those anxiety attacks when they start decentralizing and will try to assert control through gatekeeping. Then they'll realize gatekeeping doesn't work. So you have to tool yourself out of existence: find a way to declare what good looks like, describe it programmatically, and hand it to a platform that can make it happen. Some folks, including us, are doing a little of that in our product. But in my opinion, the future is being able to describe what good looks like and then standardize those practices without much human gatekeeping.

I'd love to pull up one of the audience questions at this point, before we move on to the future. The question is whether you're using data contracts. We've talked a lot about data products, and thankfully we didn't get into defining what a data product is, because that would be a whole different hour-long conversation. So let's move on to data contracts. Are you using them? How is the contract structured? Are you using a standard template, or something else?

Yeah, so we try to shift left a lot of the contracts, or rigor, around this stuff. For example, we had a really big push that if we were going to ingest data into the analytical plane, into our data platform, we would expect everything to be using Avro schemas and Kafka. Therefore you're shifting responsibility back to a producer team: they need to make sure there is a really good contract there, and we've got to a place where we have versioning, really good things like that, and checks. So we have that. But between data products, this is definitely one of the areas I'm very interested in, because we've been doing data contracts more implicitly. We have a bunch of standardized metadata tests and validators, and we try to detect schema changes in a very automated way and then flag some of these for people to look into. But I'm very much interested in where the industry is moving now that we're thinking about data contracts. I find this space quite surprising, because everyone's talking about it, or was, but it was treated as very revolutionary rather than evolutionary. As a technologist, as an engineer, this feels like a very obvious thing I would do if I was building an API, for example: I would expect a contract and a schema in place. But as in most instances, the data world is always a bit behind on some of these practices. Still, it seems really positive, and it's worked in a lot of other software engineering domains.
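
Darren mentions Avro schemas on Kafka with versioning and checks, plus automated detection of schema changes between data products. A minimal sketch of such a detector is below, assuming Avro-style record schemas held as plain dicts. It is a deliberately simplified heuristic, not the full Avro schema-resolution rules or a schema registry's compatibility modes: it flags removed fields, type changes (ignoring Avro's legal type promotions such as int to double), and added fields without a default (which a reader using the new schema could not fill in when reading older data). The `CarSale` schema and its fields are hypothetical.

```python
def field_map(schema: dict) -> dict:
    """Index an Avro-style record schema's fields by name."""
    return {f["name"]: f for f in schema.get("fields", [])}


def detect_schema_changes(old: dict, new: dict) -> list[str]:
    """Return human-readable changes, prefixing likely consumer-breaking ones.

    Simplified heuristic: does not apply Avro type promotion rules.
    """
    old_fields, new_fields = field_map(old), field_map(new)
    changes = []
    # Fields consumers may still read but that no longer exist.
    for name in sorted(old_fields.keys() - new_fields.keys()):
        changes.append(f"BREAKING: field '{name}' removed")
    # New fields need a default so records written before the change stay readable.
    for name in sorted(new_fields.keys() - old_fields.keys()):
        if "default" in new_fields[name]:
            changes.append(f"ok: field '{name}' added with default")
        else:
            changes.append(f"BREAKING: field '{name}' added without default")
    # Any type change is treated as breaking in this simplified check.
    for name in sorted(old_fields.keys() & new_fields.keys()):
        if old_fields[name]["type"] != new_fields[name]["type"]:
            changes.append(
                f"BREAKING: field '{name}' type changed "
                f"{old_fields[name]['type']} -> {new_fields[name]['type']}"
            )
    return changes


# Hypothetical schema versions for a car-sales data product.
v1 = {"type": "record", "name": "CarSale", "fields": [
    {"name": "make", "type": "string"},
    {"name": "price", "type": "int"},
]}
v2 = {"type": "record", "name": "CarSale", "fields": [
    {"name": "make", "type": "string"},
    {"name": "price", "type": "double"},
    {"name": "mileage", "type": ["null", "int"], "default": None},
]}

changes = detect_schema_changes(v1, v2)
breaking = [c for c in changes if c.startswith("BREAKING")]
for change in changes:
    print(change)
```

In a pipeline, a non-empty `breaking` list could fail a CI check or notify the owning team, which matches the "detect automatically, then flag for people to look into" approach described above.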

So thanks for that response. I think there are definitely a lot of sociological processes in the data world, where we get attracted to a new concept, a new term, we all rally around it and try to make it a reality, and then in a couple of years disillusionment starts setting in, because hard problems continue to stay hard. Then often a new term emerges and we all rally around that. The hard problems around data quality, data governance, and metadata management have continued to stay hard and challenging, and at different points in the evolution of the data industry we've picked up different phrases as the rallying cry to go and do something about them. In fact, I think of data mesh as an example of that, and I think of data contracts as something similar. Gartner, I think earlier this week or maybe last week, published an update saying data mesh is now dead, or about to disappear, that they're no longer tracking it, or something like that. So what is your advice for others who are thinking of starting, continuing, or abandoning their data mesh strategies?

Yeah, I can't tell if this is just a marketing cycle. I'm expecting the "data mesh is dead, long live data mesh" articles next, the classic tropes.

I mean, I think Ryan Dolley actually did a quick video last week or this week. So "modern data stack is dead" is the new marketing cycle, and then data mesh is either dead or about to be dead; that's the other conversation doing the rounds. But anyway, what do you think?

Well, I think that throughout my career as a technologist, as an engineer, organizations have had some level of centralization or decentralization around technology, period. So to me, data mesh was all about the idea that particular organizations don't want to be centralized; it becomes too much of a constraint for them to succeed with data. That isn't for everybody. I wouldn't recommend that an organization of five people, like a startup, go all in on data mesh. I think it comes with a particular size and need: you outgrow the central team, just as most other technology problems come with scale. So to me, data mesh is all about giving us the language and a set of principles and values for how to succeed at decentralization. Maybe they aren't right; maybe some are good and some aren't quite as good, or they can't quite get there because the technology isn't ready today. It may be ready in three or five years, or maybe it never will be. But I can't imagine a world where there isn't a reasonable number of organizations of a certain size that need to be more decentralized in how they work with data. So on some level I don't worry whether it wins or dies, because of that. The bit I really do hope succeeds is that the technology gets there and rises to the occasion to make it easier.

I think the biggest reason I'm passionate about that is that one of the things that has changed for my organization by doing data mesh is data product thinking, product thinking applied to data. When we went and built some of our data products... how do I describe this? Sometimes data is so fine-grained it's like grains of sand on the beach, and you can't see that you could build a castle out of it. So when we start to group data, catalog it, and structure things, you go, "actually, we could go further here." As an example, we get lots of observations about car sales and other things, and when we could see the shape of that, we realized we could go further: we could start to bring in confidence intervals on sales, look ahead, and do forecasting. We could always have done that; the data was always there. Having shapes around data products was the exciting thing. So to conclude, whether it wins or fails, what matters is whether any of that lives on, and I think it should for any organization. That's the key thing. If data mesh falls out of popularity, I imagine there will just be another architectural blueprint for how to do decentralization, because I can't see that need going away in every company around the world. So long live data mesh principles.

Yeah, yeah, sure. Okay, great. So on that note, you've got the wind at your back: you've done data as code, metadata as code, shift left, and from what it seems you're building a control plane for data, with Kubernetes as your provider layer. What are you excited about for the future? Is it continuing this decentralization game and making self-serve data and high-quality data across teams a reality? Is it AI? What does the future look like for your teams?

Well, I think everybody is excited about LLMs, aren't they? So that's a given. For data mesh and data products, although we've made a lot of progress, there's just so much to do. We run a lot of our operational services, our microservices, our APIs, and our operational systems on Kubernetes, and we applied a lot of the same principles operationally that we're applying to the data world today. It completely transformed how our organization operates: how we deploy services, just how mature we are as a technology business. I see such an obvious road to keep going that way with data mesh: increase our profits, improve our time to market, find better and more engaging ways of using our data, and shorten the cycles around how we build an ML product and take it to market. So that's probably one of the largest areas that excites me about data mesh: the possibility of getting more out of our data. Where we're seeing this happen a lot today is that it's now starting to show up in more of our business areas, with more emphasis on, say, marketing and customer experience, and on how we bring data into those spaces. By unbundling from the central team and building data teams and data products around those areas, we'll unlock a lot more than we would have when we had that centralized team with everybody fighting over it. So that's what excites me, and possibly LLMs, depending on whether it's a bubble or not and how you view that, I guess.
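
The conversation above frames the platform as a "control plane for data" with Kubernetes as the provider layer, applying the same principles AutoTrader uses for its services. A minimal sketch of the underlying reconciliation idea is below: teams declare the desired state of their data products, and a controller compares it with the observed state and plans actions, rather than a human gatekeeper approving each change. The `DataProductSpec` fields, product names, and action strings are hypothetical; this is plain Python illustrating the controller pattern, not the Kubernetes API or AutoTrader's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProductSpec:
    # Hypothetical declarative spec, loosely analogous to a Kubernetes custom resource.
    name: str
    owner: str
    output_ports: tuple[str, ...] = ()


def reconcile(desired: list[DataProductSpec],
              observed: dict[str, DataProductSpec]) -> list[str]:
    """Plan the actions needed to converge observed state toward desired state.

    Mirrors the Kubernetes controller idea: the platform, not a reviewer,
    closes the gap between what teams declare and what actually exists.
    """
    actions = []
    desired_by_name = {spec.name: spec for spec in desired}
    for name, spec in sorted(desired_by_name.items()):
        current = observed.get(name)
        if current is None:
            actions.append(f"create {name}")
        elif current != spec:
            actions.append(f"update {name}")
    # Anything running that nobody declares any more gets cleaned up.
    for name in sorted(observed.keys() - desired_by_name.keys()):
        actions.append(f"delete {name}")
    return actions


# Hypothetical declared and observed states.
desired = [
    DataProductSpec("car-sales", "sales-data-team", ("warehouse-table",)),
    DataProductSpec("vehicle-pricing", "pricing-team"),
]
observed = {
    "car-sales": DataProductSpec("car-sales", "sales-data-team"),
    "old-report": DataProductSpec("old-report", "bi-team"),
}
print(reconcile(desired, observed))
```

A real controller would run this comparison repeatedly and execute the planned actions against actual infrastructure; the sketch only shows the diffing step that makes declarative, self-serve provisioning possible.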

In fact, a couple of episodes ago I was chatting with Hema, who's heading up Kumo.ai, and we were talking about the needs of AI, and guess what: metadata is one of the biggest things. AI teams need to do a couple of things well. One is reproducibility, understandability, and explainability, and the second is actually prompt engineering, for RAG architectures and things like that. So it's interesting how the worlds of metadata and AI are also coming together around the same time.

With respect to data mesh, I definitely feel like the tooling coming together, like you said, is a theme I'm excited about. Even within our existing community and customer base, we're seeing a lot more cases where customers are talking about data products and actually using data products in the catalog for real, not just asking about them. We've gone from the stage, maybe a year ago, where people were just interested because DataHub had data products, to people actually having them, using them, and asking for more: data product lineage, input and output ports. All of these things we've promised the community are actually going to come out this year, and I'm really excited to see what folks do with them. The same goes for data contracts: we've had them in the product for a while, but we're starting to see people really want to connect the two and see them stitched together. At the last Town Hall, Stefan from KPN talked about a full-on data product spec that he's developing on top of DataHub. So it's pretty exciting to see that, despite the overall hype cycle around the term data mesh going down, a lot of practical implementations are coming to life.

And I think of what you said about human comprehension being a hard problem. The more data we generate and create as industries and as companies, the more we need to simplify and communicate using simpler terminology and simpler, more coalesced objects that we can all rally around and govern, and that has definitely been an advantage we've all gained. A relational person would say, "well, that's what schemas in databases are supposed to be," and they're probably right. But as we've split apart databases and fractured the world into GraphQL schemas, OpenAPI schemas, Kafka topics, S3 buckets, and a bunch of Snowflake tables, the need for that logical layer on top has definitely come back, and it is showing promise. I'm excited for a future of staying polyglot and poly-implementation at the lower layers, because that's where innovation happens, while still staying harmonized and logical at the level of understanding.

Yeah, I think metadata systems, but especially DataHub, have the potential to be the control plane that we have to develop, and I think that's really exciting. I do hope that Acryl, the DataHub community, and the open-source community realize that and build around it. By having these materialized in a system, we can make building data products and provisioning them in different cloud environments a lot more trivial than it is today. I do feel there will be a point when enough technology is available to do this that you will really see it explode; it just needs that moment. But more people are doing it than you probably realize. And the bit that I think will live on, with or without data mesh, is data products: this idea of encapsulating some of your data and describing it as a system. I feel that is a really powerful tool to come out of data mesh, as a vocabulary, as a concept, and as a way of building systems in a more decentralized way than, for example, a star schema.

Well, it's been a pleasure having you on the show, Darren, and it's been such a great chat that I almost didn't keep track of time; we went a good 15 minutes over. Hopefully the audience had a good time listening, and thanks for your questions. We'll see you on the internet, as they say. It's been a pleasure talking to you, Darren, and a pleasure collaborating with you over the years as well, so I'm looking forward to building great things together.

Thanks for having me, Shashank, and thanks to everybody who joined and listened, and for the questions we got. Thank you very much.
